How to Back Up Virtual Machines to Object Storage with Borg, Rclone, and Cron

This article explains in depth how to back up Virtual Machines to S3-compatible object storage using Borg and Rclone, with Cron scheduling the backups. Borg is a deduplicating backup program that also supports compression and authenticated encryption, providing an efficient and secure way to back up data. Rclone is an open-source, multi-threaded command-line program for managing or migrating content on cloud and other high-latency storage.

For the purpose of this tutorial, we will use a CPU-powered Virtual Machine offered by NodeShift; however, you can replicate the same steps with any other cloud provider of your choice. NodeShift provides the most affordable Virtual Machines at a scale that meets GDPR, SOC2, and ISO27001 requirements.

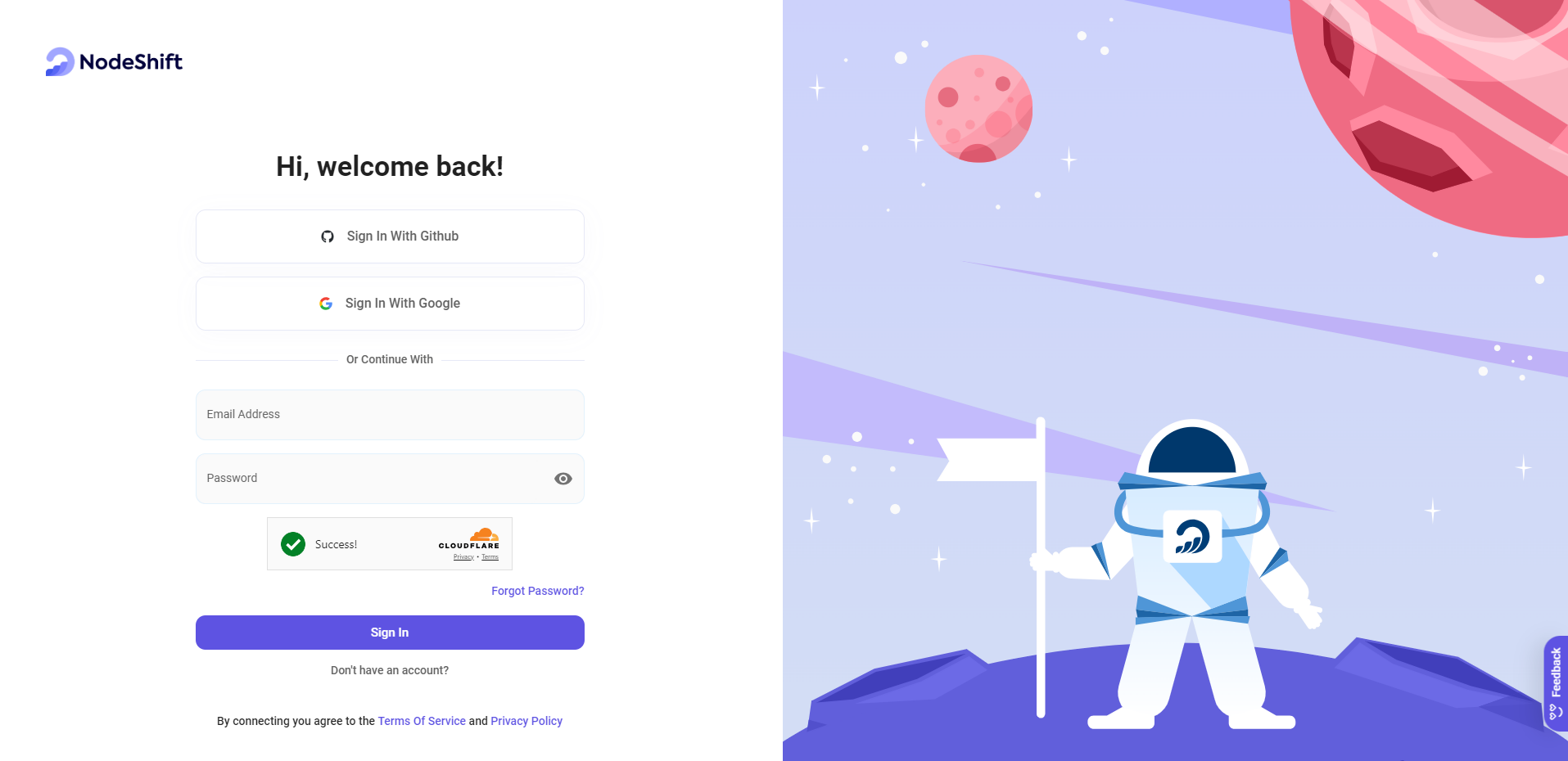

Step 1: Sign Up and Set Up a NodeShift Cloud Account

- Visit the NodeShift Platform and create an account.

- Once you have signed up, log into your account.

- Follow the account setup process and provide the necessary details and information.

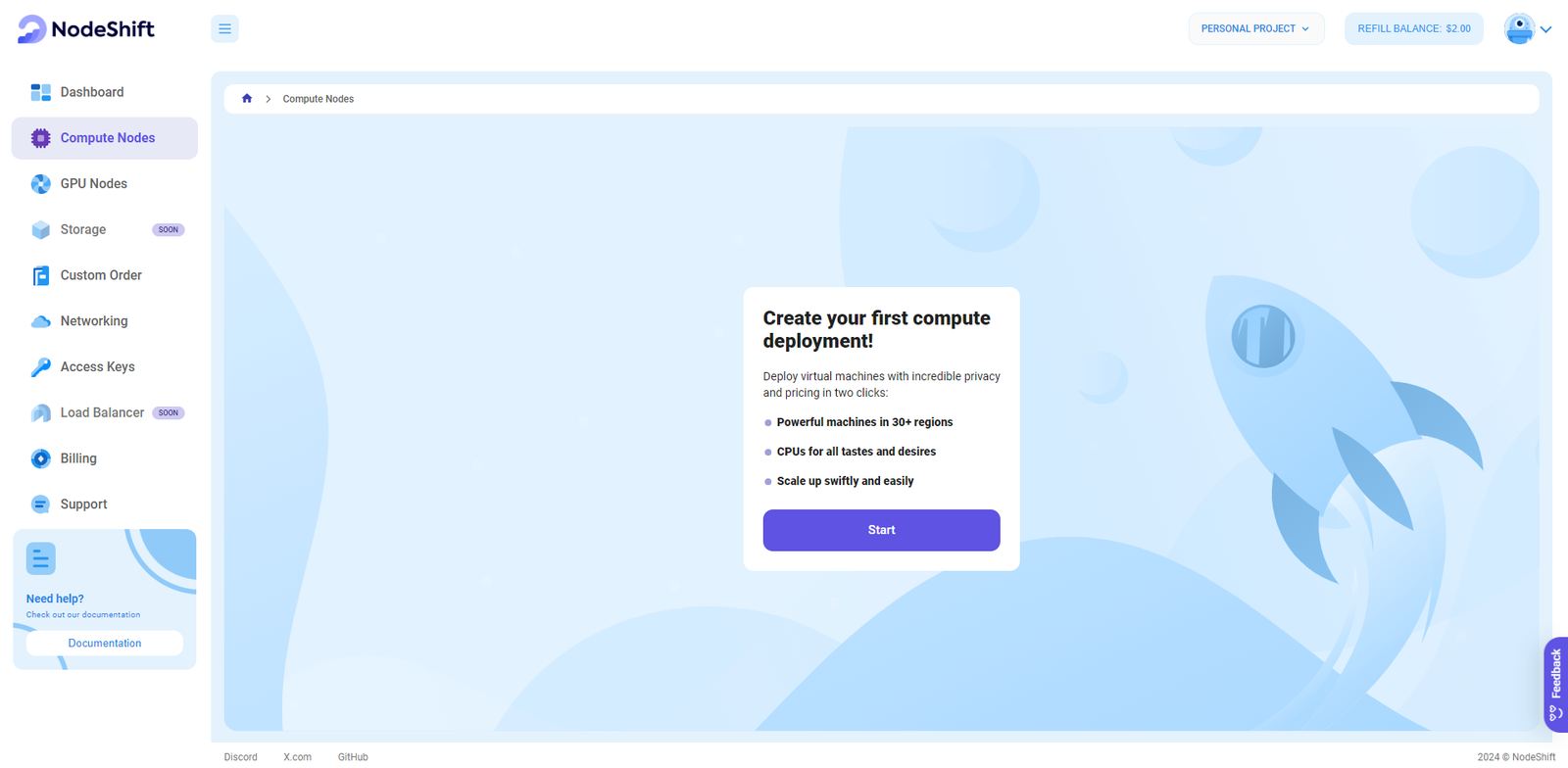

Step 2: Create a Compute Node (CPU Virtual Machine)

NodeShift Compute Nodes offer flexible, scalable, on-demand resources such as NodeShift Virtual Machines, which are easy to deploy and come in general-purpose, CPU-powered, and storage-optimized configurations.

- Navigate to the menu on the left side.

- Select the Compute Nodes option.

- Click the Create Compute Nodes button in the Dashboard to create your first deployment.

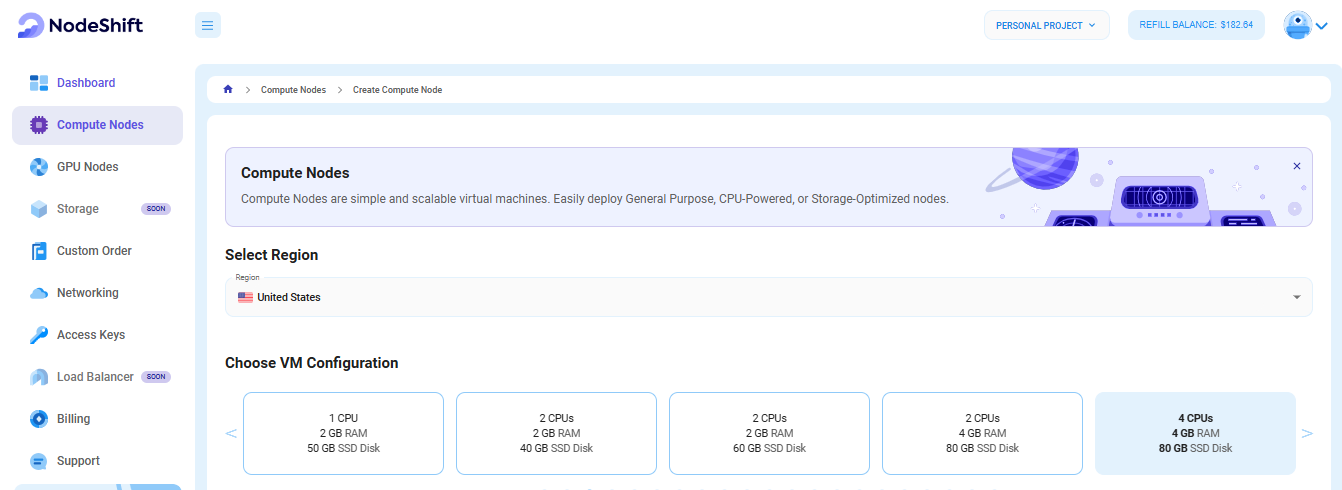

Step 3: Select a Region and Choose VM Configuration

- In the "Compute Nodes" tab, select a geographical region where you want to launch the Virtual Machine (e.g., the United States).

- In the "Choose VM Configuration" section, select the number of cores, amount of memory, boot disk type, and size that best suits your needs.

- No specific VM configuration is required for this tutorial, since the VM itself is what we will be backing up, so you can choose any configuration. If you have already deployed a VM, you can follow the same steps to back it up.

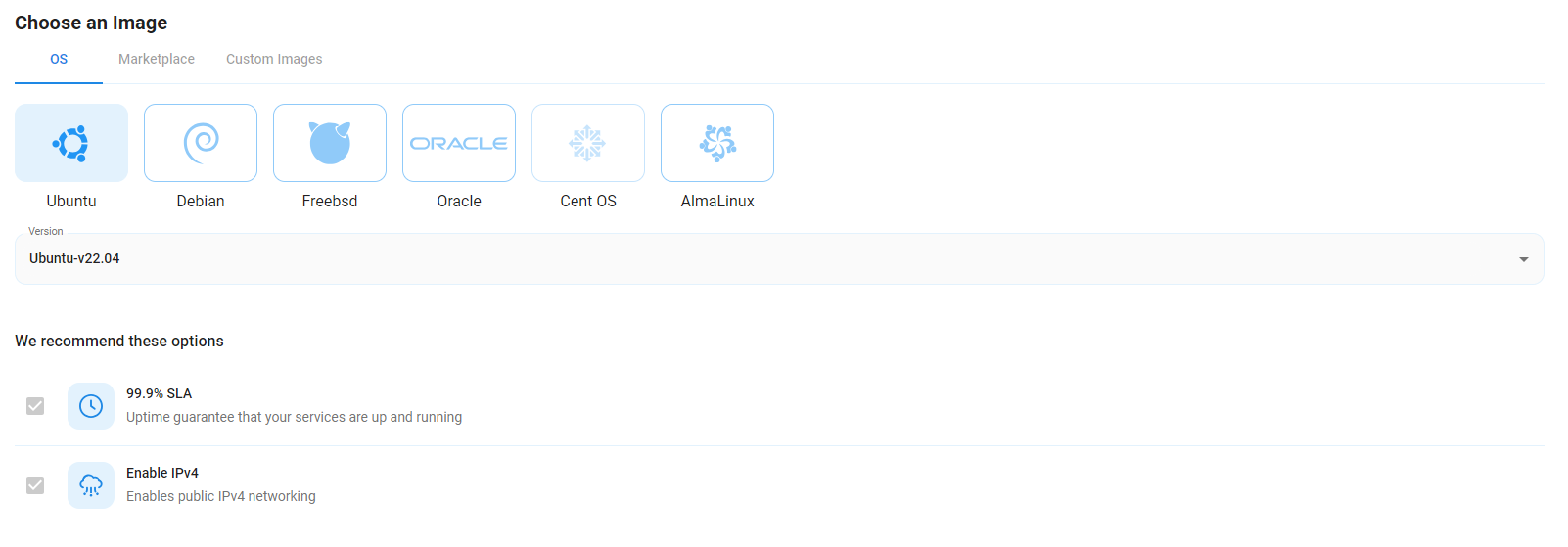

Step 4: Choose an Image

Next, you will need to choose an OS for your Virtual Machine. We will deploy the VM on Ubuntu, but you can choose according to your preference. Other options like CentOS and Debian are also available.

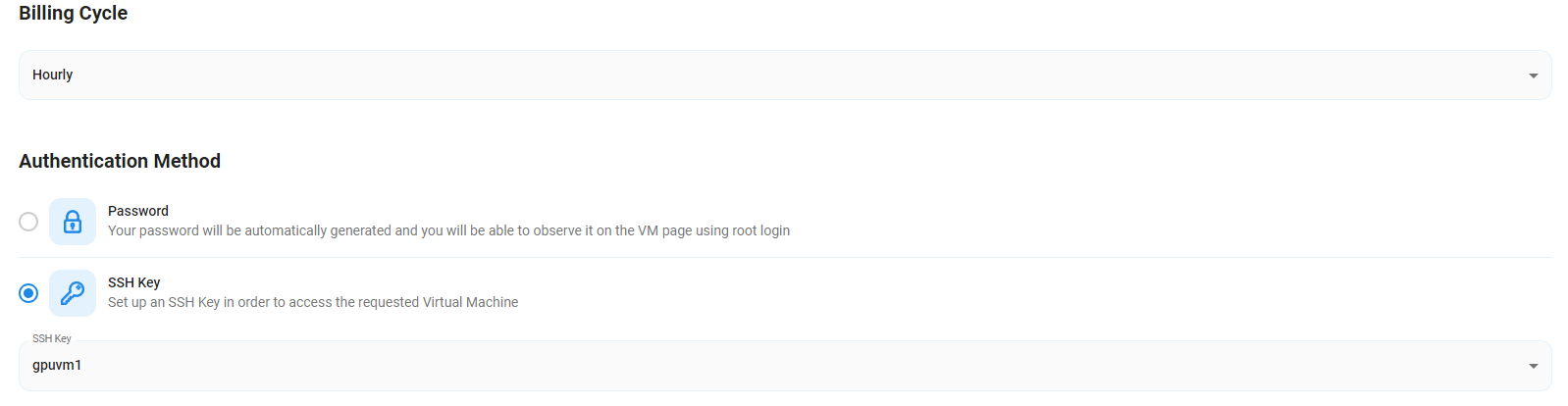

Step 5: Choose the Billing Cycle & Authentication Method

- Select the billing cycle that best suits your needs. Two options are available: Hourly, ideal for short-term usage and pay-as-you-go flexibility, or Monthly, perfect for long-term projects with a consistent usage rate and potentially lower overall cost.

- Select the authentication method. There are two options: Password and SSH Key. SSH keys are a more secure option. To create them, refer to our official documentation.

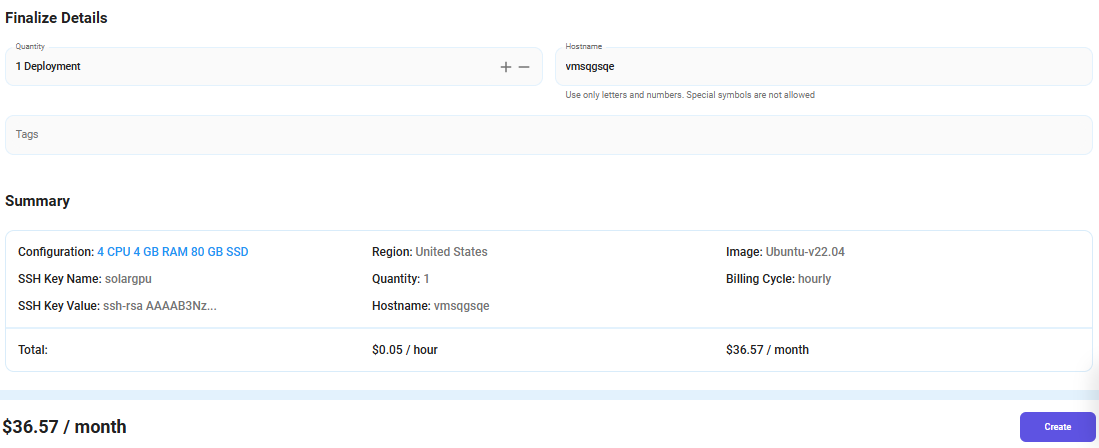

Step 6: Additional Details & Complete Deployment

- The 'Finalize Details' section allows users to configure the final aspects of the Virtual Machine.

- After finalizing the details, click the 'Create' button, and your Virtual Machine will be deployed.

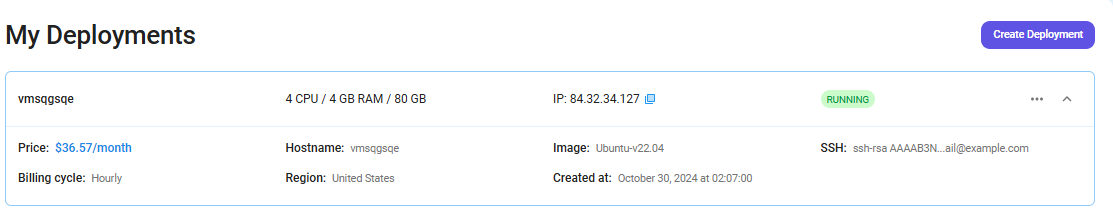

Step 7: Virtual Machine Successfully Deployed

You will get visual confirmation that your node is up and running.

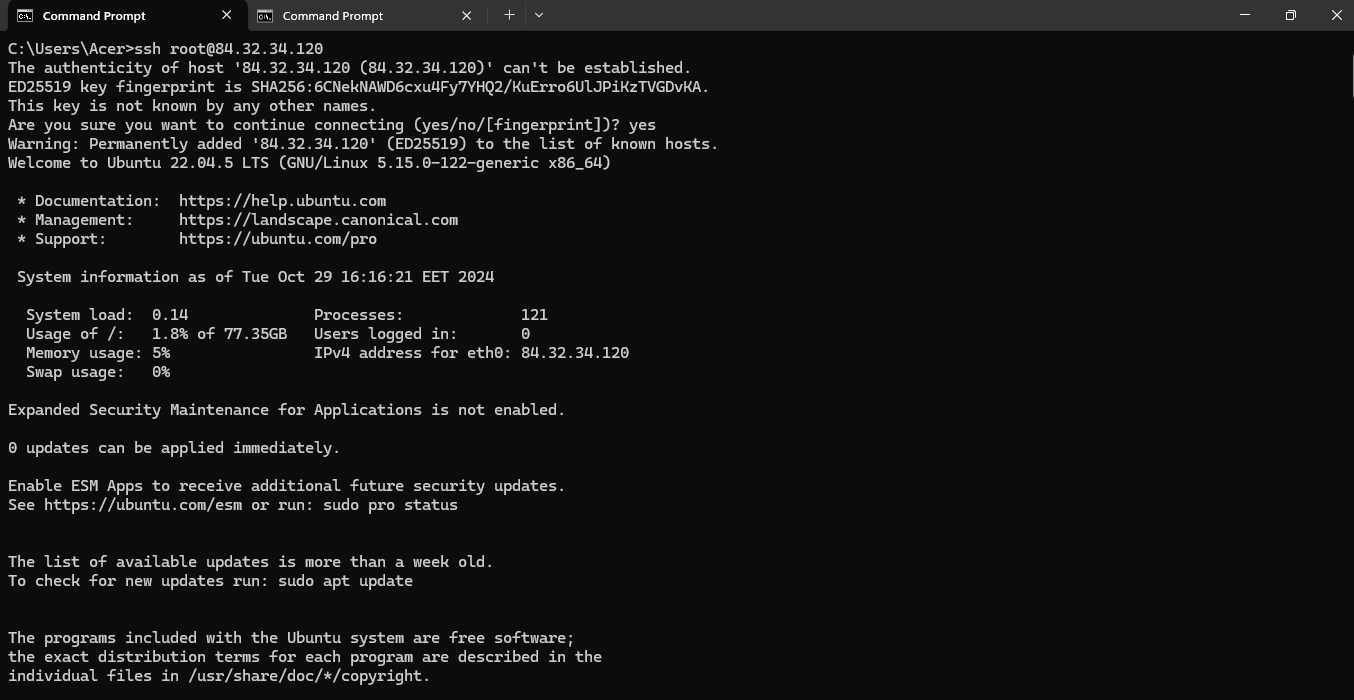

Step 8: Connect via SSH

- Open your terminal

- Run the SSH command, replacing the username and IP address with your VM's details.

For example, if your username is root, the command would be:

ssh root@<your-vm-ip>

- If SSH keys are set up, the terminal will authenticate using them automatically.

- If prompted for a password, enter the password associated with the username on the VM.

- You should now be connected to your VM!

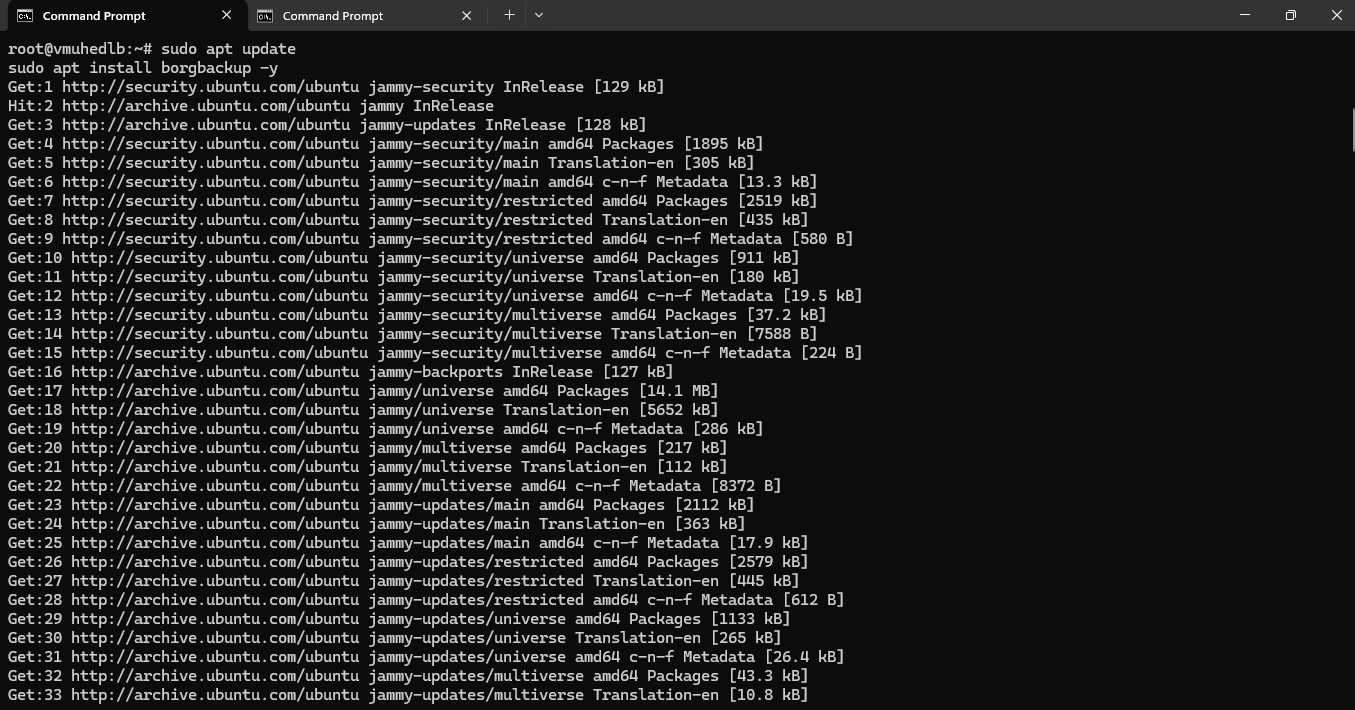

Step 9: Update Your Server and Install BorgBackup on Your VM

Run the following commands to update your server and install BorgBackup on your VM:

sudo apt update

sudo apt install borgbackup -y

Thanks to deduplication, Borg is suitable for daily backups, since only changes are stored after the first run. Its authenticated encryption makes it suitable for backing up to targets that are not fully trusted.

Then, run the following command to verify the installation:

borg --version
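The commands in this guide use Borg 1.x syntax, which matches what Ubuntu's borgbackup package installs at the time of writing; Borg 2.x renames several commands, including `borg init`. A minimal sketch for checking the installed major version, assuming `borg --version` prints a line such as `borg 1.2.8` (the helper name `borg_major_version` is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the major version of the installed Borg,
# e.g. "1" when `borg --version` prints "borg 1.2.8".
borg_major_version() {
  local out
  out="$(borg --version)" || return 1
  out="${out#"borg "}"        # drop the leading "borg " prefix
  printf '%s\n' "${out%%.*}"  # keep everything before the first dot
}

# Warn when the installed Borg is not a 1.x release.
if command -v borg >/dev/null 2>&1 && [ "$(borg_major_version)" != "1" ]; then
  echo "Warning: this guide assumes Borg 1.x" >&2
fi
```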

Step 10: Configure S3 Storage as a Backup Repository

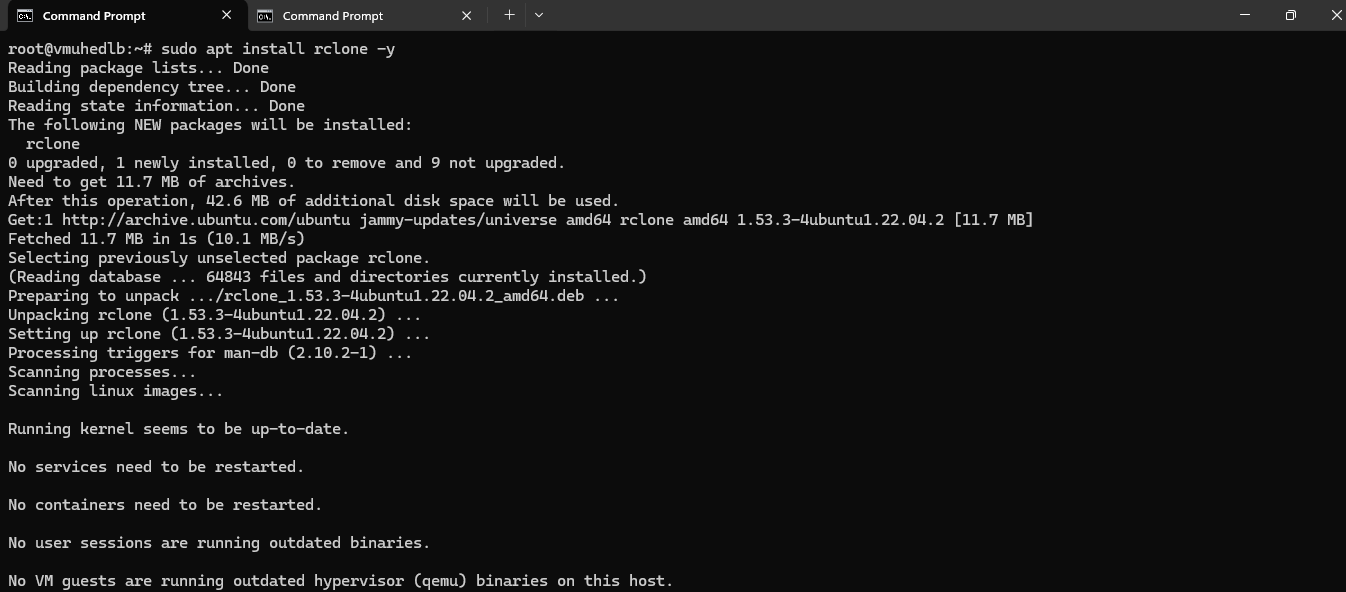

We’ll use rclone to mount the S3 storage as a local directory that Borg can use as its repository. Run the following command to install rclone:

sudo apt install rclone -y

Its capabilities include sync, transfer, crypt, cache, union, compress, and mount. The rclone website lists its supported backends, including S3 and Google Drive.

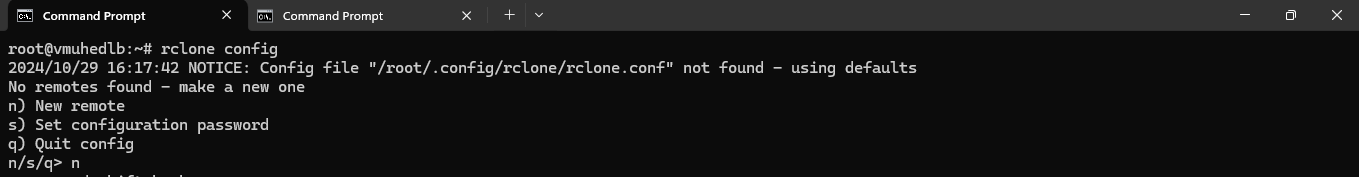

Run the following command to configure the S3 Storage Endpoint in Rclone:

rclone config

Follow the prompts:

- Type: s3

- Provider: Other S3 provider

- Access Key: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

- Secret Key: xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

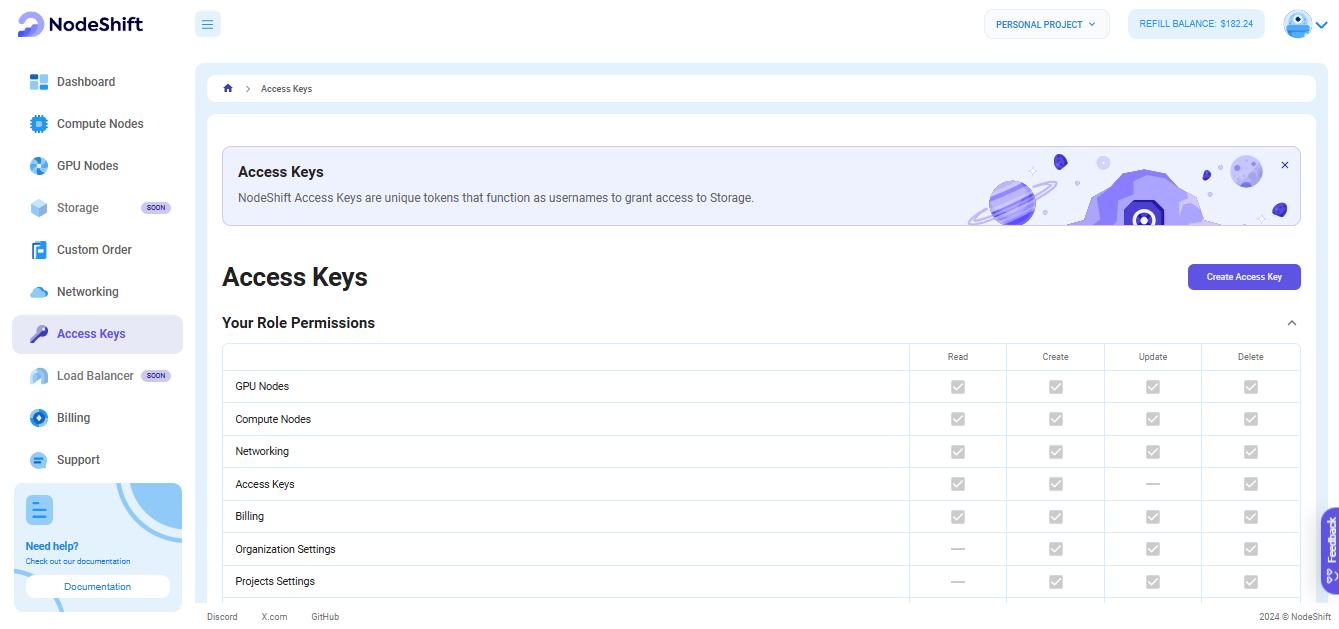

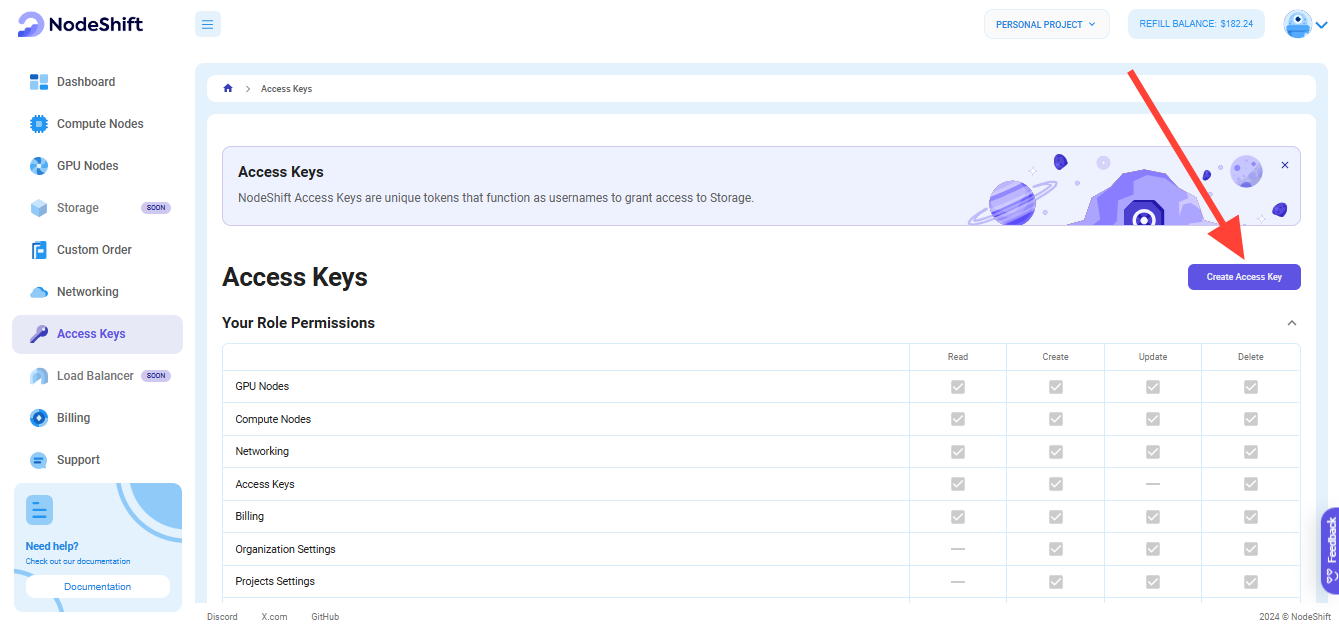

How to Create an Access Key

Access keys are credentials that grant access to S3-compatible resources and enable deployments via GitHub Actions and Terraform. NodeShift uses these keys automatically to verify user identity and protect your files, but they can also be used for direct access to the data, for example with the AWS CLI.

Generate Credentials

Navigate to the Access Keys page. On your first visit, the page will be empty.

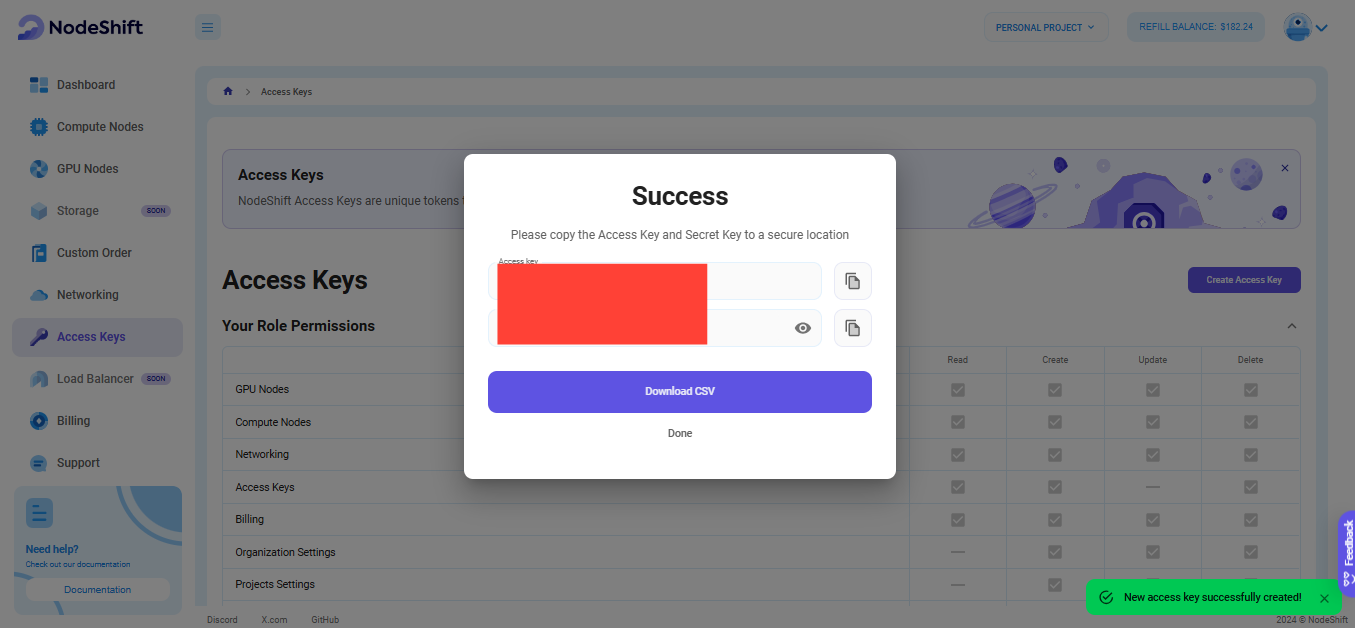

To start, create your first key. Click Create Access Key.

When a key is generated, it is automatically saved to your NodeShift account for future use. An access key consists of two items: a Key ID and a Secret value. The Secret value will not be accessible after you close the generation window, so save it securely and never share it with anyone. The ID and Secret can be copied separately or downloaded together.

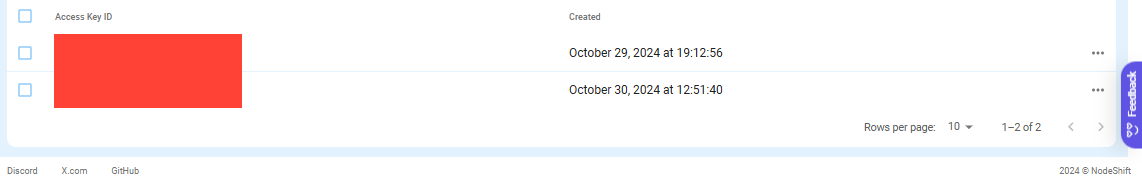

NodeShift allows for multiple access keys to be generated for a single account. Generated keys are displayed in a table, where they can be deleted one by one or in bulk.

Deleting an access key in this table prevents future access to the resources using the ID and the Secret from this key.

- Endpoint: https://eu.storage.nodeshift.com

- Region: Leave it blank or select EU.

- Name: Give it a name, e.g., nodeshift-backup.

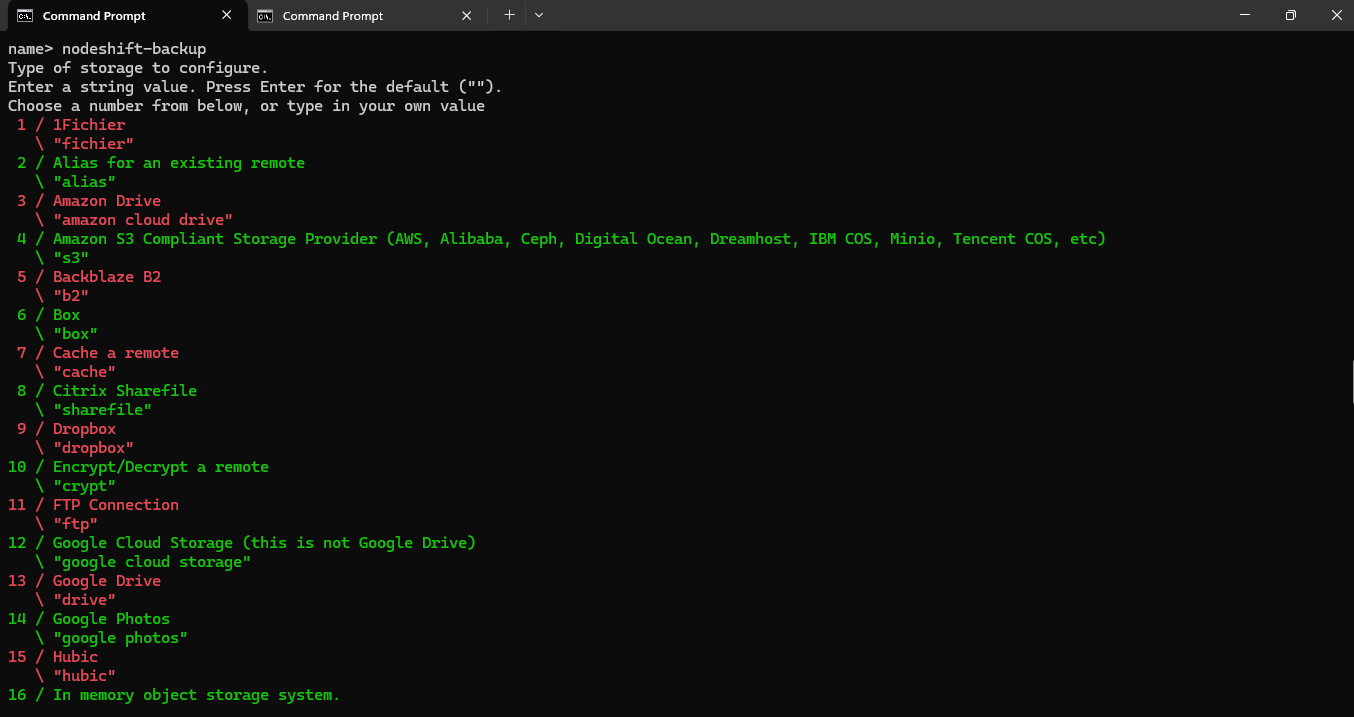

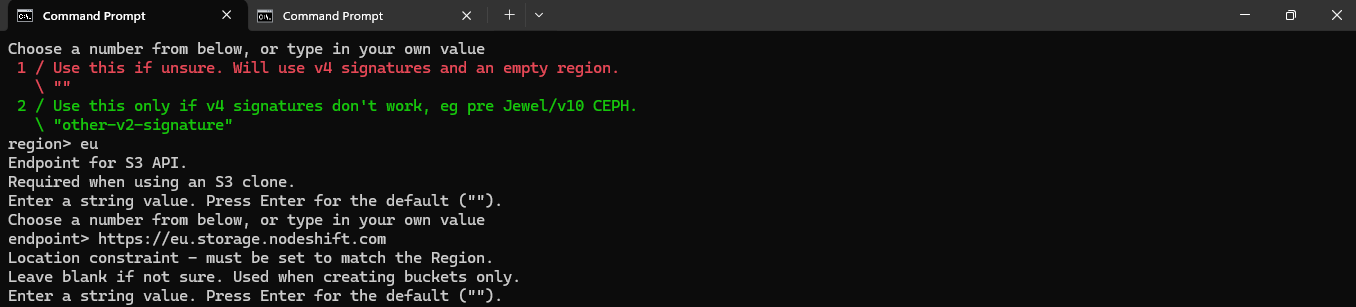

Follow these steps to configure rclone and connect it to your NodeShift S3 storage:

Select n to create a new remote.

n

Name the remote:

Give your remote a name. This could be anything meaningful, e.g., nodeshift-backup.

name> nodeshift-backup

Select Storage Provider:

When prompted to choose a storage type, select S3 by typing s3 (or entering its corresponding number):

Storage> s3

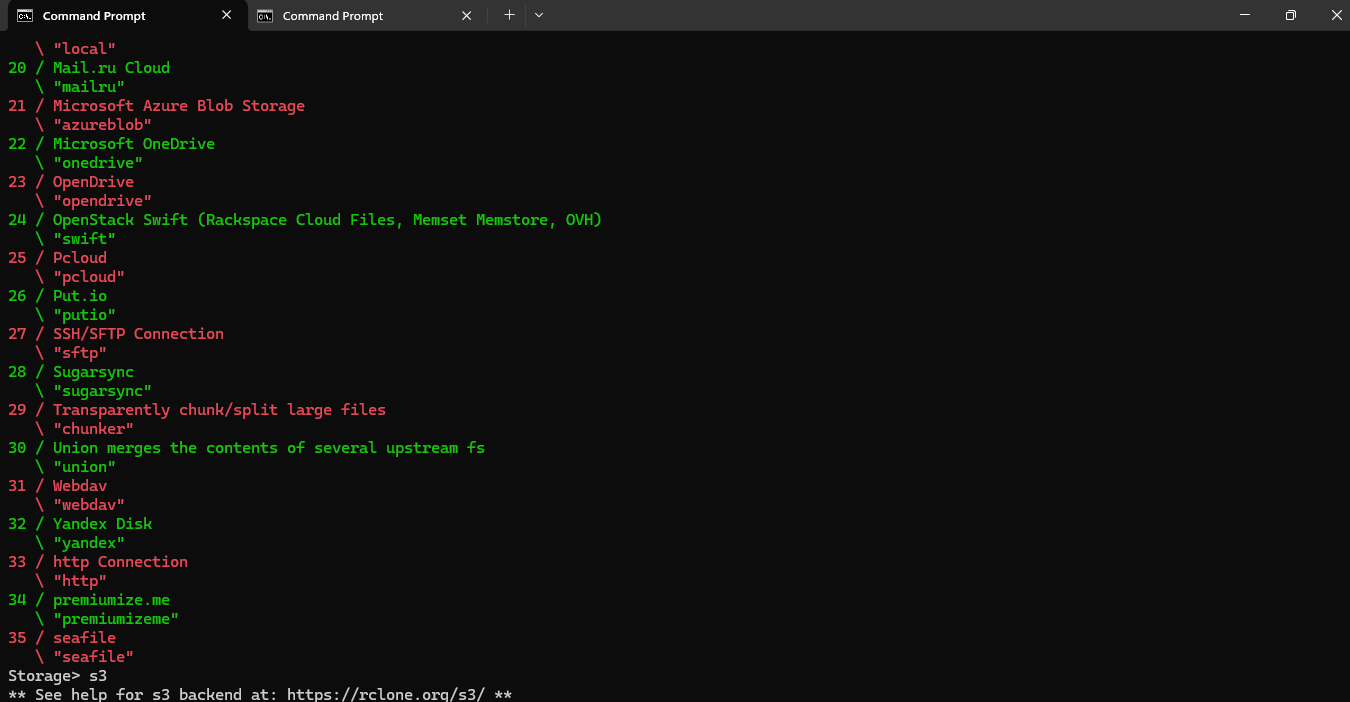

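Since NodeShift's S3 storage is compatible with generic S3 APIs, choose the "Other" option. It is number 13 in the rclone version shown here, but the numbering can differ between rclone versions, so check the list on your screen (you can also type Other instead of the number):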

provider> 13

This will allow rclone to treat NodeShift’s storage as a standard S3-compatible provider. After this, follow the prompts to input the access keys and endpoint URL as explained earlier.

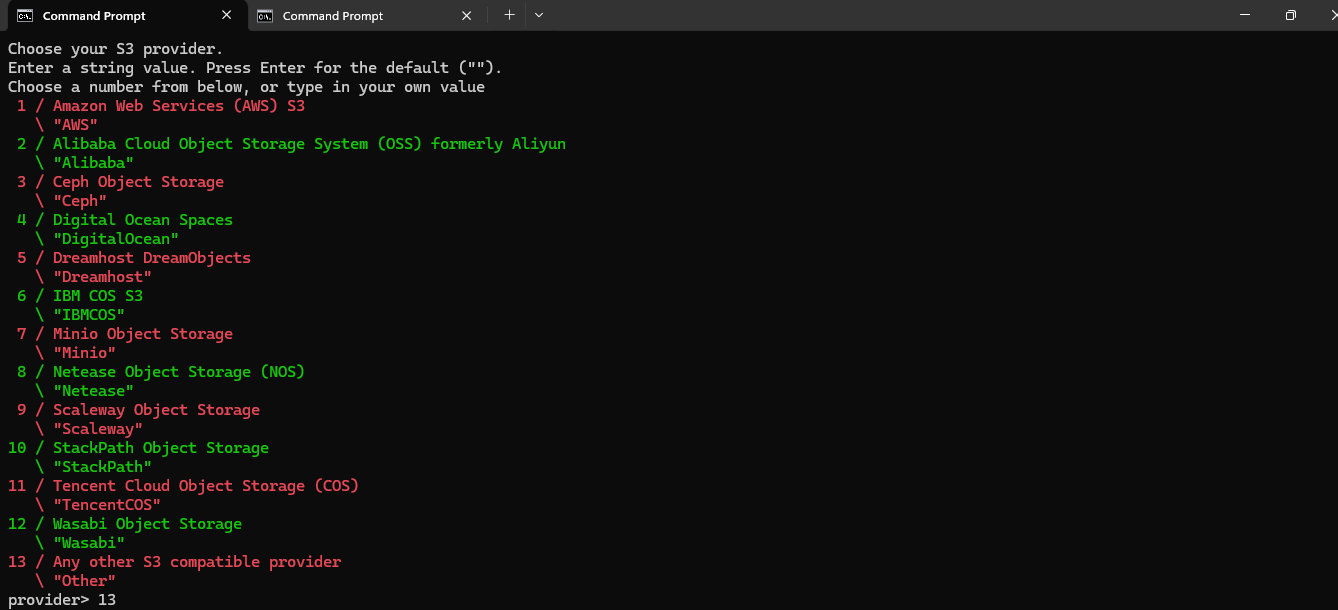

Since you have access keys from the NodeShift platform and will enter them manually, you should choose option 1:

env_auth> 1

This ensures that rclone will prompt you to enter the Access Key and Secret Key directly in the next steps.

After this, proceed as follows:

Access Key

Enter the access key you got from the NodeShift platform:

AWS Access Key ID> xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

Secret Key

Provide the secret key as well:

AWS Secret Access Key> xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

Region

You can leave this blank:

Region>

Endpoint

Provide the EU endpoint for your storage:

Endpoint> https://eu.storage.nodeshift.com

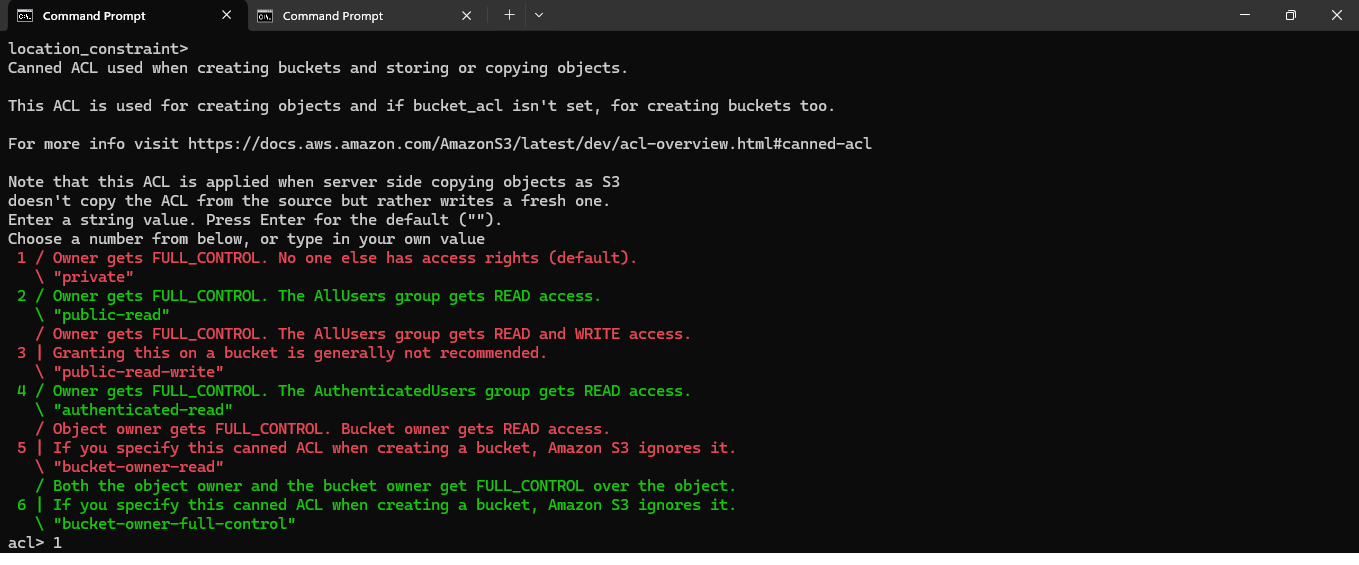

The Canned ACL determines the access permissions for the objects you upload to the S3-compatible storage. Here’s what you should do based on your scenario:

Recommended Option:

Since you are backing up sensitive data from a virtual machine, the safest choice is:

acl> 1

This will set the ACL to private, meaning:

- Owner gets FULL_CONTROL.

- No one else will have access by default.

This ensures that your backups are secure and only accessible with your provided credentials.

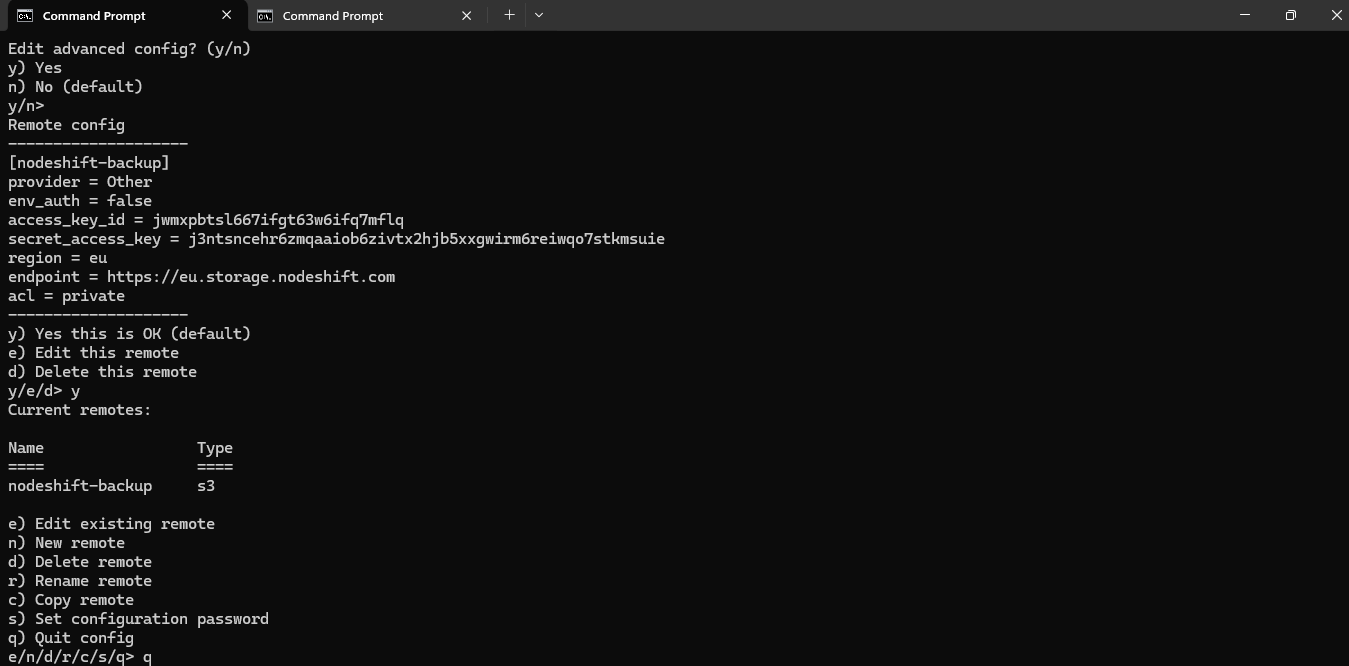

If everything looks correctly configured, confirm the setup by choosing:

y

This will finalize the configuration for the nodeshift-backup remote and save it.

Advanced Configurations

Before showing this summary, rclone asks whether you want to edit the advanced config. You can skip it unless you have specific requirements (just press Enter or type n).

Quit the Setup

After the remote is saved, rclone returns to its main menu. Quit the configuration by typing:

q

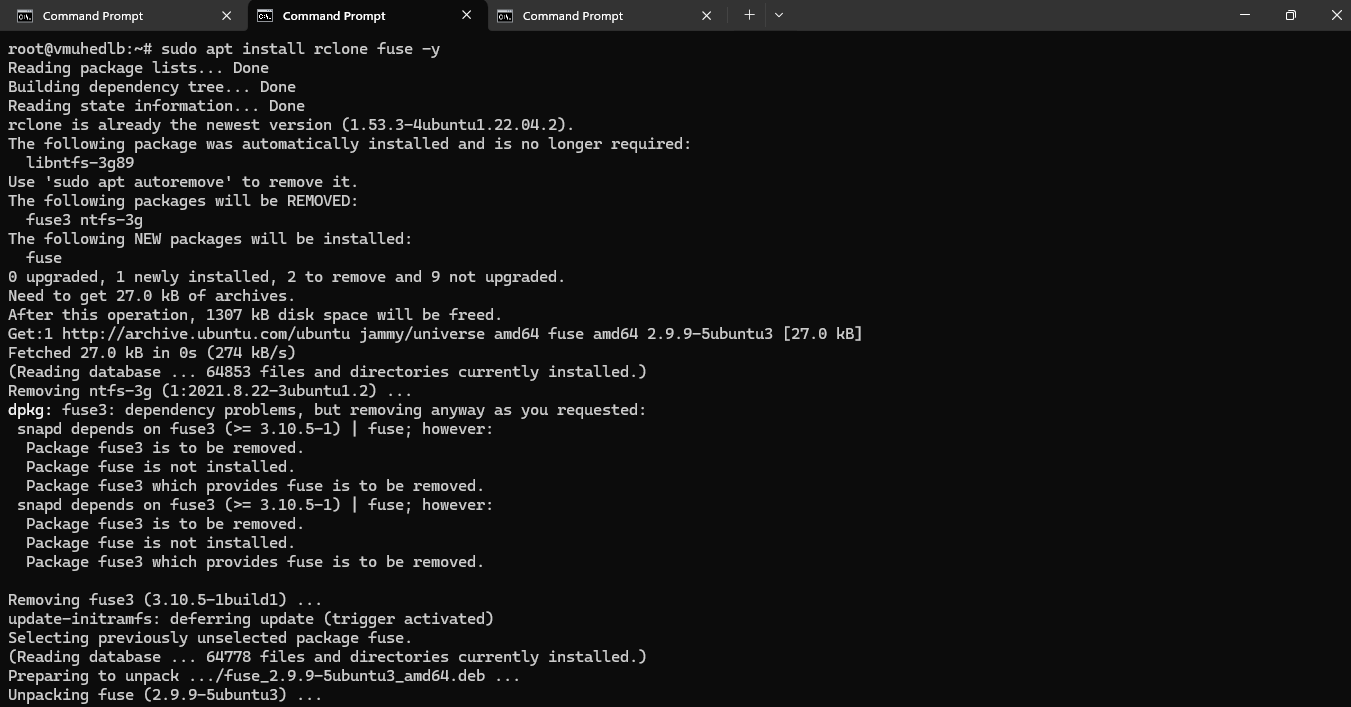
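If you prefer to script the remote setup, rclone can create the same remote non-interactively with `rclone config create`, passing the answers from the walkthrough above as S3 backend options. The sketch below also creates `test-bucket`, the bucket mounted in Step 11, with `rclone mkdir`, and lists the remote's buckets with `rclone lsd` as a quick credentials check. The function name and the `NODESHIFT_ACCESS_KEY`/`NODESHIFT_SECRET_KEY` variables are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the "nodeshift-backup" remote without the
# interactive prompts, make sure the bucket used in Step 11 exists, and
# list the buckets to confirm the credentials and endpoint work.
# NODESHIFT_ACCESS_KEY and NODESHIFT_SECRET_KEY are hypothetical variable
# names holding the key pair from the NodeShift Access Keys page.
setup_nodeshift_remote() {
  if [ -z "${NODESHIFT_ACCESS_KEY:-}" ] || [ -z "${NODESHIFT_SECRET_KEY:-}" ]; then
    echo "Set NODESHIFT_ACCESS_KEY and NODESHIFT_SECRET_KEY first" >&2
    return 1
  fi

  # Same answers as the interactive walkthrough: s3 type, "Other" provider,
  # keys entered directly (env_auth false), EU endpoint, private ACL.
  rclone config create nodeshift-backup s3 \
    provider Other \
    env_auth false \
    access_key_id "$NODESHIFT_ACCESS_KEY" \
    secret_access_key "$NODESHIFT_SECRET_KEY" \
    endpoint https://eu.storage.nodeshift.com \
    acl private || return 1

  # Create the bucket that Step 11 mounts (should be harmless if it exists).
  rclone mkdir nodeshift-backup:test-bucket || return 1

  # List top-level buckets; bad keys or a wrong endpoint fail here.
  rclone lsd nodeshift-backup:
}

# Usage:
#   NODESHIFT_ACCESS_KEY=... NODESHIFT_SECRET_KEY=... setup_nodeshift_remote
```

Note that typing the keys on the command line this way can leave them in your shell history.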

Step 11: Install Rclone FUSE Support

Rclone relies on FUSE to mount the S3 bucket as a local directory. Run the following command to make sure both rclone and the FUSE package are installed:

sudo apt install rclone fuse -y

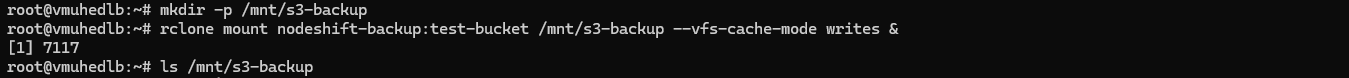

Run the following command to create a local directory on which to mount the S3 bucket:

mkdir -p /mnt/s3-backup

Mount your bucket using rclone (test-bucket in this example; replace it with your own bucket name):

rclone mount nodeshift-backup:test-bucket /mnt/s3-backup --vfs-cache-mode writes &

This command mounts the bucket to /mnt/s3-backup in the background. Verify it by listing the directory:

ls /mnt/s3-backup

If you see an empty directory when running ls /mnt/s3-backup, that’s expected if there are no files or folders in your test-bucket yet.

Here’s what you can do to verify that the rclone mount is working and continue with the Borg backup setup.

Check if the mount is active: Run the following to confirm the mount point is active:

mount | grep s3-backup

The output in the screenshot below confirms that the rclone mount is active and successfully mounted at /mnt/s3-backup. You are now ready to proceed with initializing the Borg repository and creating backups.

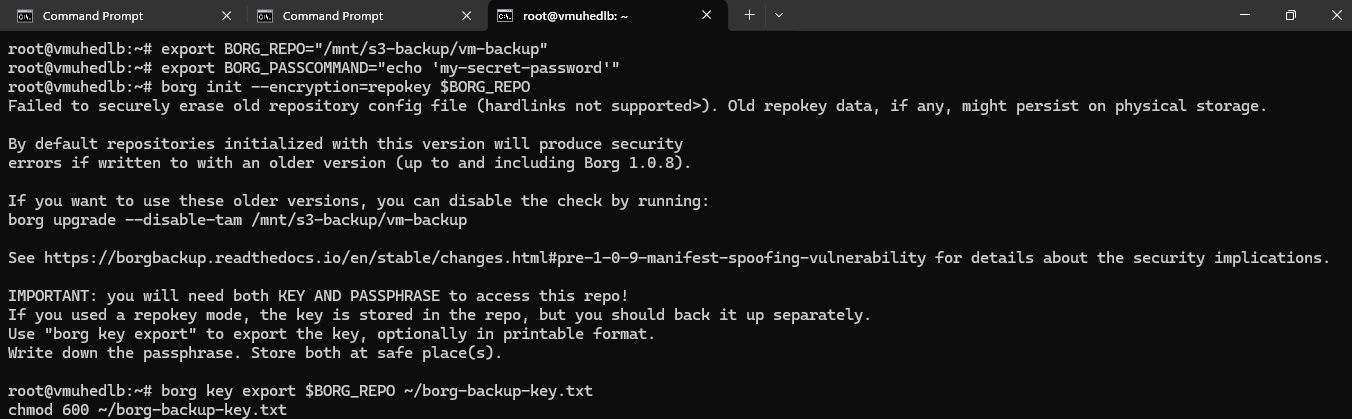
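A mount started with & depends on your shell session, may stop when you log out, and does not survive a reboot, so an unattended backup could later find /mnt/s3-backup unmounted. A minimal sketch of a guard that mounts the bucket only when needed, assuming the nodeshift-backup remote and test-bucket from above; `ensure_s3_mount` is a hypothetical name, `mountpoint` comes from util-linux, and rclone's `--daemon` flag runs the mount in the background without relying on &:

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure the bucket is mounted before Borg uses it.
REMOTE="nodeshift-backup:test-bucket"   # remote:bucket from the steps above
MOUNT_DIR="/mnt/s3-backup"              # mount point created above

ensure_s3_mount() {
  # mountpoint -q succeeds only if MOUNT_DIR is an active mount point.
  if mountpoint -q "$MOUNT_DIR"; then
    return 0
  fi
  mkdir -p "$MOUNT_DIR" || return 1
  # --daemon makes rclone detach into the background by itself,
  # instead of depending on the shell's job control (&).
  rclone mount "$REMOTE" "$MOUNT_DIR" --vfs-cache-mode writes --daemon || return 1
  # Give the FUSE mount a moment to appear, then check again.
  sleep 2
  mountpoint -q "$MOUNT_DIR"
}

# Usage: ensure_s3_mount && ls "$MOUNT_DIR"
```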

Step 12: Initialize the Borg Repository

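Run the following commands to create the backup repository inside the mounted bucket. Ubuntu's borgbackup package provides Borg 1.x, which cannot use an rclone remote directly as a repository, so the repository path points at the rclone mount from Step 11 (the same path the cron job in Step 14 uses):

export BORG_REPO="/mnt/s3-backup/vm-backup"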

export BORG_PASSCOMMAND="echo 'my-secret-password'"

borg init --encryption=repokey $BORG_REPO

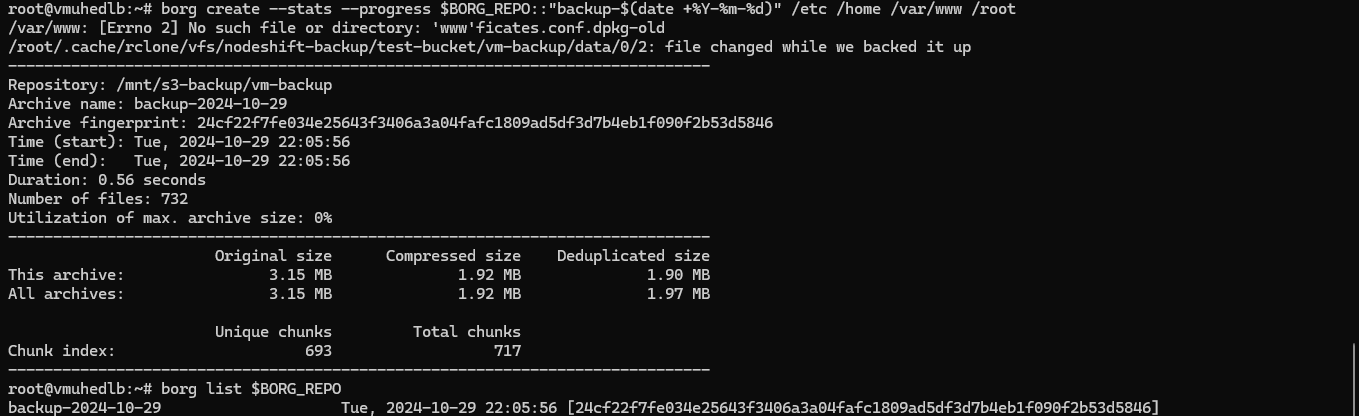
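Two hardening steps are worth considering at this point. First, `echo 'my-secret-password'` keeps the passphrase in your shell history and in every command that repeats it; Borg's documentation describes pointing BORG_PASSCOMMAND at a command such as `cat` on a file only root can read. Second, with `repokey` encryption the key lives inside the repository, so exporting a copy with `borg key export` lets you decrypt your backups even if the repository's copy of the key is damaged. A sketch of both, where the file paths and function names are hypothetical and the passphrase you enter must be the one used with `borg init`:

```shell
#!/usr/bin/env bash
# Hypothetical file locations; adjust to taste.
PASSPHRASE_FILE="/root/.borg-passphrase"
KEY_BACKUP_FILE="/root/borg-key-backup"

# Read the passphrase without echoing it, save it in a root-only file,
# and point Borg at that file. Borg strips the trailing newline from
# BORG_PASSCOMMAND's output.
store_borg_passphrase() {
  local passphrase
  # -s hides the typed passphrase; -r keeps backslashes literal.
  IFS= read -rs -p "Borg passphrase: " passphrase || return 1
  echo >&2
  ( umask 077 && printf '%s\n' "$passphrase" > "$PASSPHRASE_FILE" ) || return 1
  chmod 600 "$PASSPHRASE_FILE" || return 1
  export BORG_PASSCOMMAND="cat $PASSPHRASE_FILE"
}

# Export a copy of the repokey. It is still protected by the passphrase,
# so keep the file (and the passphrase) somewhere other than this VM.
backup_borg_key() {
  borg key export "$BORG_REPO" "$KEY_BACKUP_FILE"
}

# Usage:
#   store_borg_passphrase   # prompts for the passphrase used with borg init
#   backup_borg_key
```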

Step 13: Create Your First Backup and Verify the Repository

Run the following Borg command to back up specific directories of your VM (add or remove paths to suit your needs):

borg create --stats --progress $BORG_REPO::"backup-$(date +%Y-%m-%d)" /etc /home /var/www /root

This command will back up the specified directories with a timestamped archive.
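Because the archive name above contains only the date, running the command a second time on the same day fails: Borg refuses to create an archive whose name already exists. One way around this, sketched below, is Borg's own archive-name placeholders, `{hostname}` and `{now:...}`, which Borg expands itself at backup time; the helper name `backup_now` is hypothetical and assumes BORG_REPO is exported as in Step 12:

```shell
#!/usr/bin/env bash
# Hypothetical helper: same as the borg create command above, but the
# archive name uses Borg's {hostname} and {now:...} placeholders.
# Borg (not the shell) expands them, down to the second, so repeated
# runs on the same day get distinct names.
backup_now() {
  borg create --stats --progress "$BORG_REPO::{hostname}-{now:%Y-%m-%dT%H:%M:%S}" "$@"
}

# Usage: backup_now /etc /home /var/www /root
```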

Then, run the following command to verify the repository:

borg list $BORG_REPO

If the repository is properly initialized, this command lists its archives, including the one you just created (the list is empty if no backups exist yet).

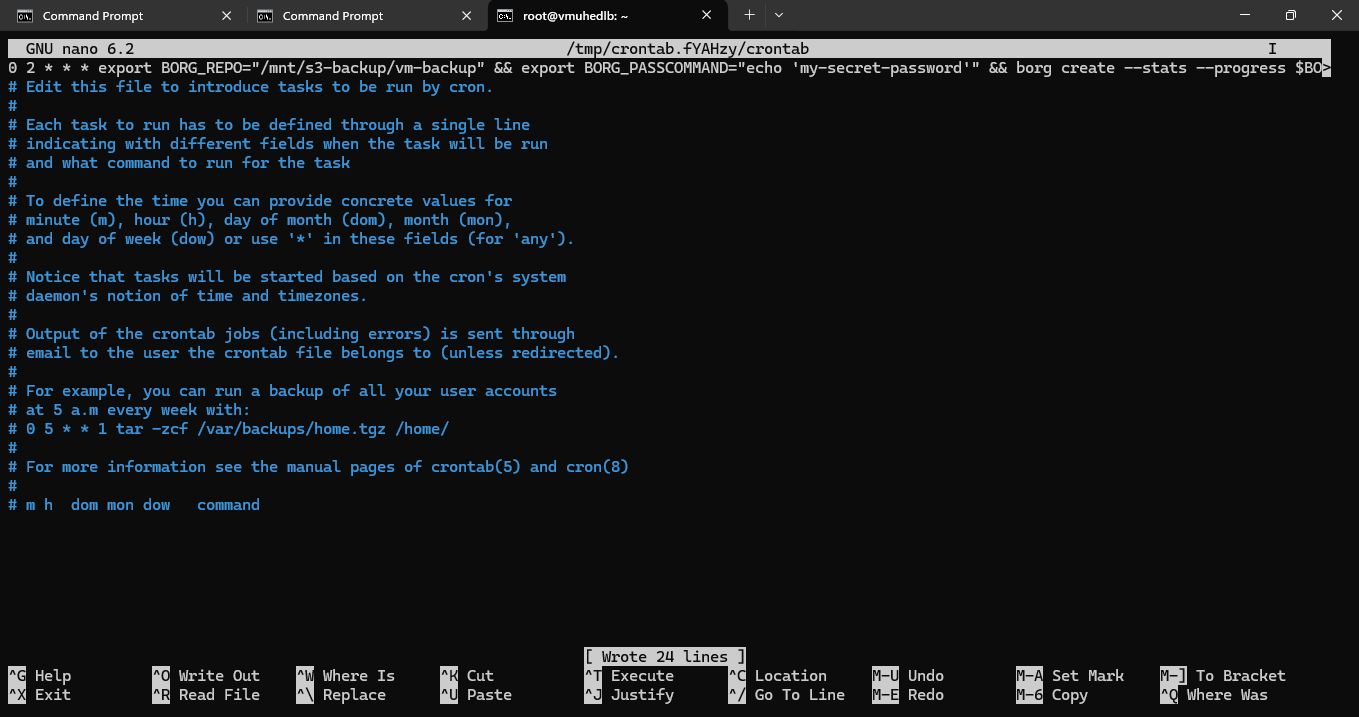
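`borg list` only shows which archives exist. For a deeper check, `borg check` verifies the consistency of the repository and its archives, and `borg info` reports an archive's original, compressed, and deduplicated sizes, which makes the deduplication described in Step 9 visible, especially on later archives. A sketch wrapping both, assuming BORG_REPO and BORG_PASSCOMMAND are still exported from Step 12 (`verify_backup` is a hypothetical name); note that `borg check` reads the repository through the rclone mount and can take a while:

```shell
#!/usr/bin/env bash
# Hypothetical helper: check the repository, then show one archive's stats.
# Assumes BORG_REPO and BORG_PASSCOMMAND are exported as in Step 12.
verify_backup() {
  if [ -z "${1:-}" ]; then
    echo "usage: verify_backup ARCHIVE_NAME" >&2
    return 2
  fi
  # Consistency check of the repository and archive metadata;
  # add --verify-data for a slower check that reads and verifies all data.
  borg check "$BORG_REPO" || return 1
  # Original, compressed and deduplicated sizes of this archive.
  borg info "$BORG_REPO::$1"
}

# Usage, for the archive created earlier today:
#   verify_backup "backup-$(date +%Y-%m-%d)"
```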

Step 14: Automate Backups Using Cron

To automate daily backups at 2:00 AM, edit your cron jobs:

crontab -e

The first time you run this command, crontab asks you to select an editor. Choose 1 to select Nano, the easiest option if you are not familiar with vim or ed.

How to Use Nano for Crontab:

- After selecting 1 (Nano), you’ll enter the crontab editor.

- Add your BorgBackup cron job in the editor:

0 2 * * * export BORG_REPO="/mnt/s3-backup/vm-backup" && export BORG_PASSCOMMAND="echo 'my-secret-password'" && borg create --stats $BORG_REPO::"backup-$(date +\%Y-\%m-\%d)" /etc /home /root

- Keep the whole job on one line, since cron does not support line continuations. The % signs are escaped as \% because cron otherwise treats % as a newline, and --progress is left out because there is no terminal to display it under cron.

- Save and exit Nano: press Ctrl + O, then Enter to save, and press Ctrl + X to exit.

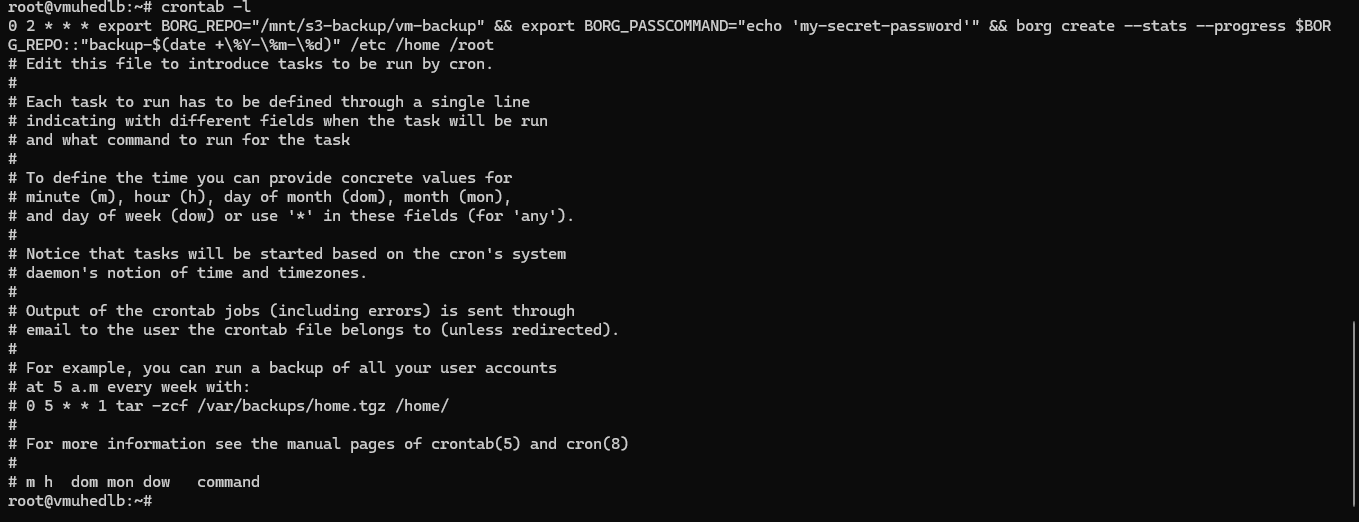

Step 15: Verify the Cron Job

Run the following command to verify the cron job:

crontab -l

The cron job is now correctly set up!

- Cron Job: This job will run every day at 2:00 AM, backing up /etc, /home, and /root to your Borg repository stored on NodeShift’s S3-compatible bucket.
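The one-line cron job above assumes the rclone mount from Step 11 is still active at 2:00 AM, which is not the case after a reboot, and it keeps the passphrase inline. A sketch of a small wrapper script that remounts the bucket when needed, logs each run, and reports Borg's exit code; the script path, the passphrase file `/root/.borg-passphrase`, and the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch of a backup script for cron, e.g. /usr/local/bin/borg-backup.sh
# (hypothetical path). Assumes the rclone remote, bucket and mount point from
# this guide and a root-only passphrase file (hypothetical path below).
export BORG_REPO="/mnt/s3-backup/vm-backup"
export BORG_PASSCOMMAND="cat /root/.borg-passphrase"
MOUNT_DIR="/mnt/s3-backup"
REMOTE="nodeshift-backup:test-bucket"

log() { printf '%s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$*"; }

run_backup() {
  # Re-create the rclone mount if it is missing, e.g. after a reboot.
  if ! mountpoint -q "$MOUNT_DIR"; then
    log "mounting $REMOTE on $MOUNT_DIR"
    mkdir -p "$MOUNT_DIR" || return 1
    rclone mount "$REMOTE" "$MOUNT_DIR" --vfs-cache-mode writes --daemon || return 1
    sleep 2
    mountpoint -q "$MOUNT_DIR" || { log "mount failed"; return 1; }
  fi

  log "starting backup"
  local rc=0
  # No --progress: there is no terminal under cron. Inside a script, the
  # % signs in the date format need no crontab-style escaping.
  borg create --stats "::backup-$(date +%Y-%m-%d)" /etc /home /root || rc=$?
  # Borg 1.x exit codes: 0 = success, 1 = warning (e.g. a file changed
  # while being read), 2 or higher = error.
  if [ "$rc" -ge 2 ]; then
    log "backup FAILED (exit code $rc)"
  else
    log "backup finished (exit code $rc)"
  fi
  return "$rc"
}

# Run only when executed under its installed name, so the functions can
# also be sourced (for example, to test them) without starting a backup.
if [ "$(basename -- "$0")" = "borg-backup.sh" ]; then
  run_backup
fi
```

To use it, save it as /usr/local/bin/borg-backup.sh, make it executable with `chmod 700 /usr/local/bin/borg-backup.sh`, store your passphrase in the root-only file it reads, and replace the crontab entry with a single call such as `0 2 * * * /usr/local/bin/borg-backup.sh >> /var/log/borg-backup.log 2>&1` (log path hypothetical). The log file then records when each run started and how it ended.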

Conclusion

In this guide, we walked through backing up a NodeShift Compute VM to S3-compatible object storage with Borg, Rclone, and Cron for reliable data protection, with step-by-step instructions ranging from installing the required tools and configuring an rclone remote to scheduling backups with a cron job.

For more information about NodeShift: