How to Deploy Apple's DCLM-7B in the Cloud

Apple has made a notable contribution to open-source AI by releasing DCLM-7B, a 7-billion-parameter language model.

DCLM-Baseline-7B is a 7-billion-parameter language model trained on the DCLM-Baseline dataset, which was curated as part of the DataComp for Language Models (DCLM) benchmark. The model is designed to show how systematic data curation techniques can improve language model performance.

Model Details

| Size | Training Tokens | Layers | Hidden Size | Attention Heads | Context Length (tokens) |
|---|---|---|---|---|---|
| 7B | 2.5T | 32 | 4096 | 32 | 2048 |

Key points of DCLM:

- Model Specifications: The 7B base model is trained on 2.5 trillion tokens of primarily English data and has a 2048-token context window.

- Training Data: Combines datasets from DCLM-BASELINE, StarCoder, and ProofPile2.

- Performance: The model achieves an MMLU score of 0.6372, positioning it above Mistral but below Llama3 in performance.

- License: Released under an open license, specifically the Apple Sample Code License.

- Comparison: Matches the performance of closed-dataset models like Mistral.

- Training Framework: Developed using PyTorch and the OpenLM framework.

- Availability: The model is accessible on Hugging Face and integrated within Transformers.
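To make the 2048-token context window concrete: the prompt and the generated tokens must fit into it together. Below is a minimal Python sketch, assuming you already have the prompt as a list of token IDs (plain integers stand in for real tokenizer output here). The helper names `max_prompt_tokens` and `truncate_prompt` are hypothetical and are not part of Transformers or OpenLM.

```python
# Sketch: keep prompt + generated tokens within DCLM-7B's 2048-token context window.
# Assumption: token IDs are plain ints standing in for real tokenizer output.
from typing import List

CONTEXT_LENGTH = 2048  # DCLM-7B context window, in tokens (from the table above)


def max_prompt_tokens(max_new_tokens: int, context_length: int = CONTEXT_LENGTH) -> int:
    """Return how many prompt tokens fit alongside max_new_tokens generated tokens."""
    if not 0 <= max_new_tokens < context_length:
        raise ValueError("max_new_tokens must be in [0, context_length)")
    return context_length - max_new_tokens


def truncate_prompt(token_ids: List[int], max_new_tokens: int,
                    context_length: int = CONTEXT_LENGTH) -> List[int]:
    """Keep only the most recent prompt tokens so generation still fits in the window."""
    budget = max_prompt_tokens(max_new_tokens, context_length)  # always >= 1
    return token_ids[-budget:] if len(token_ids) > budget else token_ids


if __name__ == "__main__":
    fake_prompt = list(range(3000))  # pretend tokenizer output, 3000 tokens long
    kept = truncate_prompt(fake_prompt, max_new_tokens=50)
    print(len(kept), kept[0])  # prints: 1998 1002
```

Dropping the oldest tokens is just one simple policy; for instruction-style prompts you may prefer to keep the instructions and trim the middle instead.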

Additional Insights:

- Data Curation: The project documents its data curation process in detail, offering insights into effective LLM training.

- Training Framework: Utilizes the DataComp-LM framework, focusing on improving language models through dataset experiments.

- Benchmark: The DCLM-Baseline training data is curated from Common Crawl as part of the DCLM benchmark, with the goal of improving model performance through better data.

Step-by-Step Process to Deploy Apple/DCLM-7B in the Cloud

For the purpose of this tutorial, we will use a GPU-powered Virtual Machine offered by NodeShift; however, you can replicate the same steps with any other cloud provider of your choice.

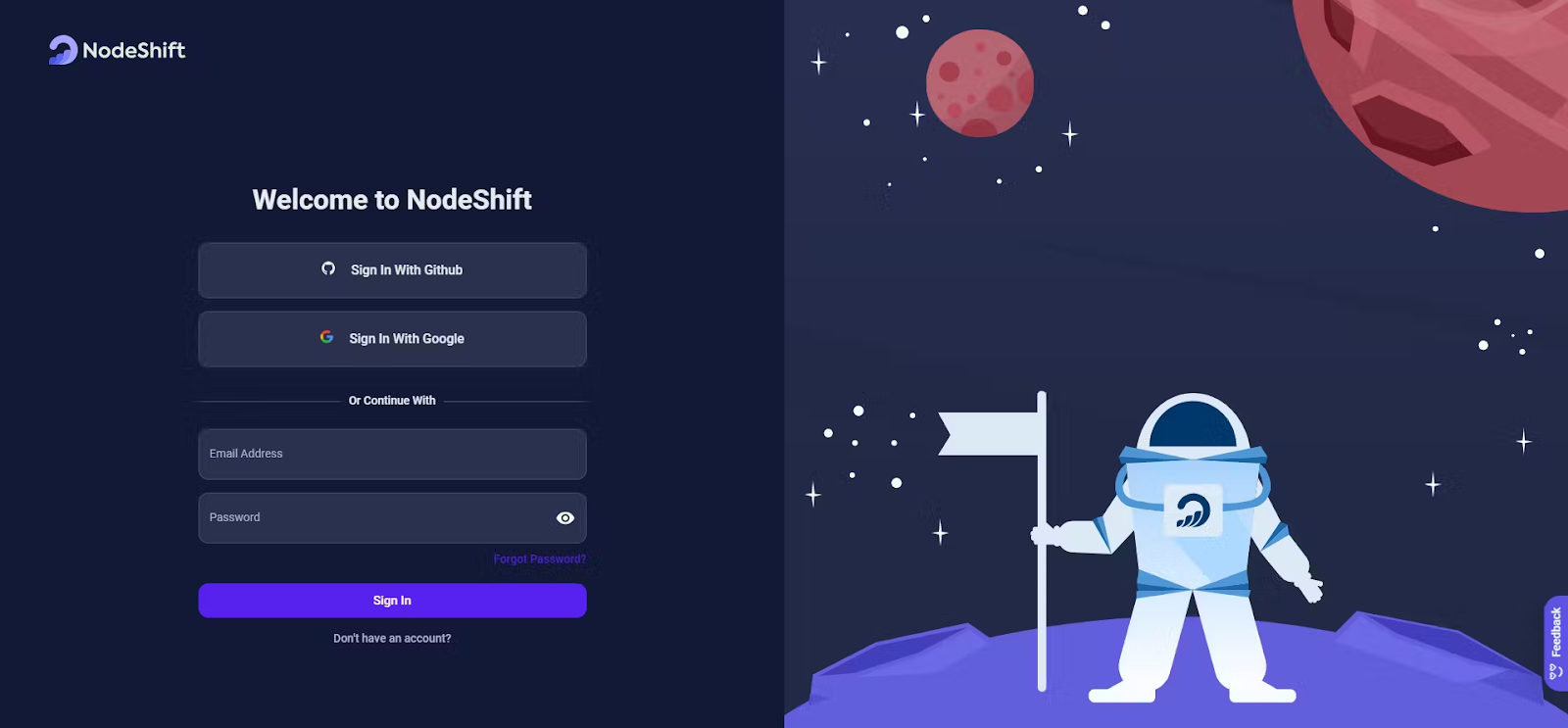

Step 1: Sign Up and Set Up a NodeShift Cloud Account

Visit the NodeShift Cloud website (https://app.nodeshift.com/) and create an account. Once you've signed up, log into your account.

Follow the account setup process and provide the necessary details and information.

Step 2: Create a GPU Virtual Machine

NodeShift offers flexible, scalable, on-demand GPU Virtual Machines (VMs) equipped with a range of GPUs, from A100s to H100s. These GPU-powered VMs let you adjust the GPU, CPU, RAM, and storage configuration to your specific requirements.

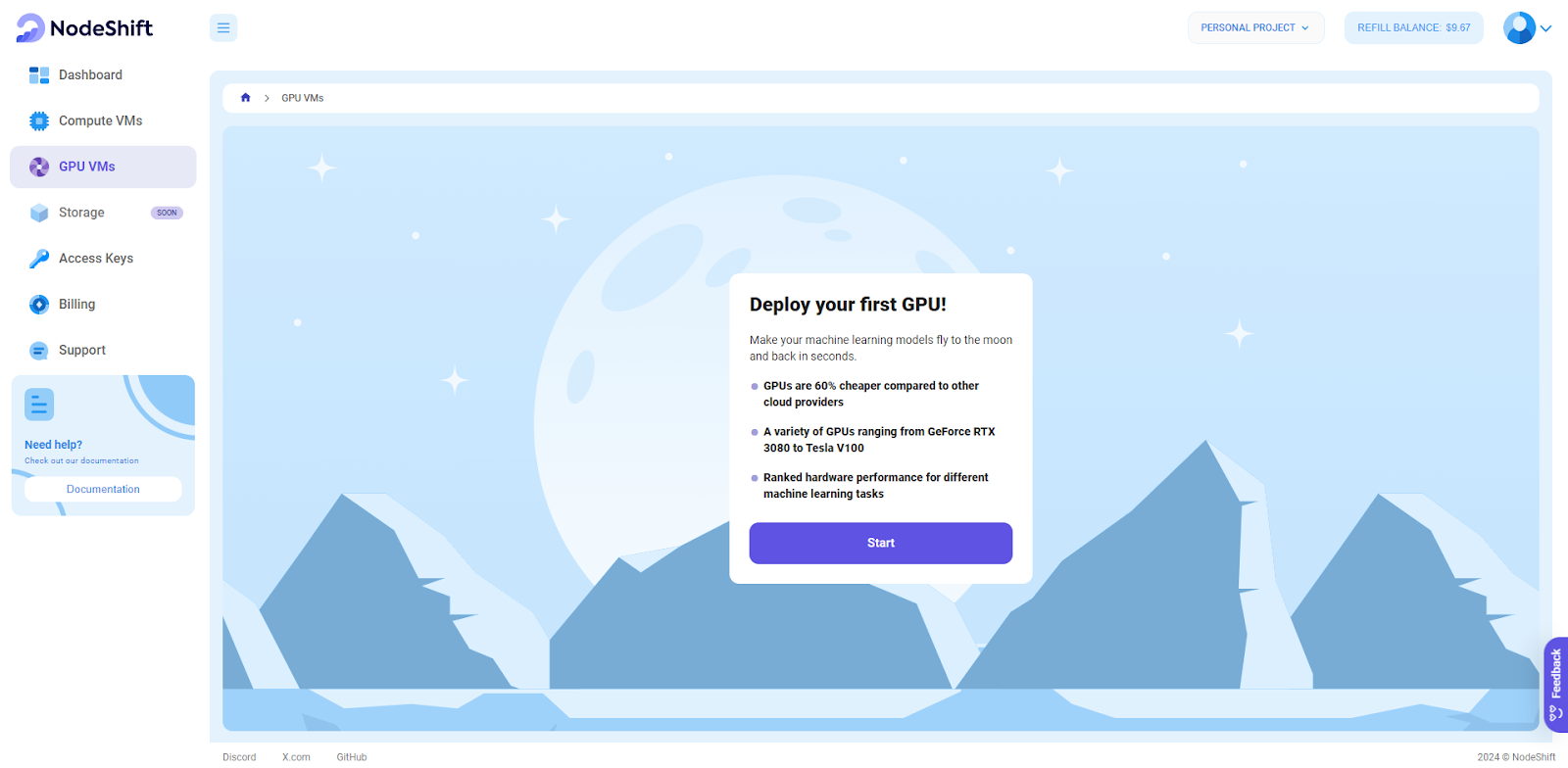

In the menu on the left side, select the GPU VMs option, then click the Create GPU VM button in the Dashboard to create your first deployment.

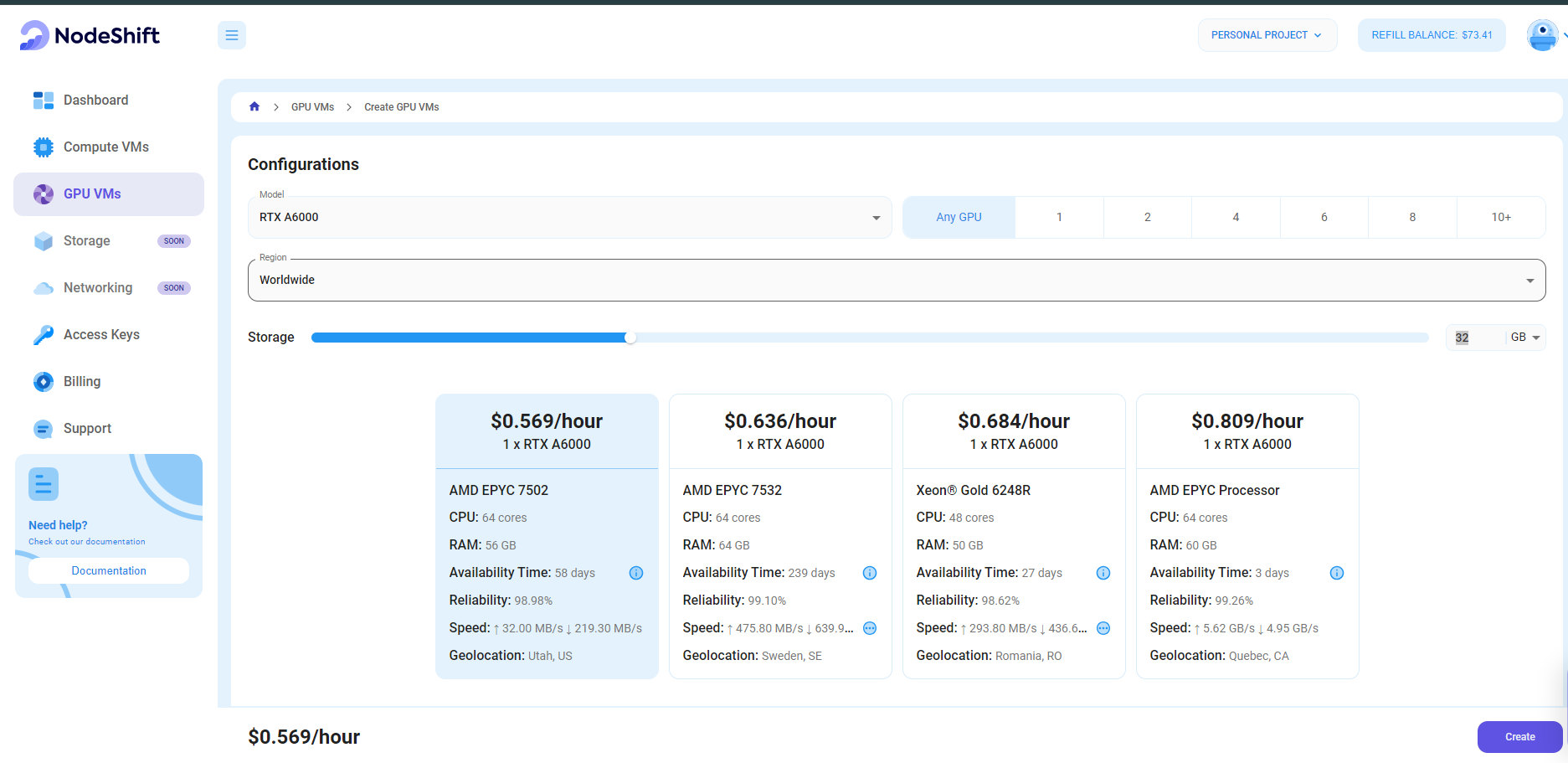

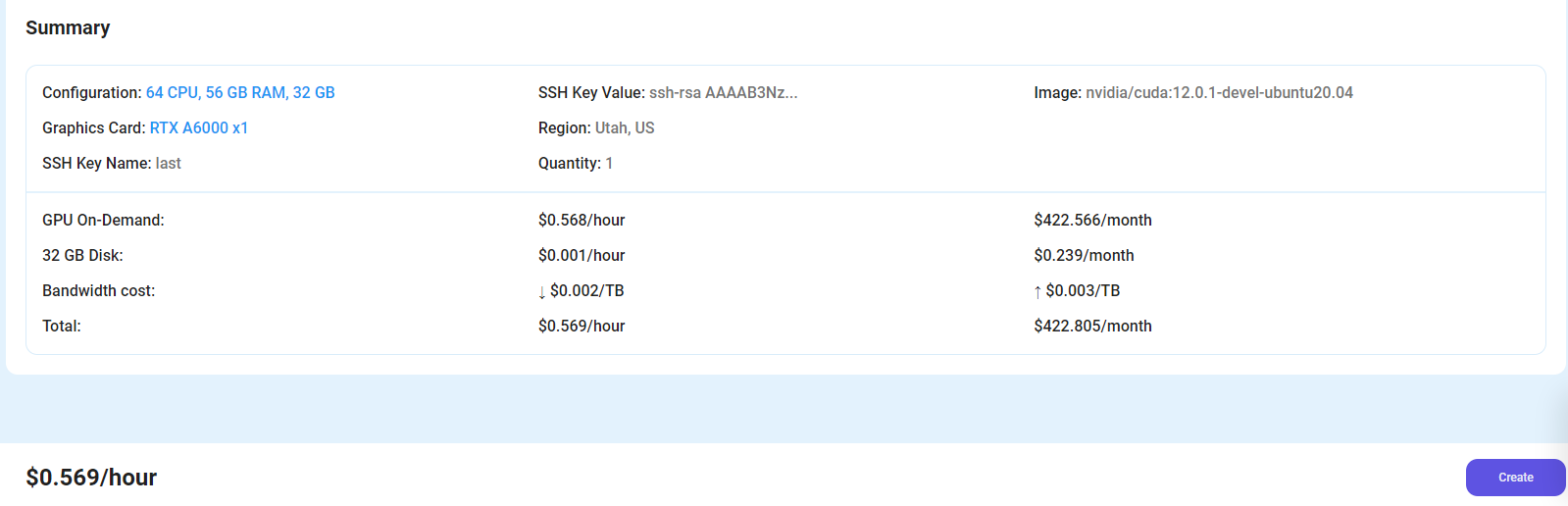

Step 3: Select a Model, Region, and Storage

In the "GPU VMs" tab, select a GPU Model and Storage according to your needs and the geographical region where you want to launch your model.

For the purpose of this tutorial, we are using 1x NVIDIA RTX A6000 to deploy Apple/DCLM-7B. Next, select the amount of storage (you will need at least 70 GB of storage for Apple's DCLM-7B).
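As a rough sanity check on the GPU choice, the memory needed just for the weights is the parameter count times the bytes per parameter. The sketch below assumes about 7 billion parameters (the "7B" in the name) and counts 1 GB as 10^9 bytes. It ignores activations, the KV cache, and framework overhead, so treat the result as a lower bound rather than an exact requirement.

```python
# Back-of-the-envelope estimate of GPU memory for model weights only.
# Assumptions: ~7e9 parameters and 1 GB = 1e9 bytes.
# Activations, KV cache, and framework overhead are NOT included.

BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate size of the weights in GB for a given parameter dtype."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    a6000_gb = 48  # NVIDIA RTX A6000 memory
    for dtype in ("float32", "bfloat16"):
        need = weight_memory_gb(7e9, dtype)
        print(f"{dtype}: ~{need:.0f} GB of weights, fits in A6000: {need < a6000_gb}")
    # prints:
    # float32: ~28 GB of weights, fits in A6000: True
    # bfloat16: ~14 GB of weights, fits in A6000: True
```

Even full 32-bit weights leave headroom on a 48 GB card, which is why a single A6000 is a reasonable choice for this model.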

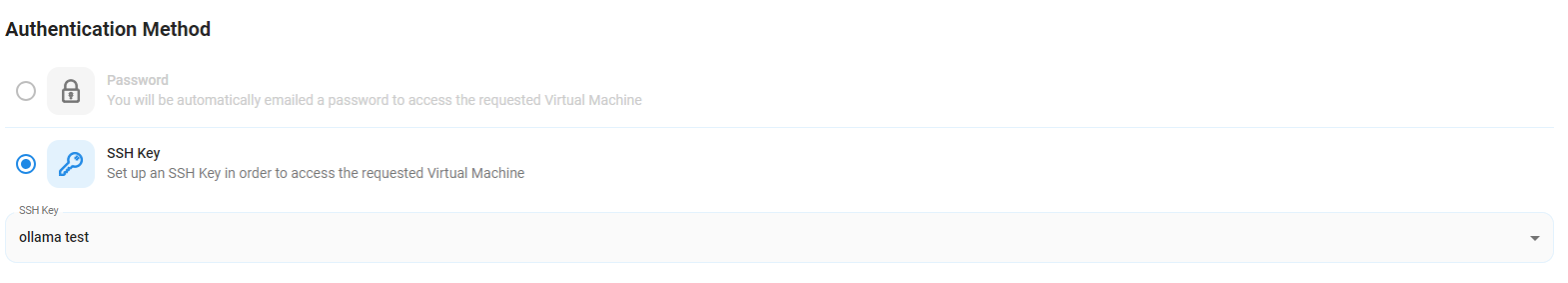

Step 4: Select Authentication Method

There are two authentication methods available: Password and SSH Key. SSH keys are the more secure option; to create them, see our official documentation: (https://docs.nodeshift.com/gpus/create-gpu-deployment)

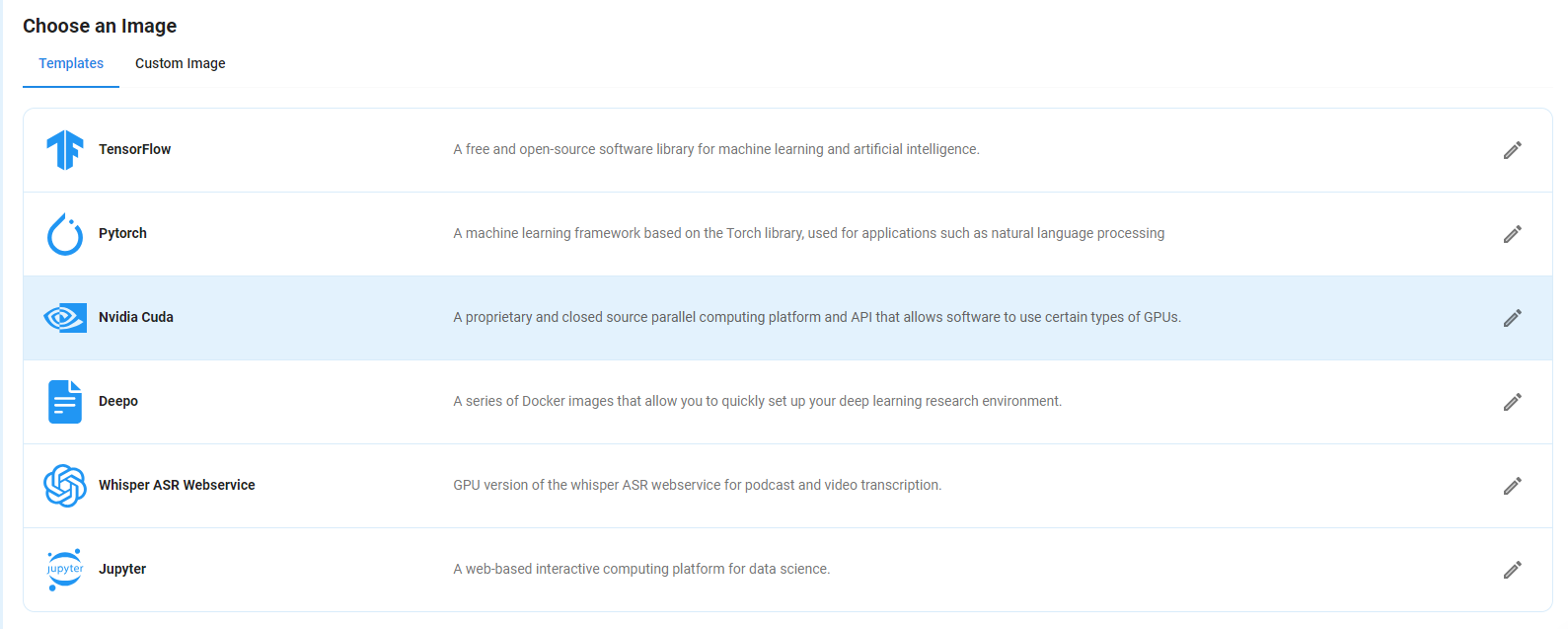

Step 5: Choose an Image

Next, you will need to choose an image for your VM. We will deploy Apple/DCLM-7B on an NVIDIA CUDA image. CUDA is NVIDIA's proprietary, closed-source parallel computing platform, and it provides the GPU support needed to run Apple/DCLM-7B on your GPU VM.

After choosing the image, click the ‘Create’ button, and your VM will be deployed.

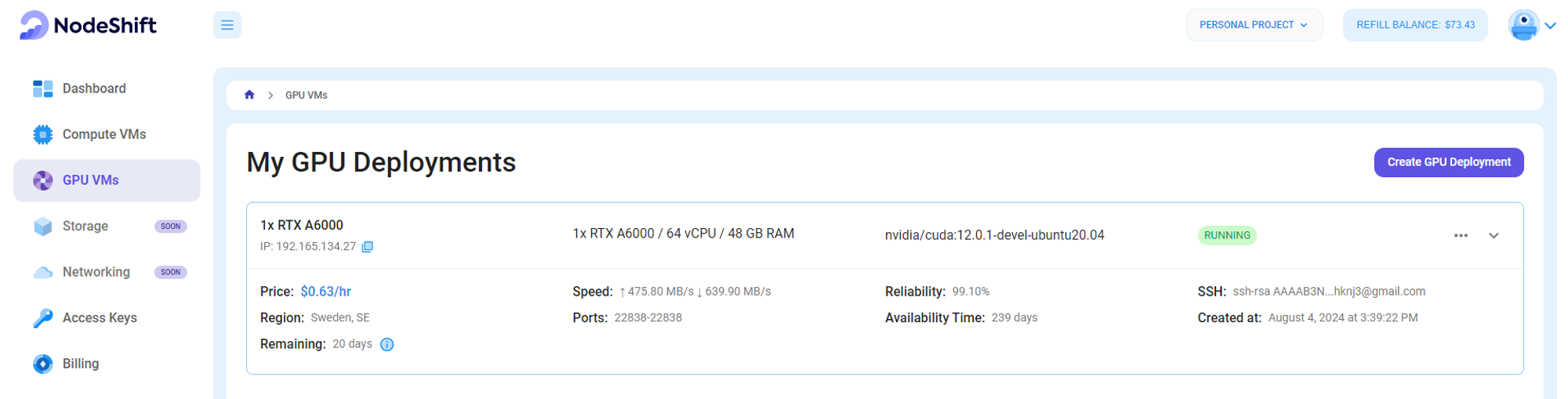

Step 6: Virtual Machine Successfully Deployed

You will get visual confirmation that your machine is up and running.

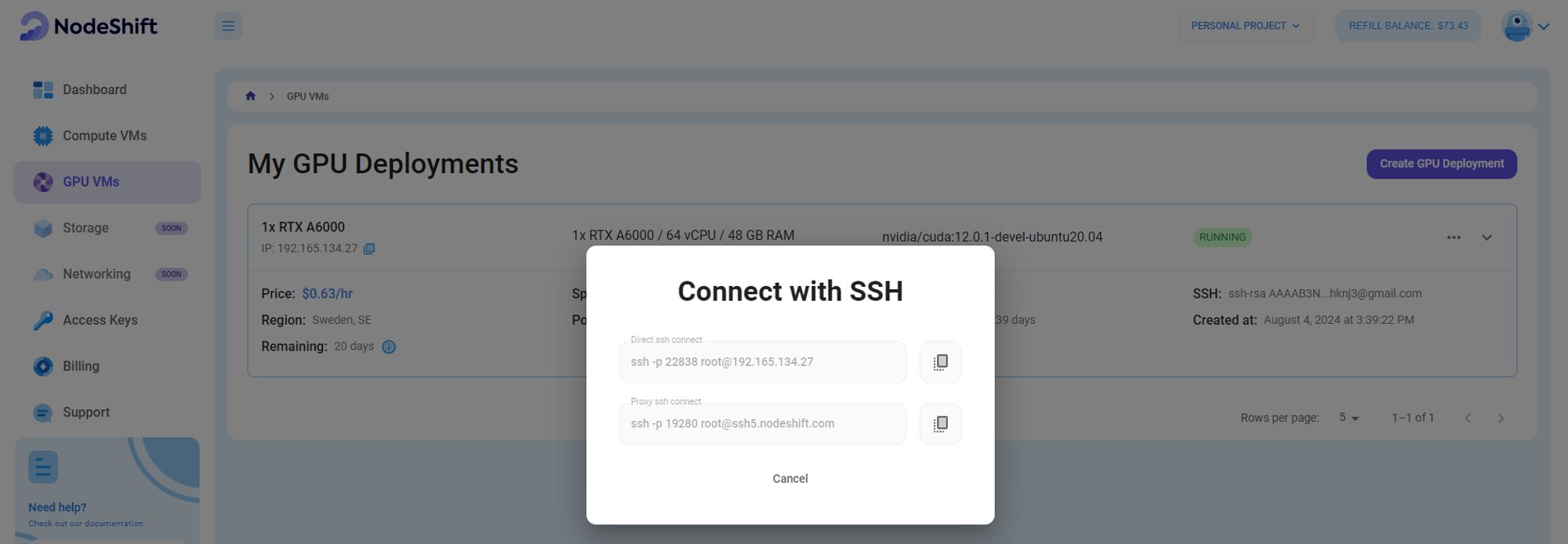

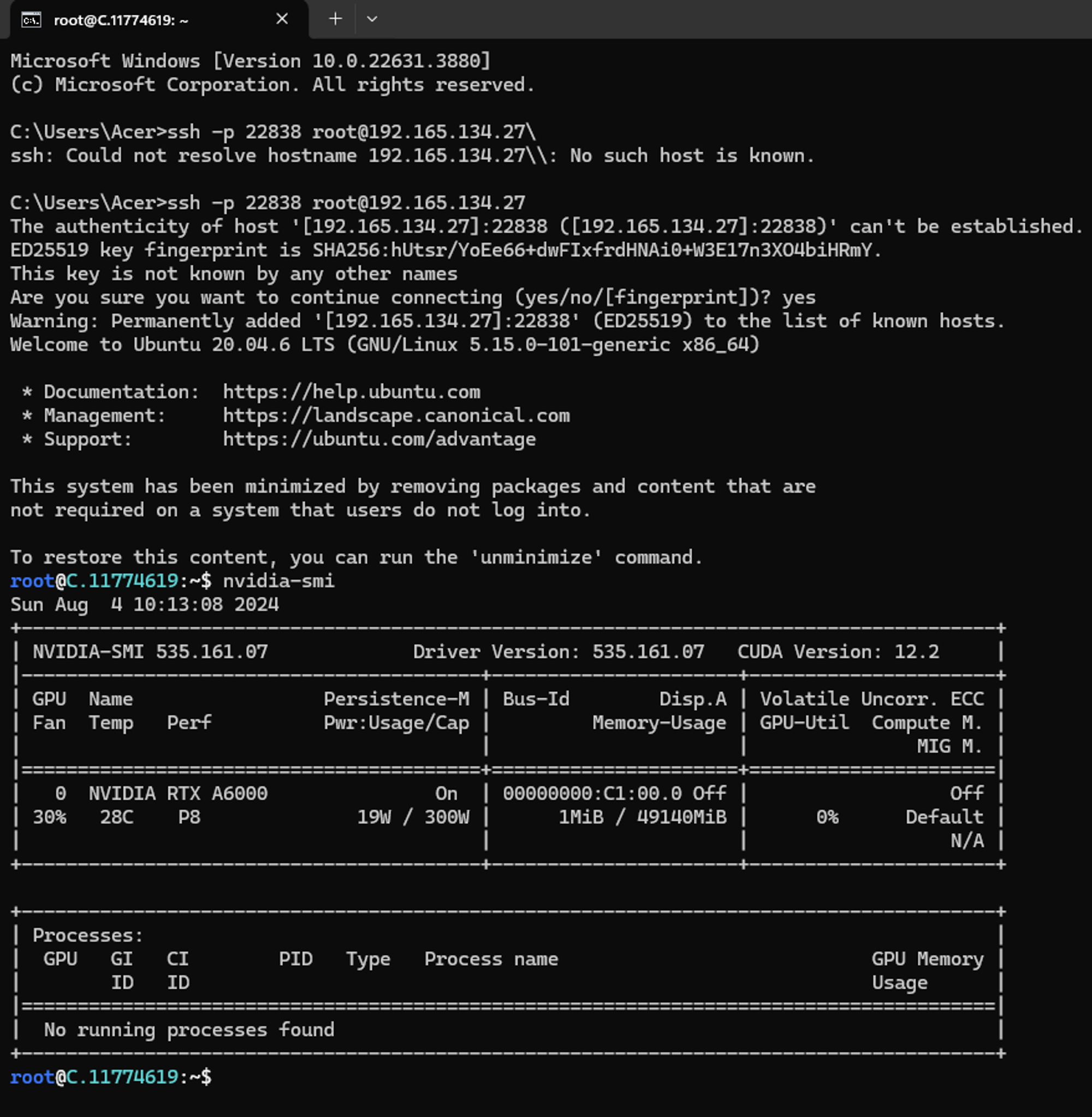

Step 7: Connect to GPUs using SSH

NodeShift GPUs can be connected to and controlled through a terminal using the SSH key provided during GPU creation.

Once your GPU VM deployment is successfully created and has reached the 'RUNNING' status, you can navigate to the page of your GPU Deployment Instance. Then, click the 'Connect' button in the top right corner.

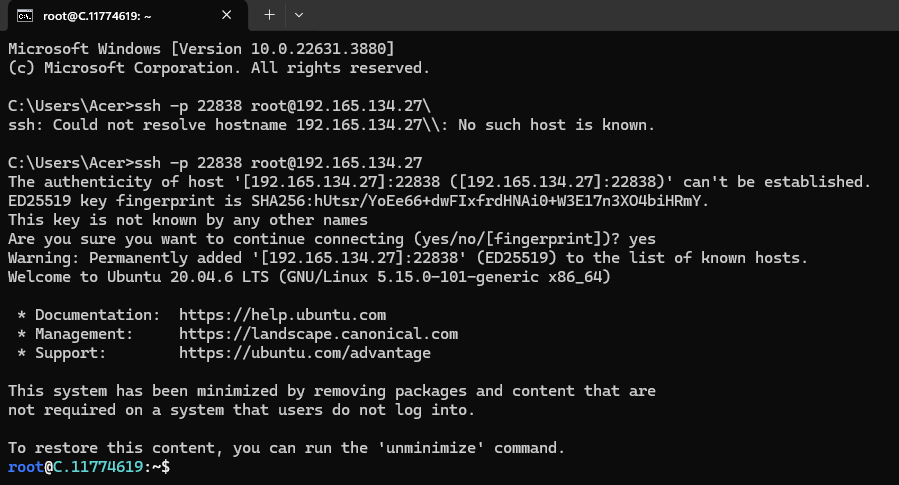

Now open your terminal and paste the proxy SSH IP (the SSH connection command shown in the Connect dialog) to connect to the VM.

Next, if you want to check the GPU details, run the following command: nvidia-smi

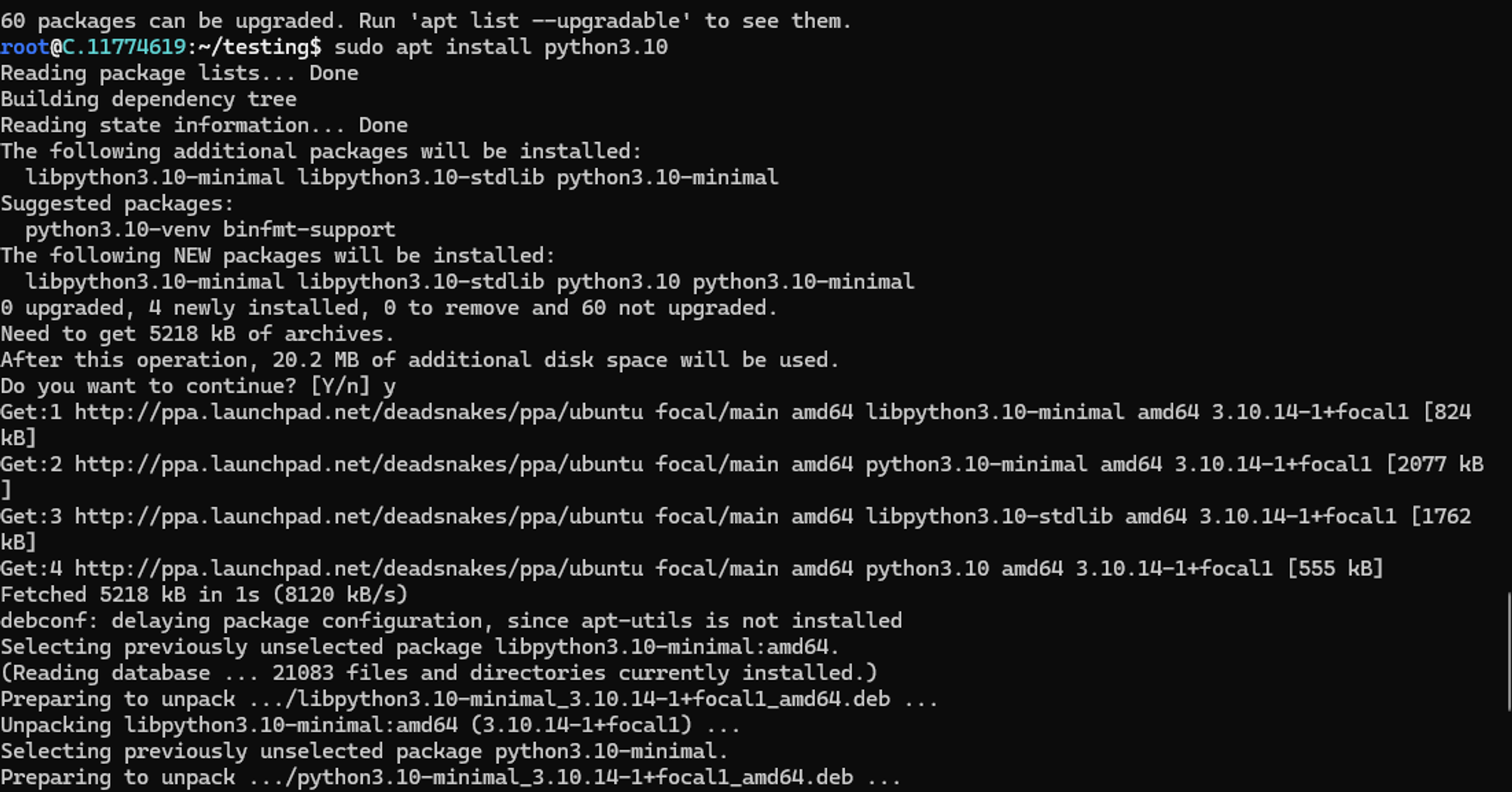

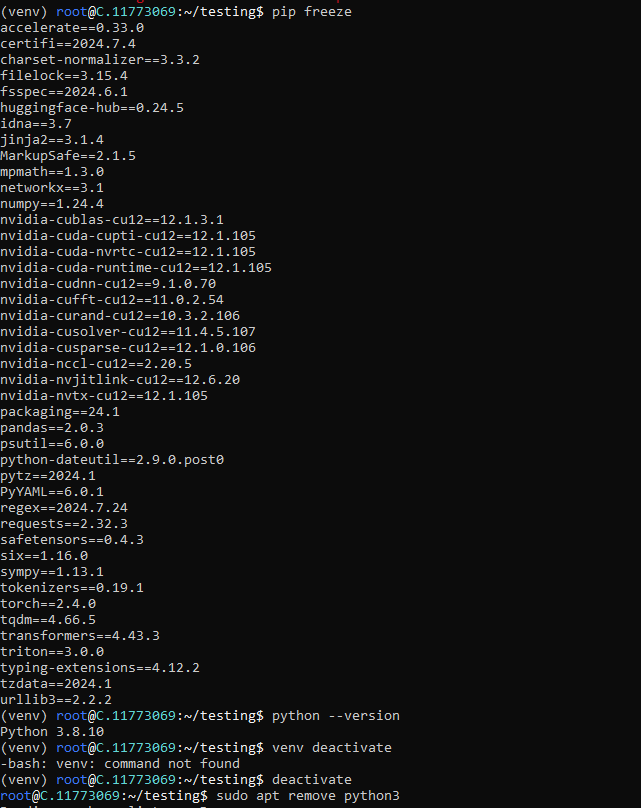
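If you prefer to check the GPU from Python (for example, later inside the notebook), a sketch like the following calls nvidia-smi in its CSV query mode and parses the name and total memory of each GPU. It assumes nvidia-smi is on the PATH, as it normally is on the NVIDIA CUDA image; `parse_gpu_csv` and `query_gpus` are hypothetical helper names.

```python
# Sketch: read GPU name and total memory from nvidia-smi's CSV query mode.
# Assumes nvidia-smi is on PATH; with "nounits", memory.total is a plain number in MiB.
import subprocess
from typing import List, Tuple


def parse_gpu_csv(text: str) -> List[Tuple[str, int]]:
    """Parse lines of the form 'GPU name, total memory in MiB'."""
    gpus = []
    for line in text.strip().splitlines():
        name, mem_mib = line.rsplit(",", 1)  # split on the last comma only
        gpus.append((name.strip(), int(mem_mib)))
    return gpus


def query_gpus() -> List[Tuple[str, int]]:
    """Run nvidia-smi and return (GPU name, total memory in MiB) for each GPU."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__":
    try:
        for name, mem in query_gpus():
            print(f"{name}: {mem} MiB")
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        print(f"nvidia-smi not available: {err}")
```

Keeping the parsing separate from the subprocess call makes it easy to test without a GPU.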

Step 8: Install Python & Python Packages

After completing the above steps, it's time to set up Python and install the required Python packages.

Run the commands below to install Python and the Python packages.

sudo apt install python3.10

pip install pandas

pip install transformers

pip install accelerate

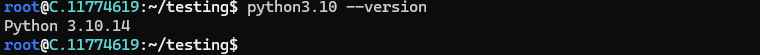

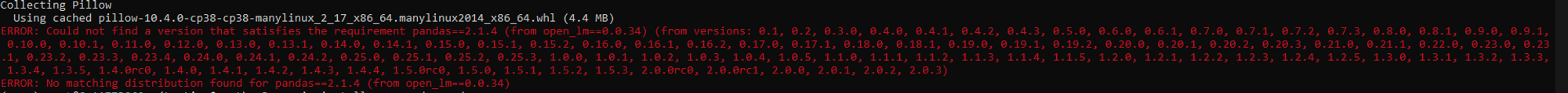

Note: Install a recent version of Python. Current releases of the packages used for Apple/DCLM-7B, such as pandas and PyTorch, require a recent Python version, and installing them on an older Python version will fail with an error.

Check the screenshots below for errors.

Note: After all these steps, check the versions of the installed Python packages, including pandas, to confirm that no errors occur.

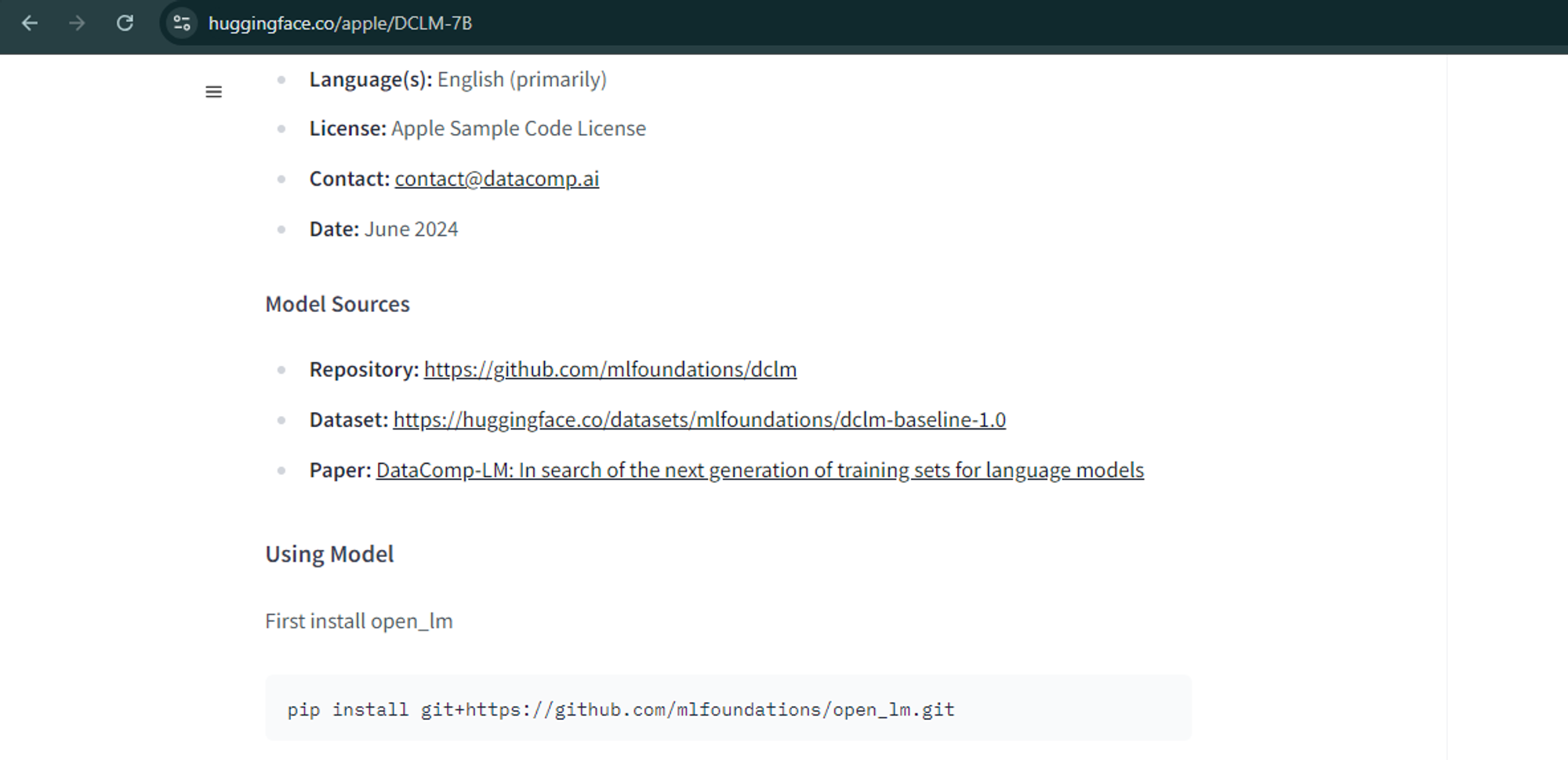

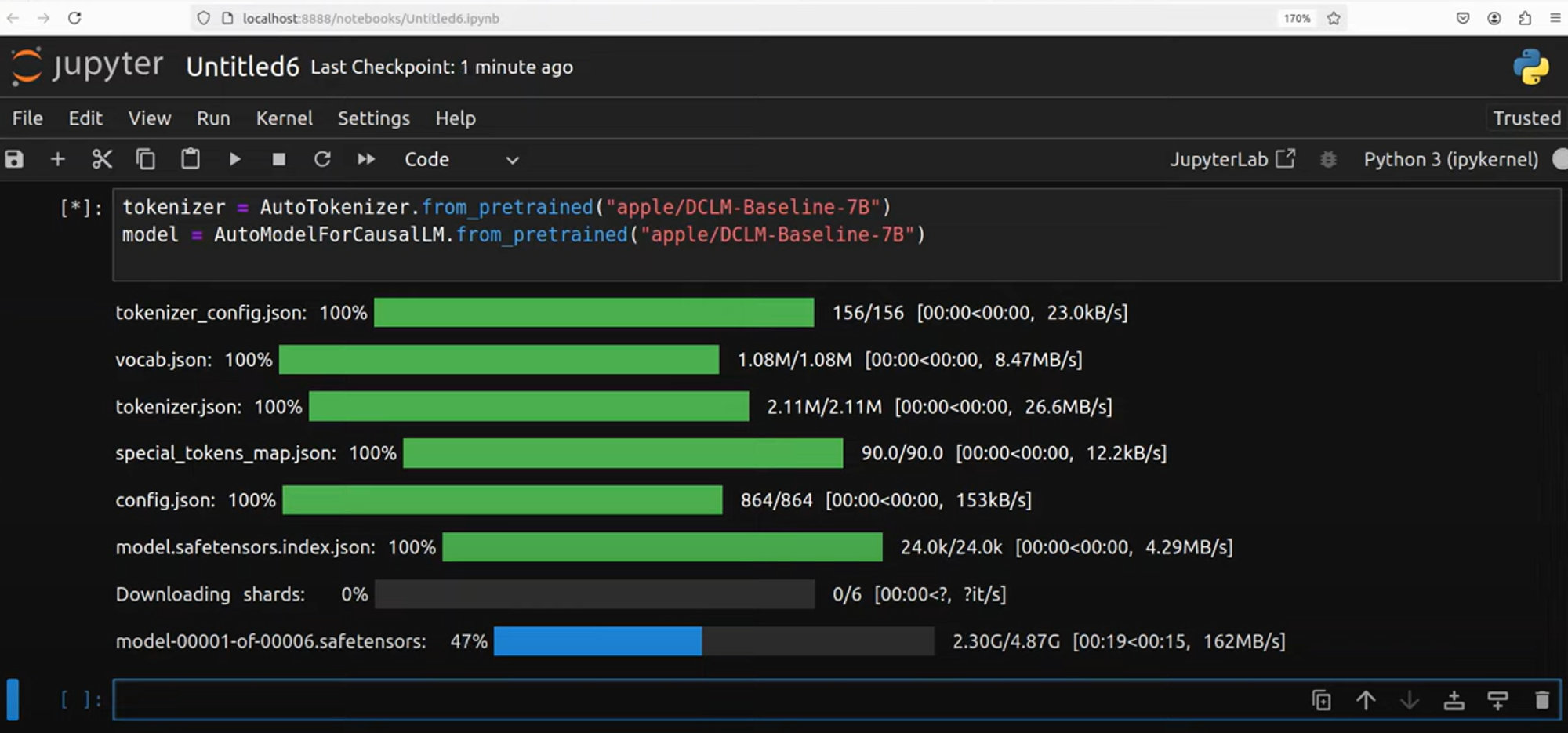
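For the version check suggested in the note above, here is a minimal Python sketch built on the standard-library `importlib.metadata` module. The package list mirrors the pip commands in this step plus torch (PyTorch); the 3.10 minimum matches the apt command above and is an assumption of this sketch, not an official requirement of DCLM-7B.

```python
# Sketch: report the Python version and installed versions of this tutorial's packages.
# Assumption: Python 3.10 (as installed above) is treated as the minimum.
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional, Tuple

PACKAGES = ("pandas", "transformers", "accelerate", "torch")


def meets_min_version(current: Tuple[int, ...], minimum: Tuple[int, ...]) -> bool:
    """Compare version tuples element by element, e.g. (3, 10, 12) >= (3, 10)."""
    return tuple(current) >= tuple(minimum)


def package_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each package name to its installed version, or None if it is missing."""
    report = {}
    for name in names:
        try:
            report[name] = version(name)
        except PackageNotFoundError:
            report[name] = None
    return report


if __name__ == "__main__":
    ok = meets_min_version(tuple(sys.version_info[:3]), (3, 10))
    print("Python", sys.version.split()[0], "OK" if ok else "is older than 3.10")
    for name, ver in package_versions(PACKAGES).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```

Any package reported as NOT INSTALLED should be installed with pip before moving on to the next step.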

Step 9: Install Apple/DCLM-7B Model

Now, it is time to get the model from Hugging Face. The model page is at: https://huggingface.co/apple/DCLM-7B

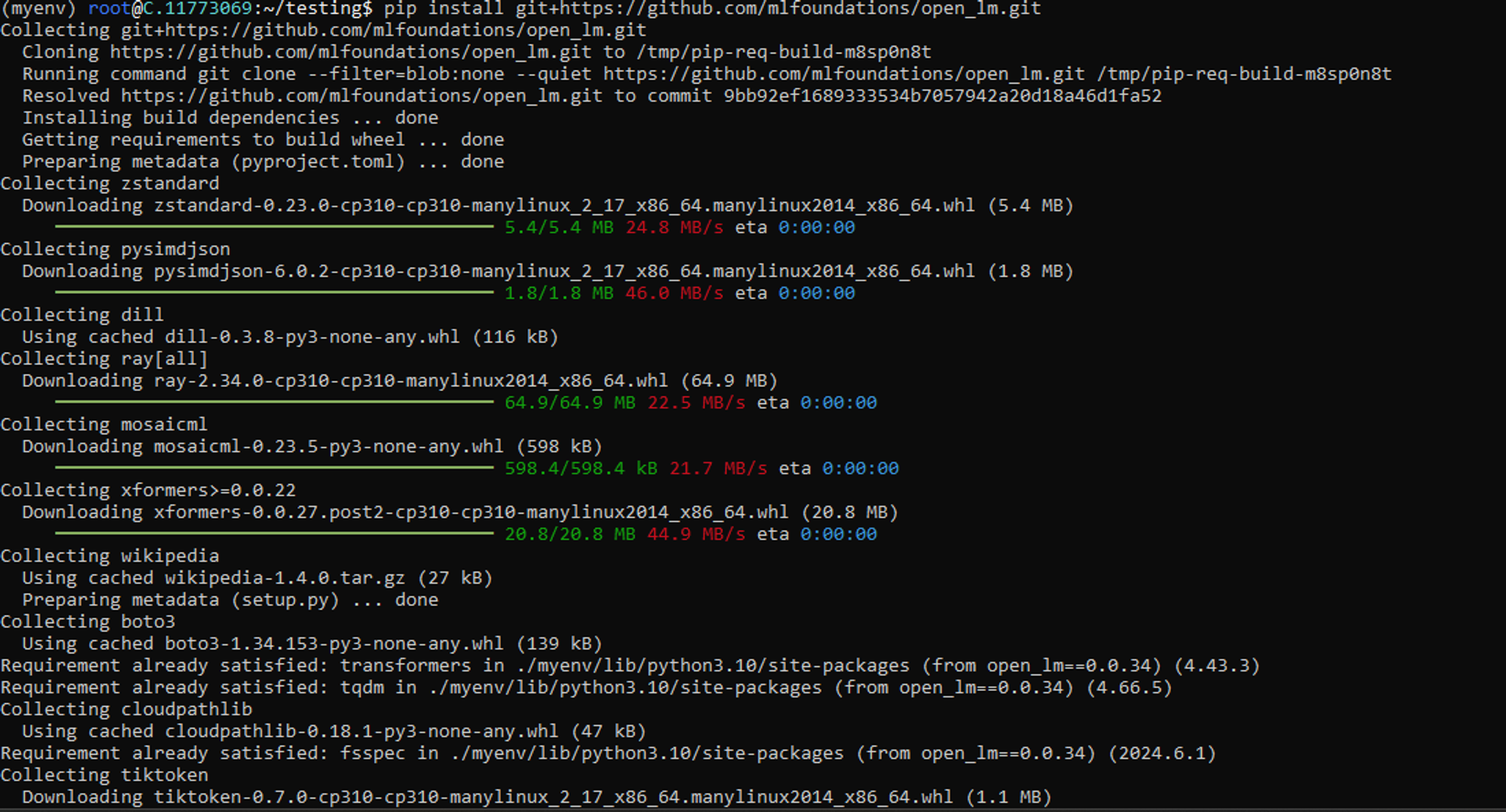

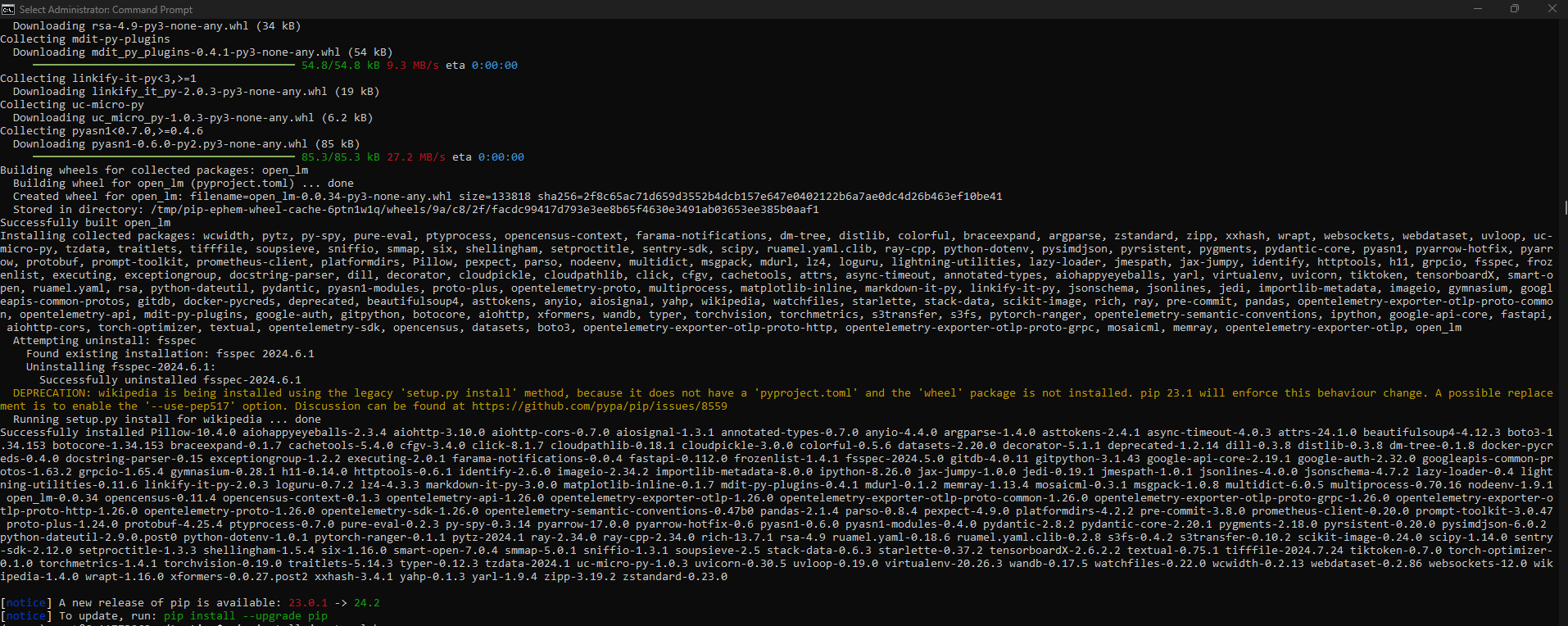
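Loading the model through Transformers downloads the weights automatically on first use, but you can also pre-fetch them. The sketch below first checks free disk space against the 70 GB minimum from Step 3, then calls `snapshot_download` from the `huggingface_hub` package, which Transformers depends on. It assumes the default Hugging Face cache under your home directory (no `HF_HOME` override) and counts 1 GB as 10^9 bytes; `free_space_gb` and `download_model` are hypothetical helper names.

```python
# Sketch: check free disk space, then pre-fetch the DCLM-7B weights from Hugging Face.
# Assumptions: the repo id matches the link above, files go to the default Hugging Face
# cache under the home directory (HF_HOME not overridden), and 1 GB = 1e9 bytes.
import os
import shutil

REPO_ID = "apple/DCLM-7B"
REQUIRED_GB = 70  # minimum storage from Step 3


def free_space_gb(path: str) -> float:
    """Free disk space on the filesystem containing `path`, in GB."""
    return shutil.disk_usage(path).free / 1e9


def download_model(check_path: str = os.path.expanduser("~"),
                   required_gb: float = REQUIRED_GB) -> str:
    """Download the model snapshot if enough space is free; return its local path."""
    free = free_space_gb(check_path)
    if free < required_gb:
        raise RuntimeError(f"only {free:.1f} GB free, need about {required_gb} GB")
    # Imported lazily so the space check works even before huggingface_hub is installed.
    from huggingface_hub import snapshot_download
    return snapshot_download(repo_id=REPO_ID)
```

Call `download_model()` once on the VM; it returns the local path of the downloaded snapshot, and later `from_pretrained` calls reuse the same cache.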

Next, run the following command in the terminal to install OpenLM, which the model card lists as a prerequisite for loading DCLM-7B with Transformers:

pip install git+https://github.com/mlfoundations/open_lm.git

Once the command finishes, the installation is complete.

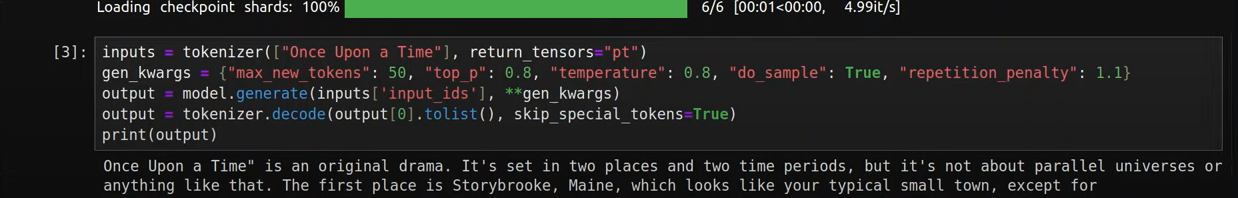

Step 10: Run Apple/DCLM-7B Model

We have two options for running the DCLM-7B model: JupyterLab or the terminal.

For JupyterLab, we first need to install it; for the terminal, we can run the example script from the model's Hugging Face page.

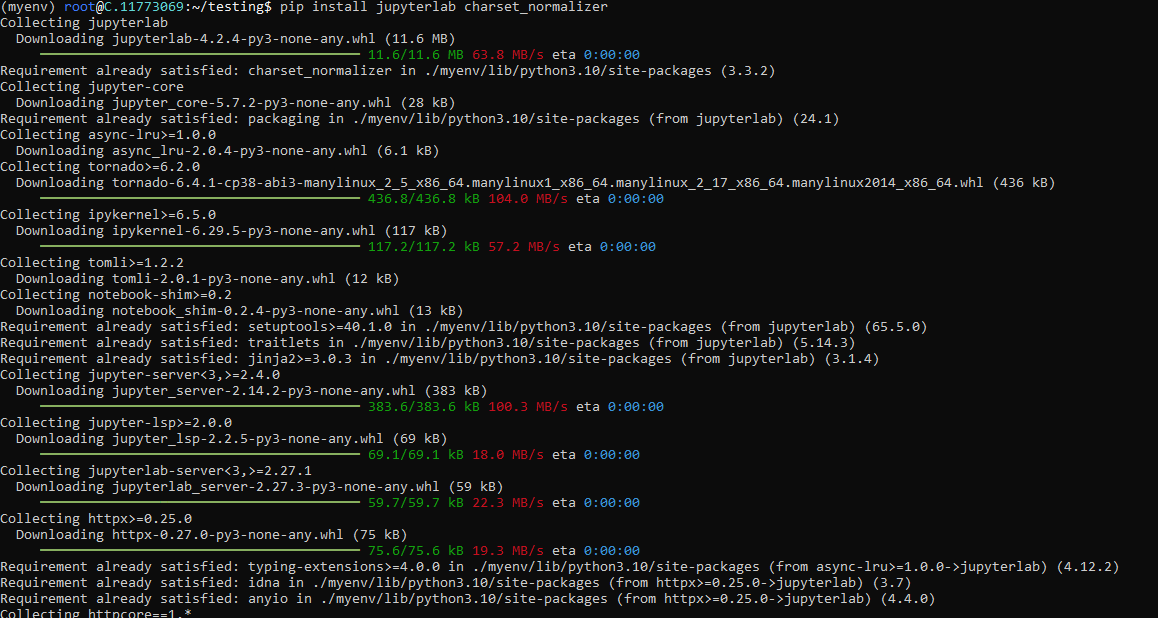

We will use JupyterLab. Run the command below to install JupyterLab on the VM.

pip install jupyterlab charset_normalizer

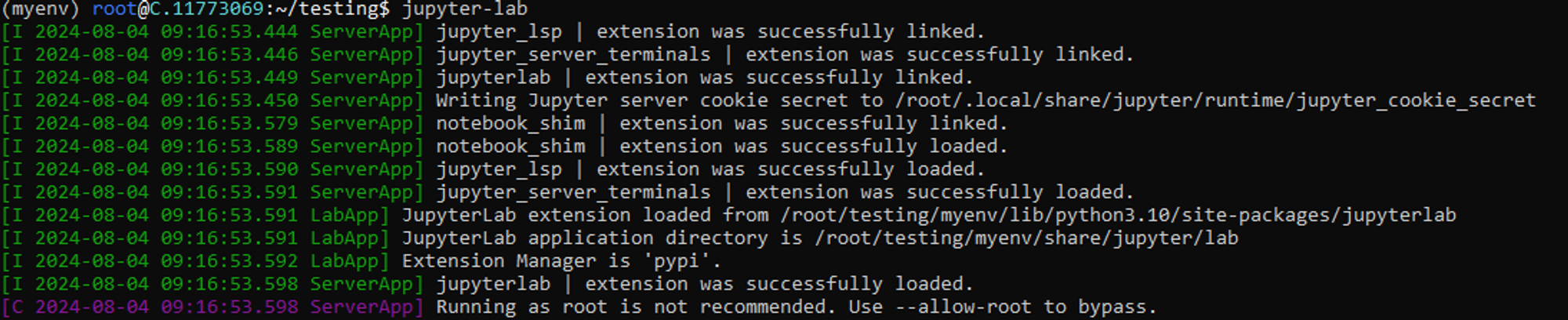

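Then run the following command:

jupyter-lab

This starts JupyterLab, which you open in your browser to interact with your model. Because JupyterLab runs on the remote VM, you may need to forward its port over SSH (for example, ssh -L 8888:localhost:8888 followed by your usual SSH connection details) to reach it from your local browser.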
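Once JupyterLab is open, a notebook cell along these lines can prompt the model. This is a sketch modeled on the usage example on the model's Hugging Face page, assuming OpenLM and Transformers are installed and a CUDA GPU is available; the helper names and sampling values are illustrative, so check the model card for the current official snippet. The model card uses `from open_lm.hf import *`; because star imports are not allowed inside a function, this sketch uses a plain `import open_lm.hf`, which should run the same registration code.

```python
# Sketch: prompt DCLM-7B from a JupyterLab cell (or a script run in the terminal).
# Assumptions: open_lm and transformers are installed, a CUDA GPU is available, and the
# repo id matches the Hugging Face link above. Sampling values are illustrative only.
GEN_KWARGS = {
    "max_new_tokens": 50,
    "do_sample": True,
    "top_p": 0.8,
    "temperature": 0.8,
    "repetition_penalty": 1.1,
}


def generation_kwargs(**overrides) -> dict:
    """Return a copy of GEN_KWARGS with overrides applied; GEN_KWARGS is left unchanged."""
    return {**GEN_KWARGS, **overrides}


def load_model(repo_id: str = "apple/DCLM-7B"):
    """Load the tokenizer and model onto the GPU (slow: run once per session)."""
    import open_lm.hf  # noqa: F401  registers the OpenLM architecture with transformers
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(repo_id)
    model = AutoModelForCausalLM.from_pretrained(repo_id)
    return tokenizer, model.to("cuda").eval()


def generate(prompt: str, tokenizer, model, **overrides) -> str:
    """Sample a continuation of `prompt` and return the decoded text."""
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)
    output = model.generate(inputs["input_ids"], **generation_kwargs(**overrides))
    return tokenizer.decode(output[0].tolist(), skip_special_tokens=True)
```

Run `tokenizer, model = load_model()` once in its own cell, since loading is the slow step, then call `generate("Machine learning is", tokenizer, model)` as often as you like, passing overrides such as `max_new_tokens=100` when needed.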

Conclusion

The Apple DCLM-Baseline-7B model, a 7-billion-parameter language model, demonstrates the impact of systematic data curation on language model performance. Trained on 2.5 trillion tokens of carefully curated data, it achieves competitive results on the MMLU benchmark. The model is openly licensed, available on Hugging Face, and developed with PyTorch and the OpenLM framework. Deploying Apple/DCLM-7B in the cloud, particularly on NodeShift's GPU-powered VMs, takes a few straightforward steps, from account setup to running the model in JupyterLab.

For more information about NodeShift: