How to deploy Pixtral-12b in the Cloud?

Pixtral 12B, an open-source large language model (LLM) with 12 billion parameters, was released by Mistral. It is Mistral's first multimodal model, which means it can analyze both text and images.

With its 12 billion parameters, it can interpret documents, graphs, and charts, and handle other tasks that combine text and images.

A key feature of Pixtral 12B is its ability to process multiple images at their native resolution within a single input. The model has a 128,000-token context window, which lets it analyze large, complex documents, many images, or multiple data sources at once. This makes it useful for businesses in areas such as document scanning and financial reporting.

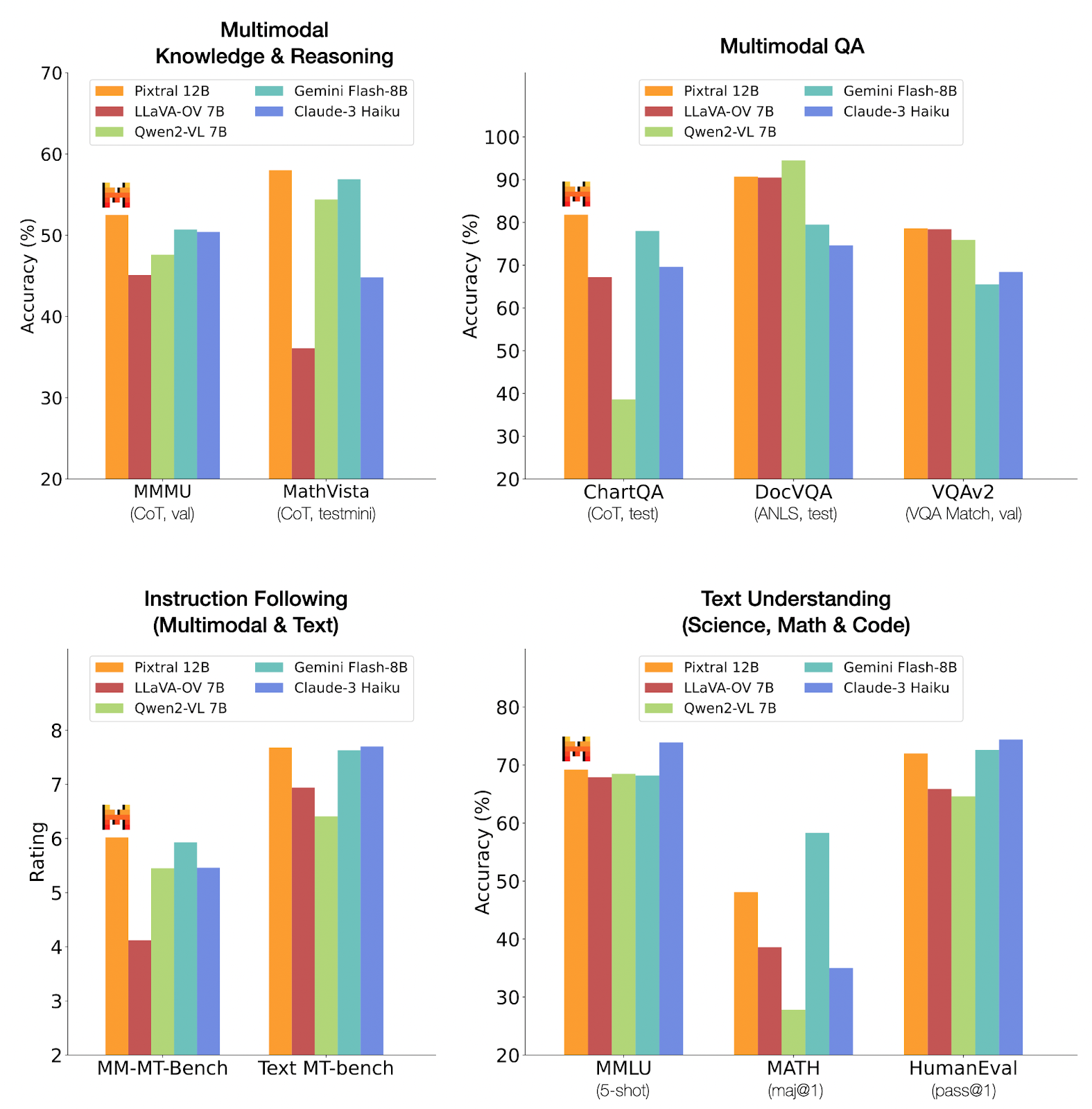

Pixtral benchmarks

Pixtral performs well on multimodal knowledge and reasoning benchmarks, most notably MathVista, where it leads the comparison. It also ranks highly on multimodal question-answering tasks, especially ChartQA.

However, other models such as Gemini Flash-8B and Claude 3 Haiku show competitive or better performance on instruction-following and text-only tasks. This suggests that while Pixtral 12B may not be the strongest choice for purely text-based problems, it excels at multimodal and visual reasoning.

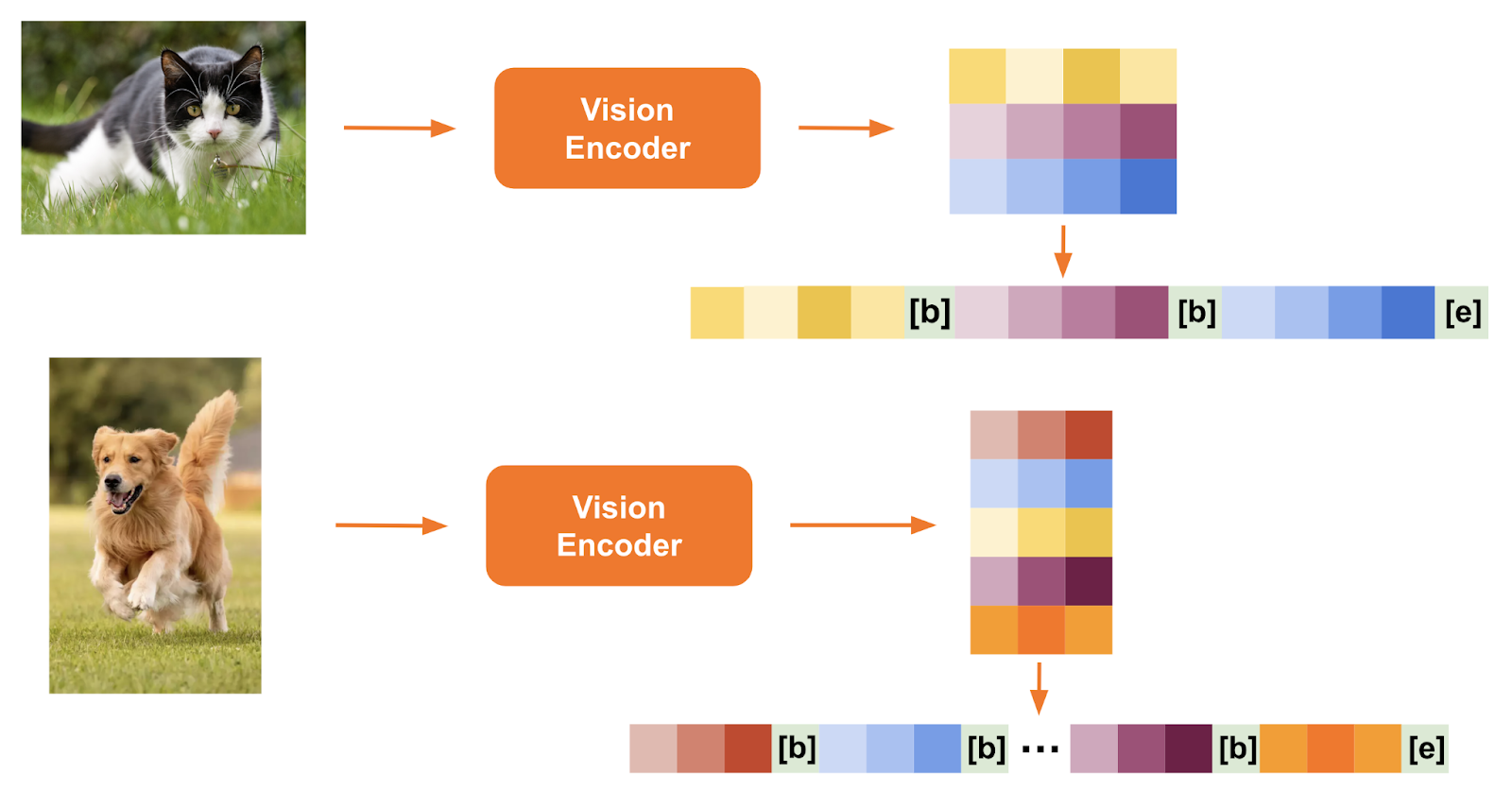

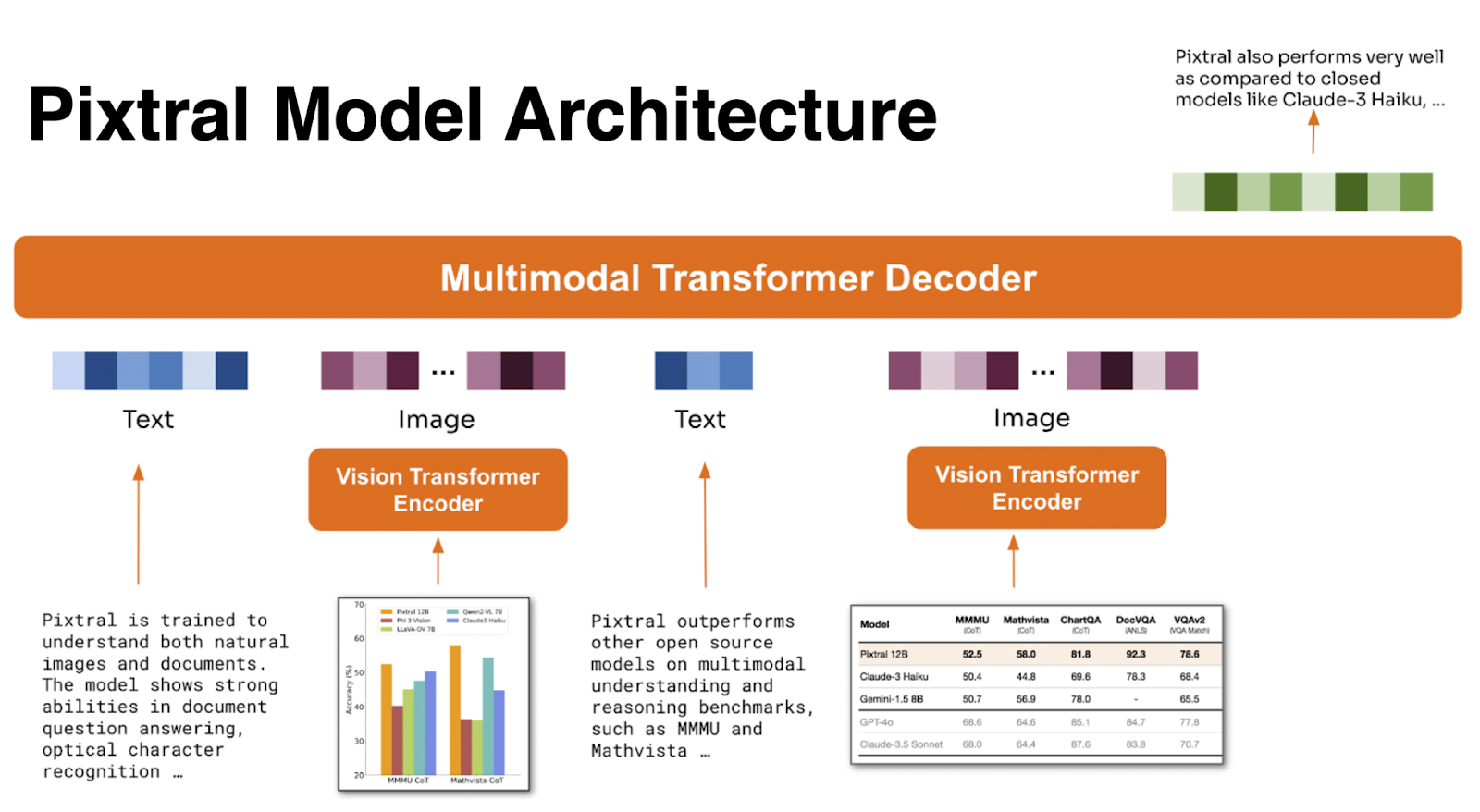

Pixtral Architecture

Pixtral 12B's architecture is built to handle text and images at the same time. A Multimodal Transformer Decoder and a Vision Encoder are its two primary parts.

With 400 million parameters, the Vision Encoder is specially trained to handle images with different resolutions and sizes.

With 12 billion parameters, the Multimodal Transformer Decoder is the second and larger component. It is trained to predict the next text token in sequences that interleave text and image data, and it is based on the existing Mistral NeMo architecture.

The decoder supports very long contexts (up to 128k tokens), enough to hold many image tokens alongside substantial text from large documents.

This combined architecture lets Pixtral handle images of many sizes and aspect ratios: it converts high-resolution images into tokens at their native resolution instead of squeezing them into a fixed size, so fine detail is preserved.
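Mistral describes the vision encoder as splitting each image into 16×16-pixel patches, producing one token per patch, with an [IMG_BREAK] token between rows and an [IMG_END] token after the last row. The sketch below estimates how many tokens an image costs under that scheme. The helper name is hypothetical, and it ignores the downscaling the processor applies to very large images, so treat the result as an approximation rather than the exact count the library produces.

```python
import math

PATCH_SIZE = 16            # pixels per patch side, per Mistral's description of Pixtral
CONTEXT_WINDOW = 128_000   # context size quoted in this article

def image_token_count(width: int, height: int, patch_size: int = PATCH_SIZE) -> int:
    """Estimate the tokens for one image: one per patch plus one separator per patch row.

    Every row ends with [IMG_BREAK] except the last, which ends with [IMG_END],
    so the separators add exactly `rows` tokens. Processor-side resizing of
    very large images is deliberately ignored (an assumption of this sketch).
    """
    cols = math.ceil(width / patch_size)
    rows = math.ceil(height / patch_size)
    return rows * cols + rows

# (width, height) of the three picsum.photos images used in Step 9
sizes = [(200, 300), (500, 500), (150, 600)]
counts = [image_token_count(w, h) for w, h in sizes]
total = sum(counts)
print(counts, total, f"{total / CONTEXT_WINDOW:.1%} of the context window")
```

For the three images in Step 9 this gives 266, 1,056, and 418 tokens, about 1,740 in total, which is only a small fraction of the 128k-token window.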

Step-by-Step Process to deploy Pixtral-12b in the Cloud

For the purpose of this tutorial, we will use a GPU-powered Virtual Machine offered by NodeShift; however, you can replicate the same steps with any other cloud provider of your choice. NodeShift provides affordable Virtual Machines at scale while meeting GDPR, SOC2, and ISO27001 requirements.

Step 1: Sign Up and Set Up a NodeShift Cloud Account

Visit the NodeShift Platform and create an account. Once you've signed up, log into your account.

Follow the account setup process and provide the necessary details and information.

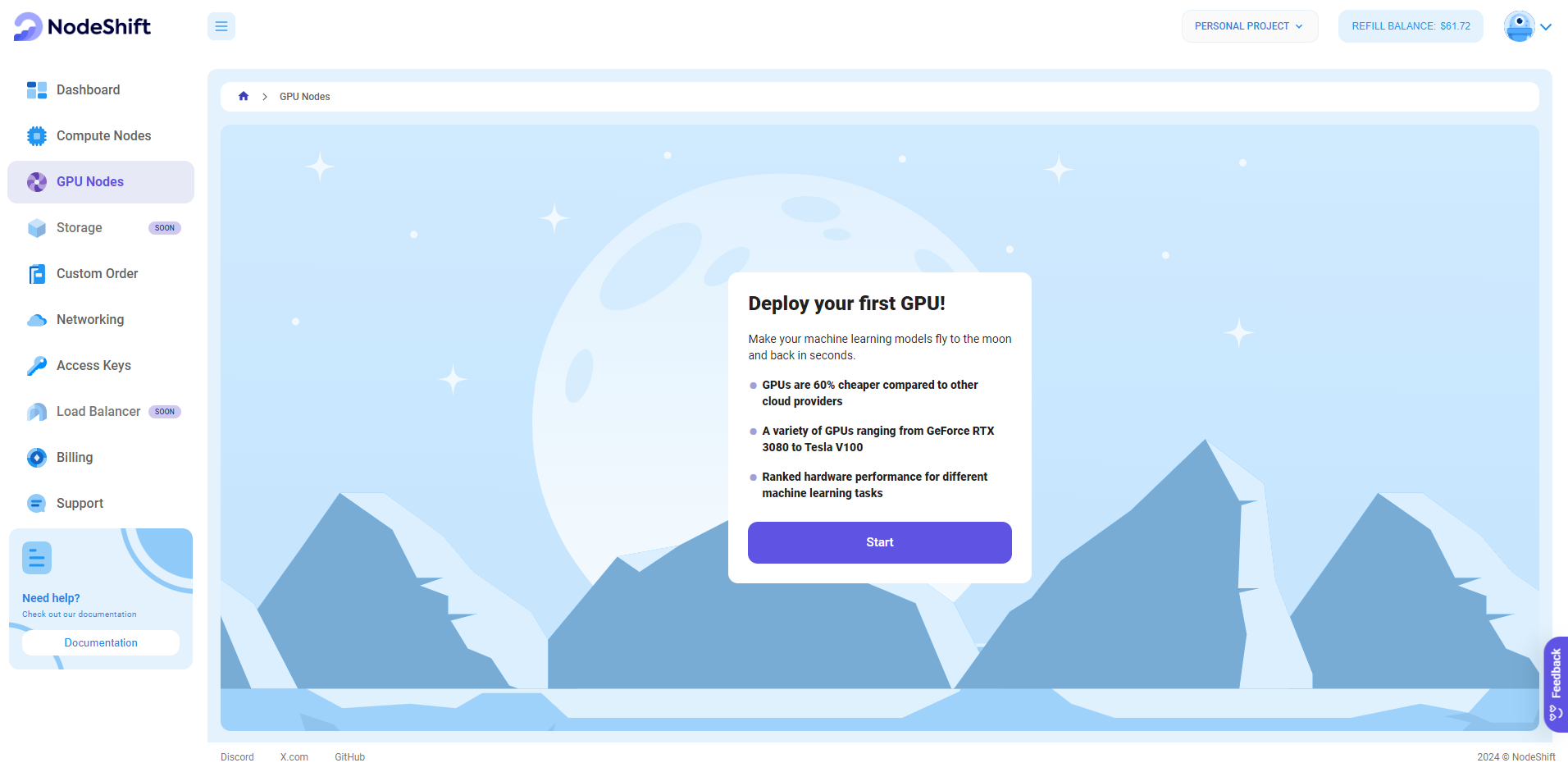

Step 2: Create a GPU Node (Virtual Machine)

GPU Nodes are NodeShift's GPU Virtual Machines: on-demand resources equipped with a range of GPUs, from A100s to H100s. These GPU-powered VMs give you control over the environment, letting you adjust the GPU, CPU, RAM, and storage configuration to your specific requirements.

In the menu on the left side, select the GPU Nodes option, then click the Create GPU Node button in the Dashboard to create your first Virtual Machine deployment.

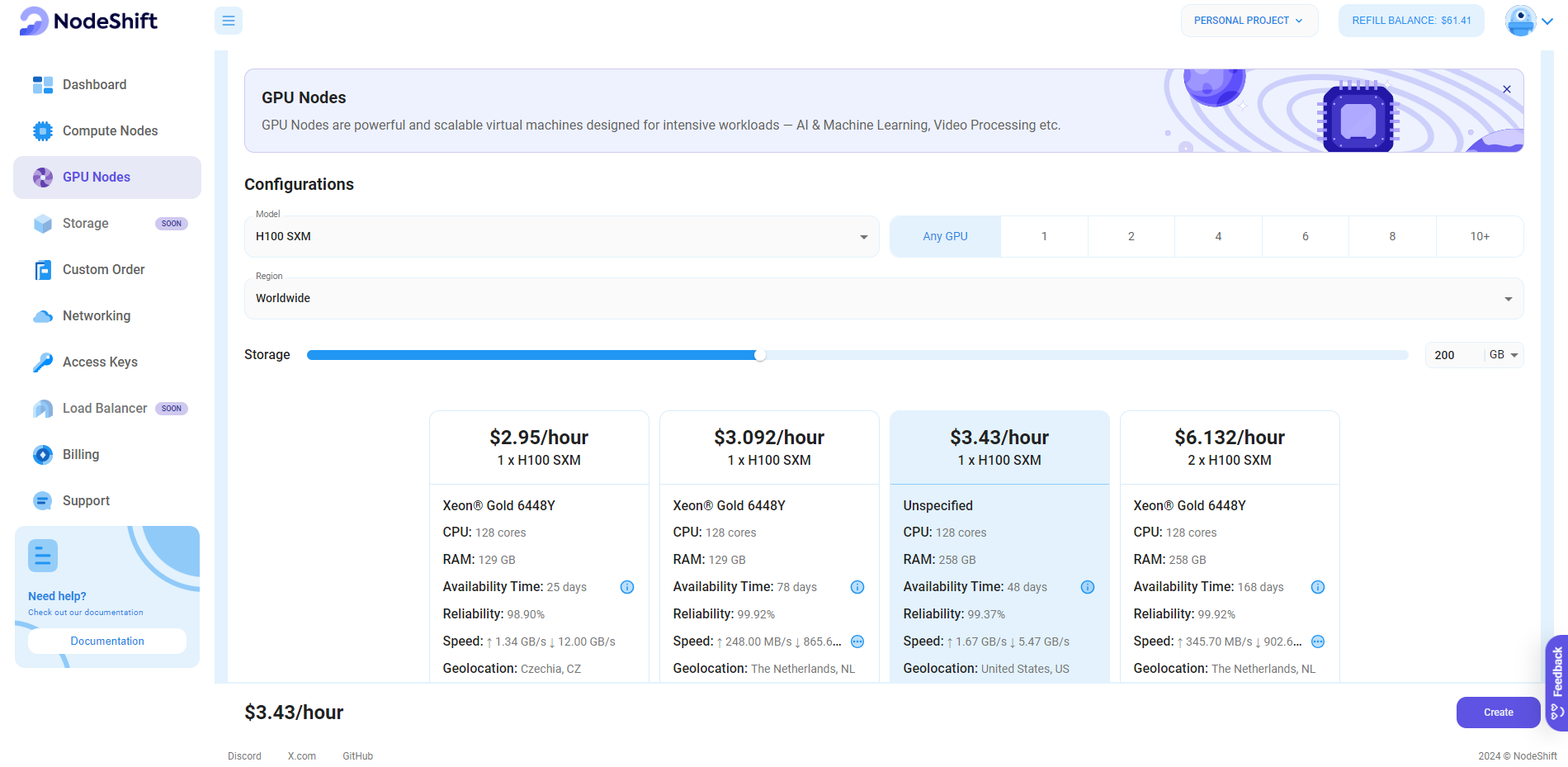

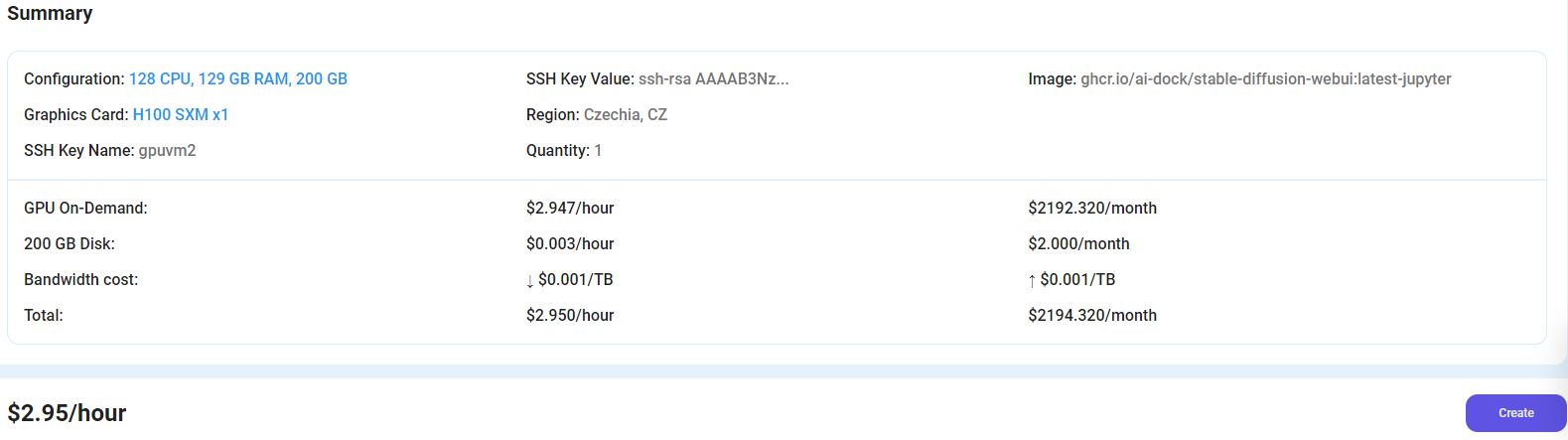

Step 3: Select a Model, Region, and Storage

In the "GPU Nodes" tab, select a GPU model and storage size that suit your needs, along with the geographical region where you want to launch your model.

We will use 1x H100 SXM GPU for this tutorial to achieve the fastest performance. However, you can choose a more affordable GPU with less VRAM if that better suits your requirements.

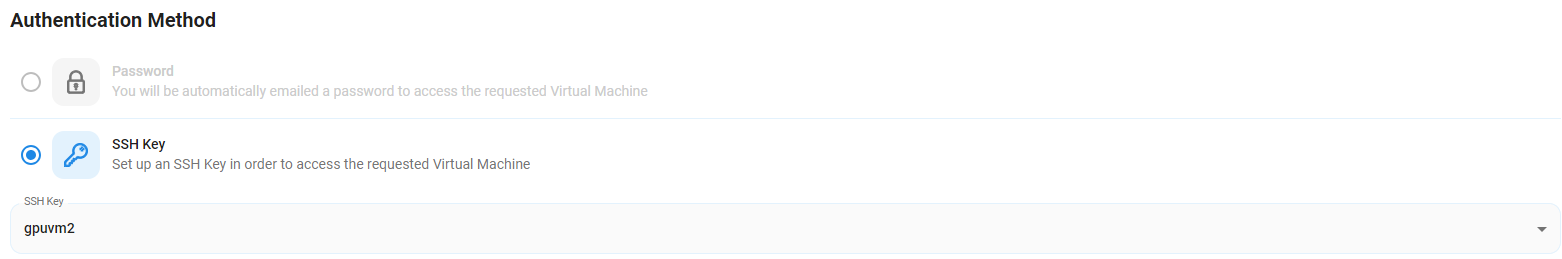
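To judge how much VRAM a cheaper GPU would need, a back-of-the-envelope estimate of the memory taken by the weights alone helps. The sketch below assumes roughly 12.4 billion parameters in total (the 12B decoder plus the 400M vision encoder described above) and ignores activations, the KV cache for long prompts, and framework overhead, so treat the numbers as lower bounds rather than exact requirements.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: ~12.4B parameters in total (12B decoder + 0.4B vision encoder).
# Activations, the KV cache, and CUDA overhead are NOT included.
PARAMS = 12.4e9

BYTES_PER_PARAM = {
    "float32": 4,    # full precision
    "bfloat16": 2,   # half precision, common for inference
    "int8": 1,       # 8-bit quantization
    "4-bit": 0.5,    # 4-bit quantization
}

def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the memory needed to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

for dtype, nbytes in BYTES_PER_PARAM.items():
    print(f"{dtype:>8}: ~{weight_memory_gb(PARAMS, nbytes):.1f} GB")
```

In bfloat16 the weights need roughly 25 GB, so a GPU with 40 GB or more of VRAM should be able to hold them with room for a moderate context, while loading in full float32 precision roughly doubles that. The int8 and 4-bit rows require additional quantization libraries that this tutorial does not cover.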

Step 4: Select Authentication Method

There are two authentication methods available: Password and SSH Key. SSH keys are a more secure option. To create them, please refer to our official documentation.

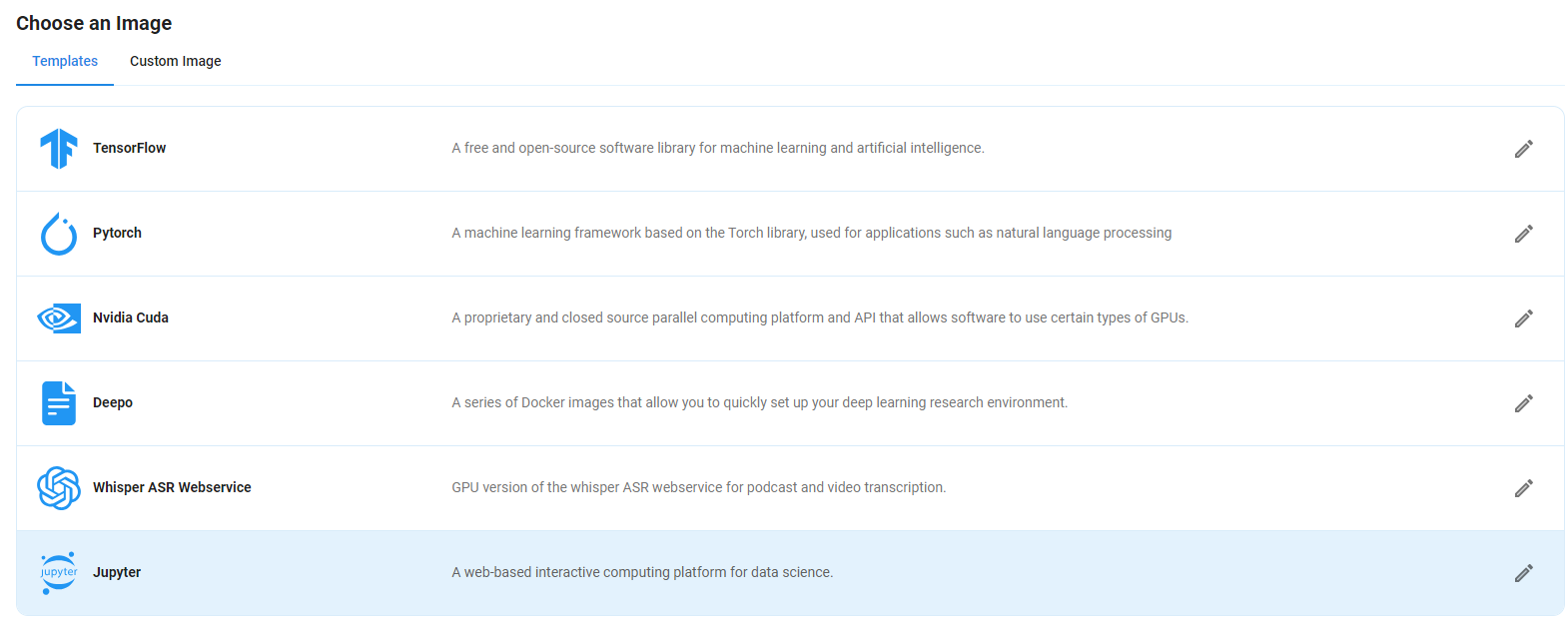

Step 5: Choose an Image

Next, choose an image for your Virtual Machine. We will deploy the Pixtral-12b model on a Jupyter Virtual Machine; Jupyter is an open-source platform that lets you install and run the model on your GPU node from a notebook. Working in a Jupyter Notebook means we avoid using the terminal, which simplifies the process and shortens setup, so you can configure the model in just a few steps and a few minutes.

Note: NodeShift provides multiple image template options, such as TensorFlow, PyTorch, NVIDIA CUDA, Deepo, Whisper ASR Webservice, and Jupyter Notebook. With these options, you don’t need to install additional libraries or packages to run Jupyter Notebook. You can start Jupyter Notebook in just a few simple clicks.

After choosing the image, click the 'Create' button, and your Virtual Machine will be deployed.

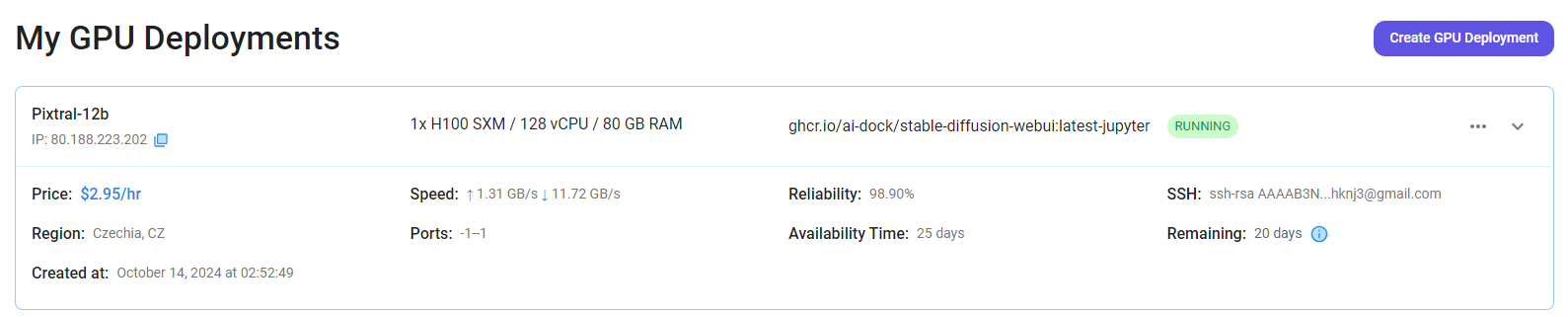

Step 6: Virtual Machine Successfully Deployed

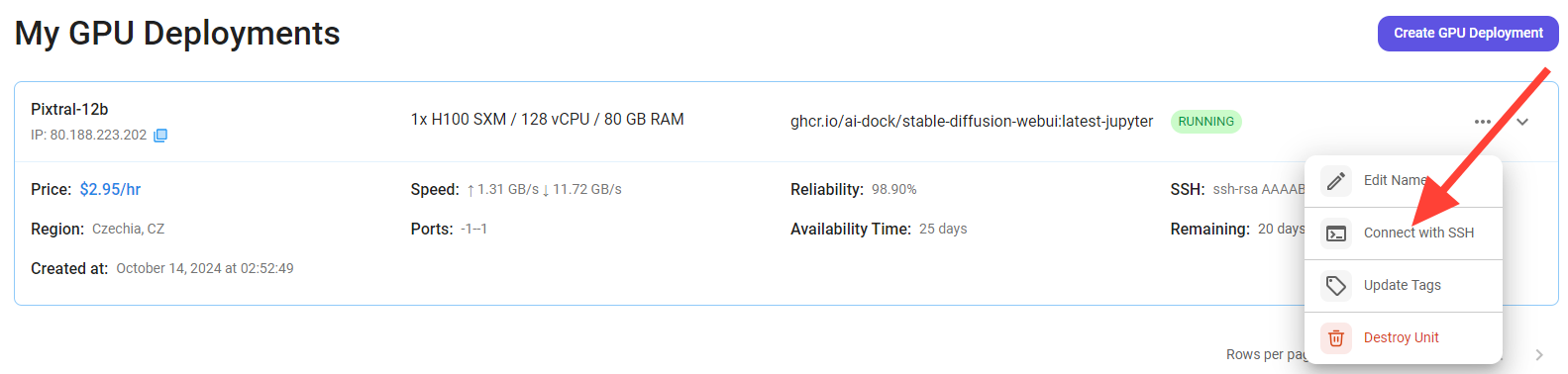

You will get visual confirmation that your node is up and running.

Step 7: Connect to Jupyter Notebook

Once your GPU VM deployment has been created and has reached the 'RUNNING' status, navigate to the page of your GPU Deployment Instance and click the 'Connect' button in the top right corner.

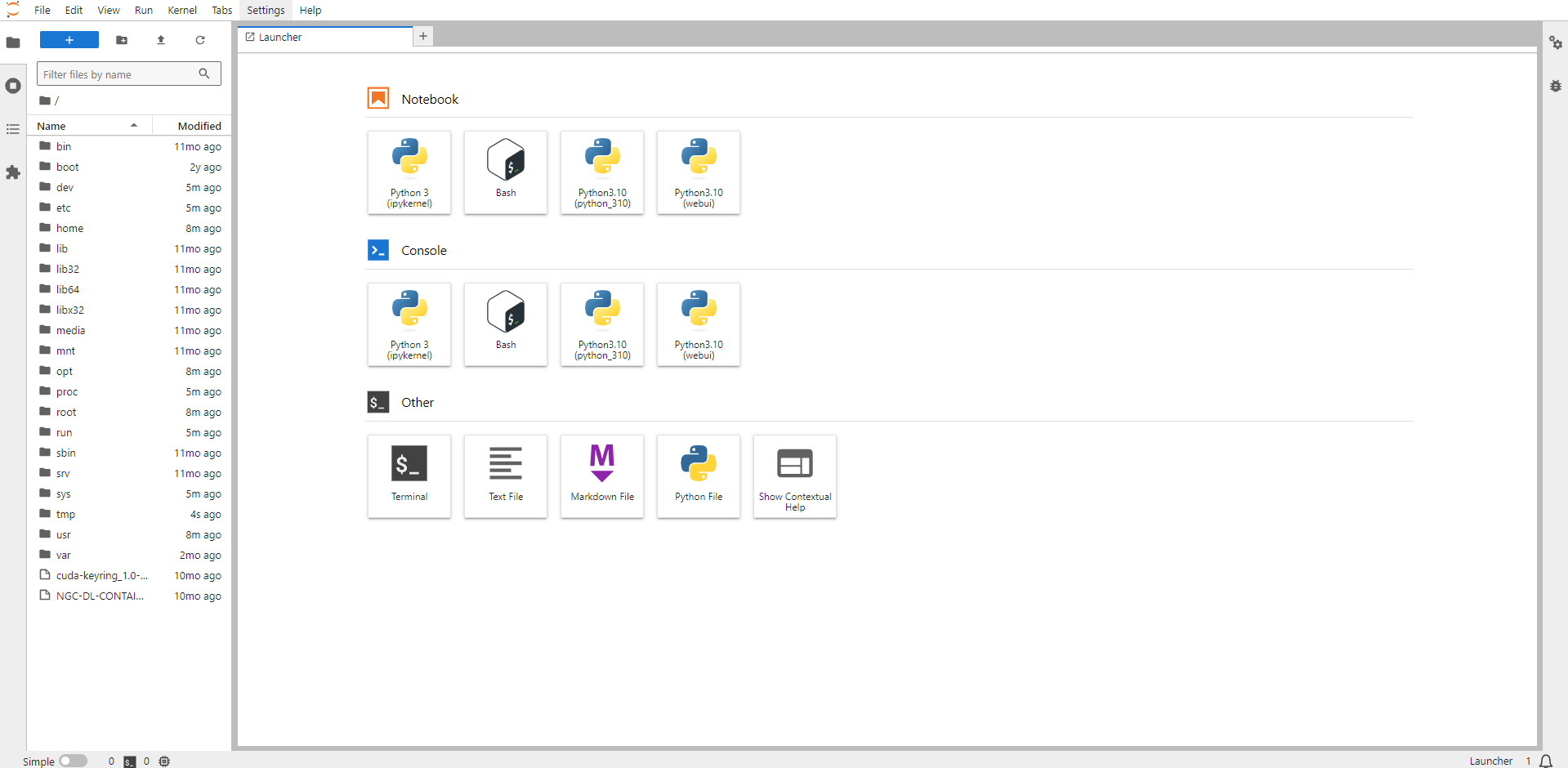

After clicking the 'Connect' button, you can view the Jupyter Notebook.

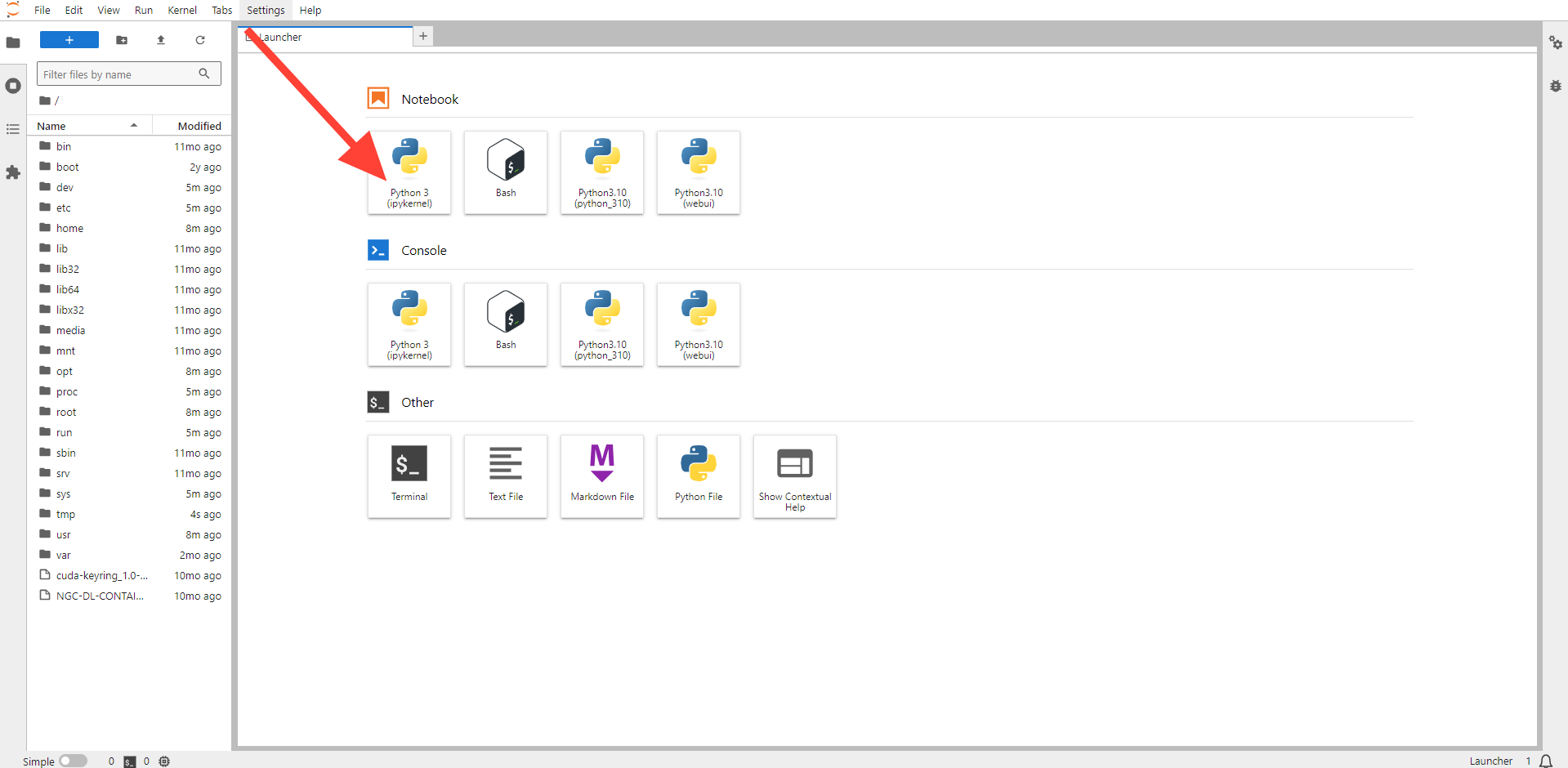

Now open a Python 3 (ipykernel) notebook.

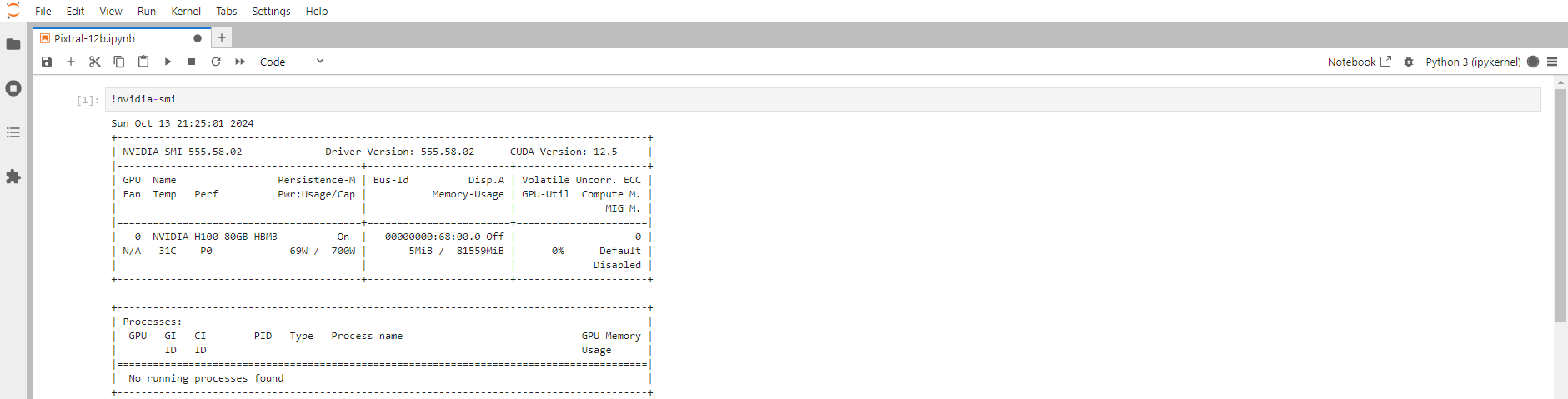

Next, if you want to check the GPU details, run the following command in a Jupyter Notebook cell:

```
!nvidia-smi
```

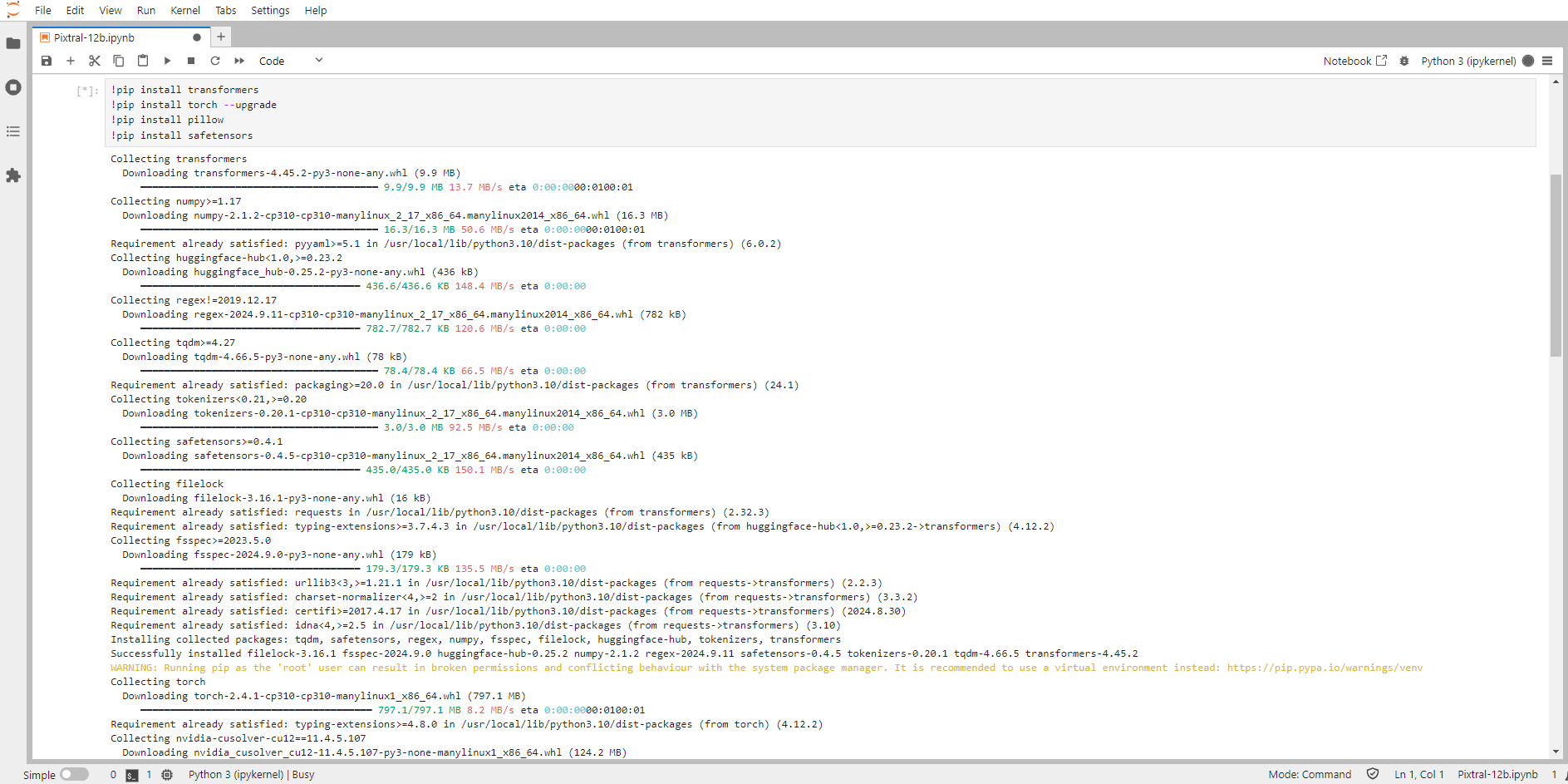

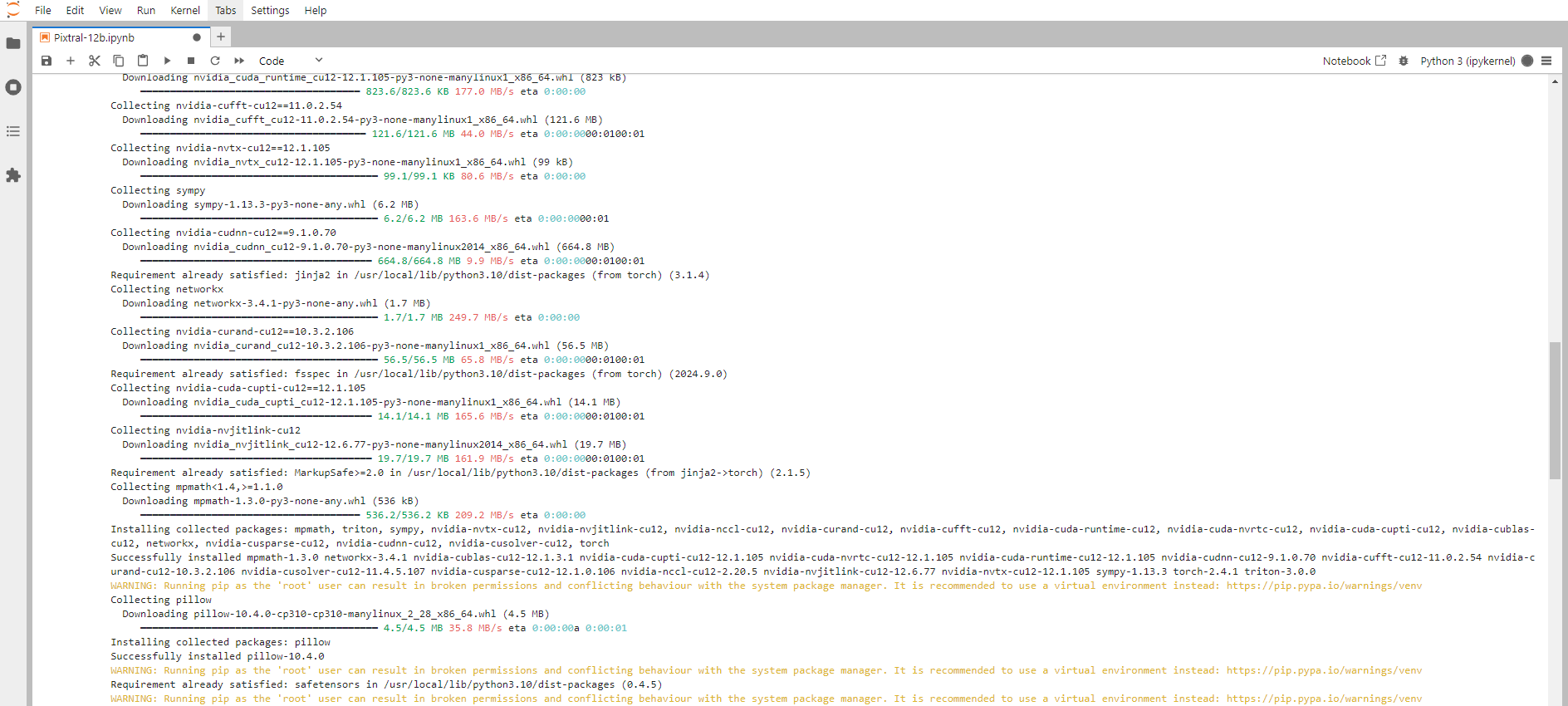
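If you would rather read the GPU details from Python (for example, to check available VRAM before loading the model), the sketch below calls nvidia-smi with its --query-gpu and --format=csv options and parses the result. The helper names are hypothetical, and the query is skipped when nvidia-smi is not on the PATH.

```python
import shutil
import subprocess

def parse_gpu_csv(text):
    """Parse 'name, memory.total' CSV rows (no header, no units) into (name, MiB) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        # Split on the last comma so GPU names containing commas stay intact
        name, mem = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, int(mem)))
    return gpus

def query_gpus():
    """Ask nvidia-smi for each GPU's name and total memory (reported in MiB)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_csv(out)

if shutil.which("nvidia-smi"):
    for name, mib in query_gpus():
        print(f"{name}: {mib / 1024:.0f} GiB")
else:
    print("nvidia-smi not found; is this a GPU node?")
```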

Step 8: Install the Required Packages and Libraries

Run the following commands in a Jupyter Notebook cell to install the required packages and libraries:

```
!pip install transformers
!pip install torch --upgrade
!pip install pillow
!pip install safetensors
```

Transformers: Hugging Face's Transformers library provides APIs and tools to download, run, and fine-tune pre-trained models.

Torch: PyTorch (installed as the torch package) is an open-source deep learning framework for Python. It provides GPU-accelerated tensor operations and automatic differentiation, and Transformers uses it as the backend for loading and running Pixtral-12b.

Pillow: Pillow is the actively maintained fork of PIL (Python Imaging Library) and the standard library for image processing in Python.

Safetensors: Safetensors is a safe and fast file format for storing and loading tensors. PyTorch model weights are traditionally pickled into a .bin file with Python's pickle utility, which can execute arbitrary code when the file is loaded; safetensors files store only tensor data, which makes loading both safer and faster.

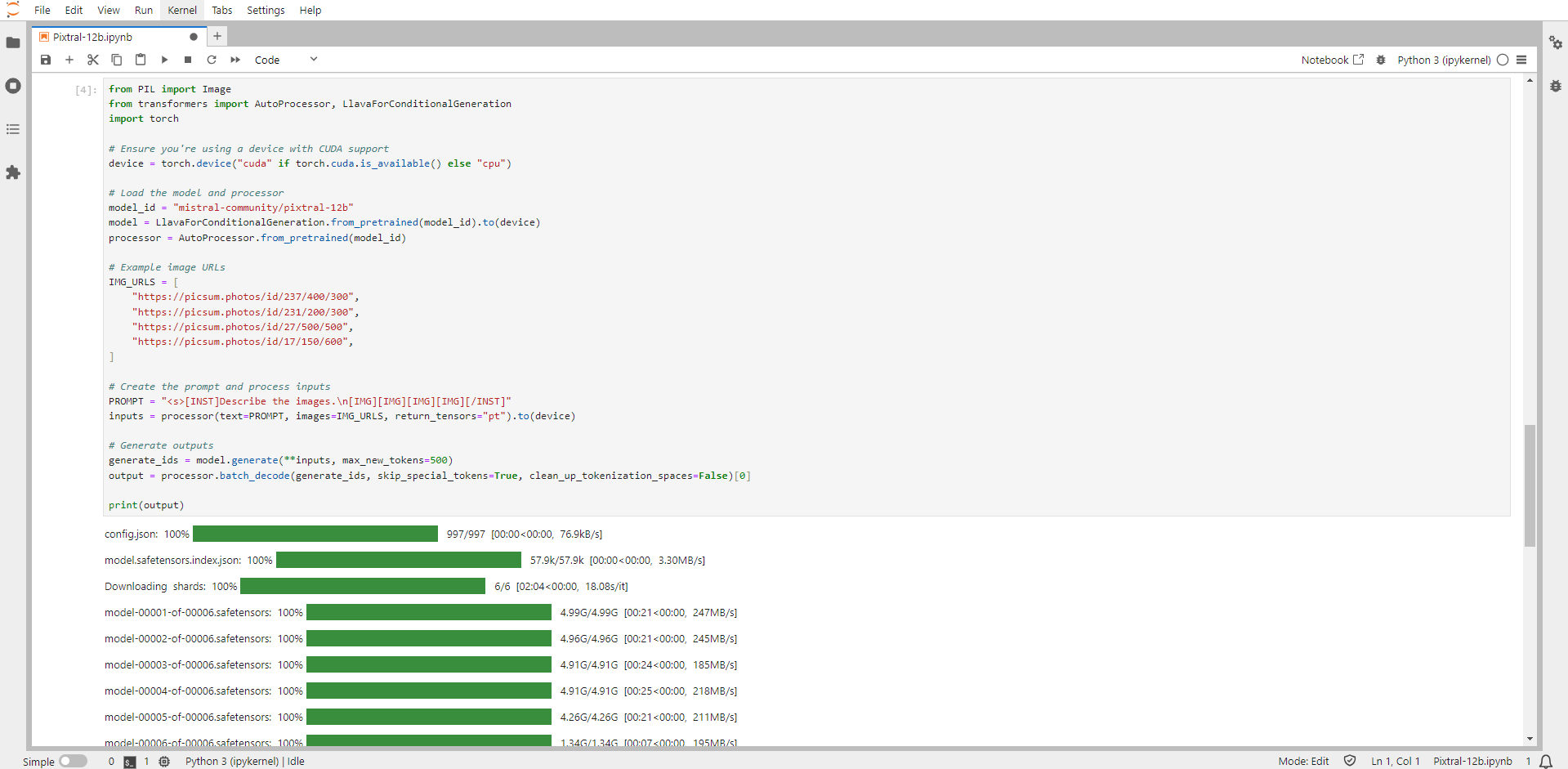

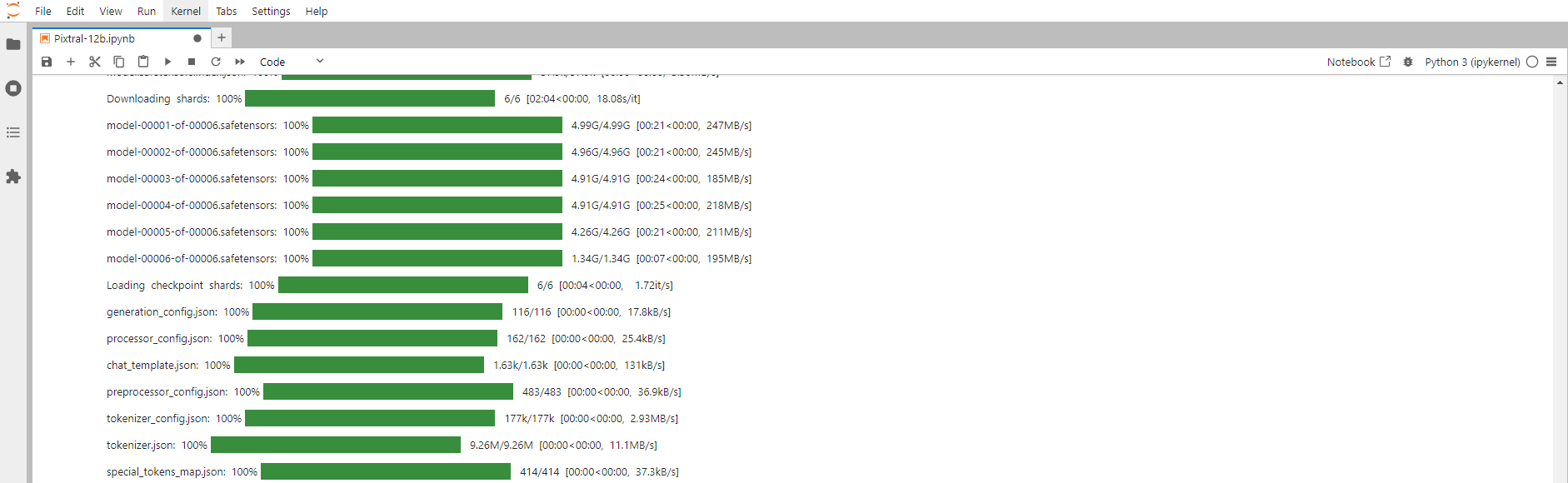
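Before loading the model, you can confirm that the packages installed correctly and that PyTorch sees the GPU. The sketch below uses the standard library's importlib.metadata to look up installed versions; the helper name is hypothetical, and the package names are the pip distribution names from the step above.

```python
from importlib.metadata import PackageNotFoundError, version

def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found

# Distribution names as installed with pip above
for name, ver in installed_versions(["transformers", "torch", "Pillow", "safetensors"]).items():
    print(f"{name:<13} {ver or 'NOT INSTALLED'}")

# Confirm that PyTorch can actually see the GPU
try:
    import torch
    print("CUDA available:", torch.cuda.is_available())
except ImportError:
    print("torch is not installed")
```

If torch had already been imported in the notebook before the upgrade, restart the kernel so the new version is the one that gets loaded.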

Step 9: Run the Pixtral-12b code and Print the Output

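```python
import torch
from transformers import AutoProcessor, LlavaForConditionalGeneration

model_id = "mistral-community/pixtral-12b"

# Load the weights in bfloat16 (about half the memory of float32) and move them to the GPU,
# so the model lives on the same device as the inputs below
model = LlavaForConditionalGeneration.from_pretrained(model_id, torch_dtype=torch.bfloat16).to("cuda")
processor = AutoProcessor.from_pretrained(model_id)

IMG_URLS = [
    "https://picsum.photos/id/231/200/300",
    "https://picsum.photos/id/27/500/500",
    "https://picsum.photos/id/17/150/600",
]

# The prompt must contain exactly one [IMG] placeholder per image (three here)
PROMPT = "<s>[INST]Describe the images.\n[IMG][IMG][IMG][/INST]"

# Tokenize the prompt and preprocess the images, then move the tensors to the GPU;
# floating-point tensors (the pixel values) are also cast to bfloat16 to match the model
inputs = processor(text=PROMPT, images=IMG_URLS, return_tensors="pt").to("cuda", dtype=torch.bfloat16)

generate_ids = model.generate(**inputs, max_new_tokens=500)

# Drop the prompt tokens so only the newly generated text is decoded and printed
new_tokens = generate_ids[:, inputs["input_ids"].shape[1]:]
output = processor.batch_decode(new_tokens, skip_special_tokens=True, clean_up_tokenization_spaces=False)[0]
print(output)
```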

You should see output similar to the following:

Image 1:

Description: A picturesque scene of towering mountains, with a meandering road snaking through the landscape. The road is flanked by vibrant, green foliage and stretches toward a distant valley bathed in soft light.

Details: The mountains stand tall with rugged, steep slopes, under a clear sky that hints at perfect weather. The twisting road enhances the sense of depth and perspective, drawing the eye toward the distant horizon.

Image 2:

Description: A coastal scene with waves rolling onto the sandy shore, their rhythmic crashes filling the air. People are scattered along the beach and swimming in the water, savoring the ocean breeze and the warm hues of the setting sun on the horizon.

Details: The waves crash with force, infusing the scene with energy and vibrancy. The sky glows with shades of orange and pink, as the setting sun casts a warm, golden light over the water and beach, enhancing the lively ambiance.

Image 3:

Description: A charming garden path winds toward a majestic tree, beneath which a cozy bench invites visitors to rest. The path is lined with neatly trimmed grass and vibrant flowers, adding a touch of color and serenity to the peaceful setting.

Details: The path, paved with small stones and gravel, gently leads to a grand tree that offers cool shade. Beneath its branches, an inviting bench sits, perfect for quiet reflection. The surrounding landscape is lush and vibrant, with well-tended grass and blooming flowers, reflecting the care put into maintaining the serene garden.
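If you change the number of images in IMG_URLS, the number of [IMG] placeholders in PROMPT has to change with it, otherwise the processor cannot match images to placeholders. The hypothetical helper below builds the raw prompt in the same "<s>[INST]...[/INST]" layout used in Step 9, with one [IMG] per image; it is a convenience sketch, not part of the Transformers API.

```python
def build_pixtral_prompt(instruction: str, num_images: int) -> str:
    """Return a raw Pixtral prompt with one [IMG] placeholder per image.

    Hypothetical helper: it reproduces the prompt layout from Step 9 rather than
    calling the processor's chat template.
    """
    if num_images < 1:
        raise ValueError("num_images must be at least 1")
    return f"<s>[INST]{instruction}\n{'[IMG]' * num_images}[/INST]"

IMG_URLS = [
    "https://picsum.photos/id/231/200/300",
    "https://picsum.photos/id/27/500/500",
    "https://picsum.photos/id/17/150/600",
]
PROMPT = build_pixtral_prompt("Describe the images.", len(IMG_URLS))
print(PROMPT)
```

Deriving the placeholder count from len(IMG_URLS) keeps the prompt and the image list in sync when you add or remove images.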

Conclusion

Pixtral-12b is an open-source multimodal model from Mistral AI that brings strong image-and-text capabilities to developers and researchers. By following this step-by-step guide, you can quickly deploy Pixtral-12b on a GPU-powered Virtual Machine with NodeShift and start running multimodal inference. NodeShift provides an accessible, secure, and affordable platform to run your AI models efficiently, making it a good choice for experimenting with Pixtral-12b and other cutting-edge AI tools.