How to Run Qwen2.5-Coder-7B-Instruct Model in the Cloud?

Qwen2.5-Coder is Alibaba Cloud's latest series of code-specific Qwen large language models. Compared with earlier Qwen models, it is more robust and performs well across coding, mathematics, reasoning, and instruction-following tasks.

Qwen2.5-Coder is released in three sizes, each as a base and an instruction-tuned model: 1.5, 7, and 32 billion parameters (the 32B model is coming soon). All models are available under the Apache 2.0 License.

The Qwen2.5-Coder-7B-Instruct model has a context length of up to 128K tokens and can generate text of up to 8K tokens in length. It demonstrates exceptional performance compared to Llama in various fields, such as coding and mathematics.

Use Cases of the Qwen2.5-Coder-7B-Instruct Model

There are several use cases for the Qwen2.5-Coder-7B-Instruct model:

- Code Generation: Generate and solve data structures and algorithms problems.

- AI Applications: Facilitate the development of chatbots and virtual assistants.

- Code Reasoning: Answer multiple-choice questions about code.

- Math Reasoning: Work through math problems such as theorems and sums.

- Debugging: Help find and fix bugs, and automate routine coding tasks.

Model Inputs and Outputs

Inputs

- Text prompt describing the theorem, binary questions, coding questions, instructions, etc.

Outputs

- The primary output of the Qwen2.5-Coder-7B-Instruct model is long-form generated text, such as code or worked-out theorems.

Qwen2.5-Coder-Instruct excels in several key areas:

- Programming across multiple languages

- Code Reasoning

- Math Reasoning

| Model | MATH | GSM8K | GaoKao2023en | OlympiadBench | CollegeMath | AIME24 |
|---|---|---|---|---|---|---|
| DeepSeek-Coder-V2-Lite-Instruct | 61.0 | 87.6 | 56.1 | 26.4 | 39.8 | 6.7 |
| Qwen2.5-Coder-7B-Instruct | 66.8 | 86.7 | 60.5 | 29.8 | 43.5 | 10.0 |

- Basic capabilities

| Model | AMC23 | MMLU | MMLU-Pro | IFEval | CEval | GPQA |
|---|---|---|---|---|---|---|
| DeepSeek-Coder-V2-Lite-Instruct | 40.4 | 42.5 | 60.6 | 38.6 | 60.1 | 27.6 |
| Qwen2.5-Coder-7B-Instruct | 42.5 | 45.6 | 68.7 | 58.6 | 61.4 | 35.6 |
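
To make the comparison in the two tables easier to read, here is a minimal sketch that computes the per-benchmark difference between the two models. The scores are copied from the tables above; the variable and function names are illustrative only.

```python
# Scores copied from the two tables above (higher is better).
BENCHMARKS = [
    "MATH", "GSM8K", "GaoKao2023en", "OlympiadBench", "CollegeMath", "AIME24",
    "AMC23", "MMLU", "MMLU-Pro", "IFEval", "CEval", "GPQA",
]
DEEPSEEK = [61.0, 87.6, 56.1, 26.4, 39.8, 6.7, 40.4, 42.5, 60.6, 38.6, 60.1, 27.6]
QWEN = [66.8, 86.7, 60.5, 29.8, 43.5, 10.0, 42.5, 45.6, 68.7, 58.6, 61.4, 35.6]


def score_deltas(names, baseline, candidate):
    """Return {benchmark: candidate - baseline}, rounded to one decimal place."""
    return {n: round(c - b, 1) for n, b, c in zip(names, baseline, candidate)}


deltas = score_deltas(BENCHMARKS, DEEPSEEK, QWEN)
behind = [name for name, delta in deltas.items() if delta < 0]

for name, delta in deltas.items():
    print(f"{name:<14}{delta:+.1f}")
print("Benchmarks where Qwen2.5-Coder-7B-Instruct trails:", behind)
```

On these numbers, Qwen2.5-Coder-7B-Instruct leads on every benchmark except GSM8K, where it trails by 0.9 points.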

In this blog, you'll learn:

- About the Qwen2.5-Coder-7B-Instruct model

- How to set up a GPU-powered Virtual Machine offered by NodeShift

- How to run the Qwen2.5-Coder-7B-Instruct model in the NodeShift Cloud

Step-by-Step Process to Run Qwen2.5-Coder-7B-Instruct Model in the Cloud

For the purpose of this tutorial, we will use a GPU-powered Virtual Machine offered by NodeShift; however, you can replicate the same steps with any other cloud provider of your choice. NodeShift provides the most affordable Virtual Machines at a scale that meets GDPR, SOC2, and ISO27001 requirements.

Step 1: Sign Up and Set Up a NodeShift Cloud Account

Visit the NodeShift Platform and create an account. Once you've signed up, log into your account.

Follow the account setup process and provide the necessary details and information.

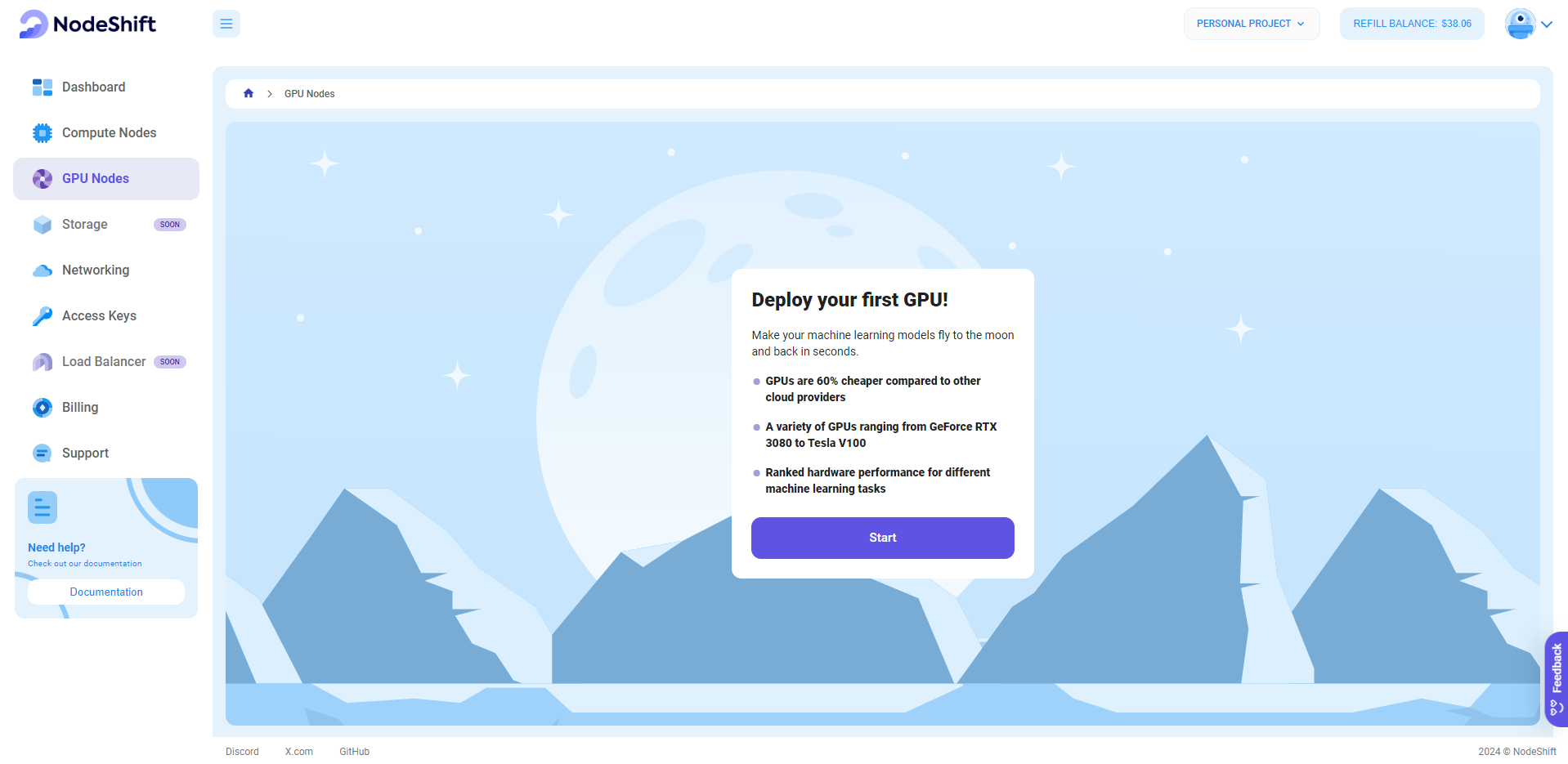

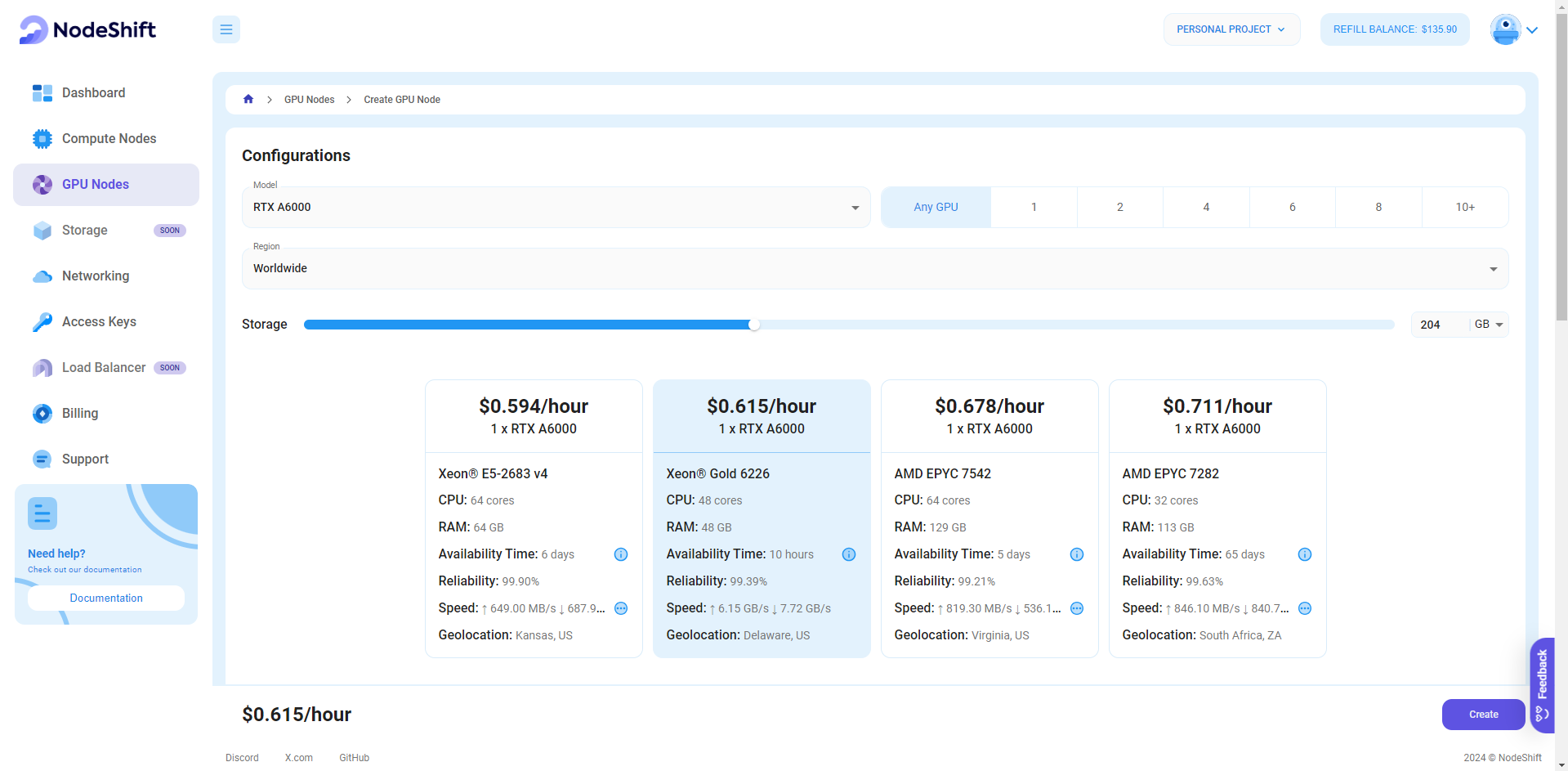

Step 2: Create a GPU Node (Virtual Machine)

GPU Nodes are NodeShift's GPU Virtual Machines, on-demand resources equipped with diverse GPUs ranging from H100s to A100s. These GPU-powered VMs provide enhanced environmental control, allowing configuration adjustments for GPUs, CPUs, RAM, and Storage based on specific requirements.

Navigate to the menu on the left side and select the GPU Nodes option. In the Dashboard, click the Create GPU Node button to create your first Virtual Machine deployment.

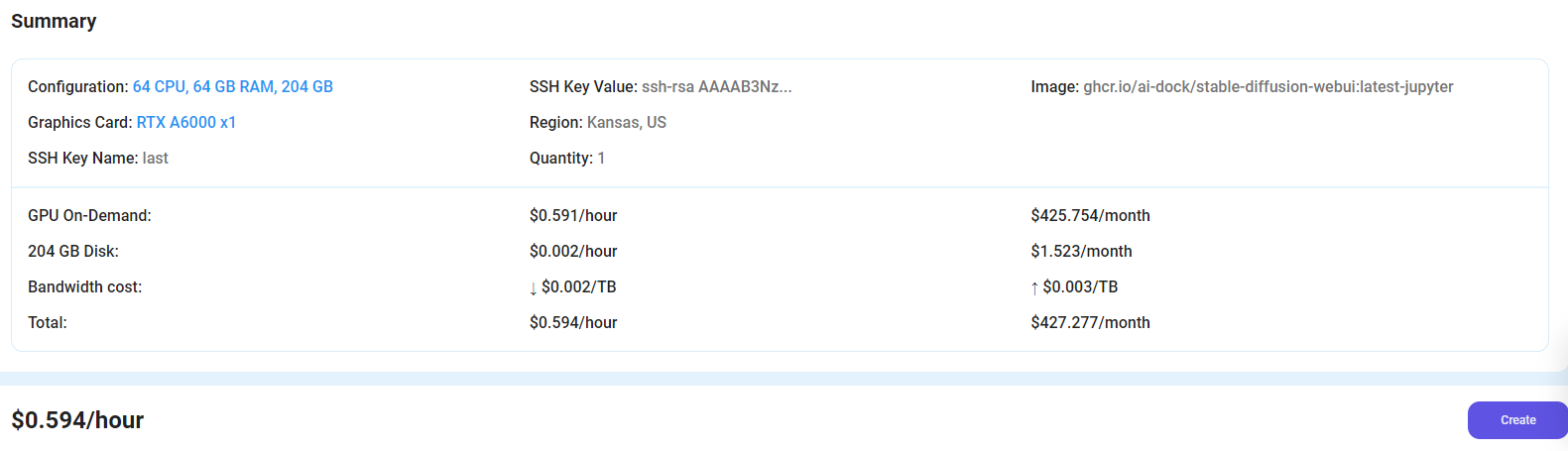

Step 3: Select a Model, Region, and Storage

In the "GPU Nodes" tab, select a GPU Model and Storage according to your needs and the geographical region where you want to launch your model.

We will use 1x RTX A6000 GPU for this tutorial; its 48 GB of VRAM leaves ample headroom for a 7B model. However, you can choose a more affordable GPU with less VRAM if that better suits your requirements.

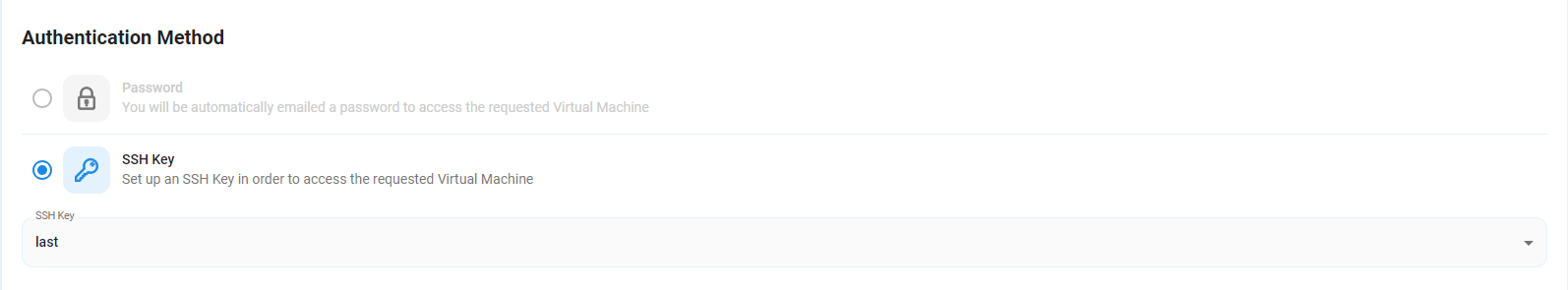
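
When deciding whether a cheaper GPU is enough, a rough estimate of the memory needed for the model weights can help. The sketch below is a back-of-the-envelope calculation, not a measurement: it assumes the nominal 7 billion parameters from the model name and counts only the weights, ignoring the KV cache, activations, and CUDA overhead, all of which add to the real footprint.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption: nominal parameter count taken from the "7B" in the model name.
NOMINAL_PARAMS = 7e9

# Bytes per parameter for common precisions.
PRECISIONS = [("bf16/fp16", 2), ("int8", 1), ("4-bit", 0.5)]

for label, nbytes in PRECISIONS:
    print(f"{label:>9}: ~{weight_memory_gb(NOMINAL_PARAMS, nbytes):.1f} GB")
```

At 16-bit precision the weights alone come to roughly 14 GB, so a GPU with 24 GB of VRAM is a plausible lower-cost option, while the 48 GB on the RTX A6000 leaves more room for long prompts.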

Step 4: Select Authentication Method

There are two authentication methods available: Password and SSH Key. SSH keys are a more secure option. To create them, please refer to our official documentation.

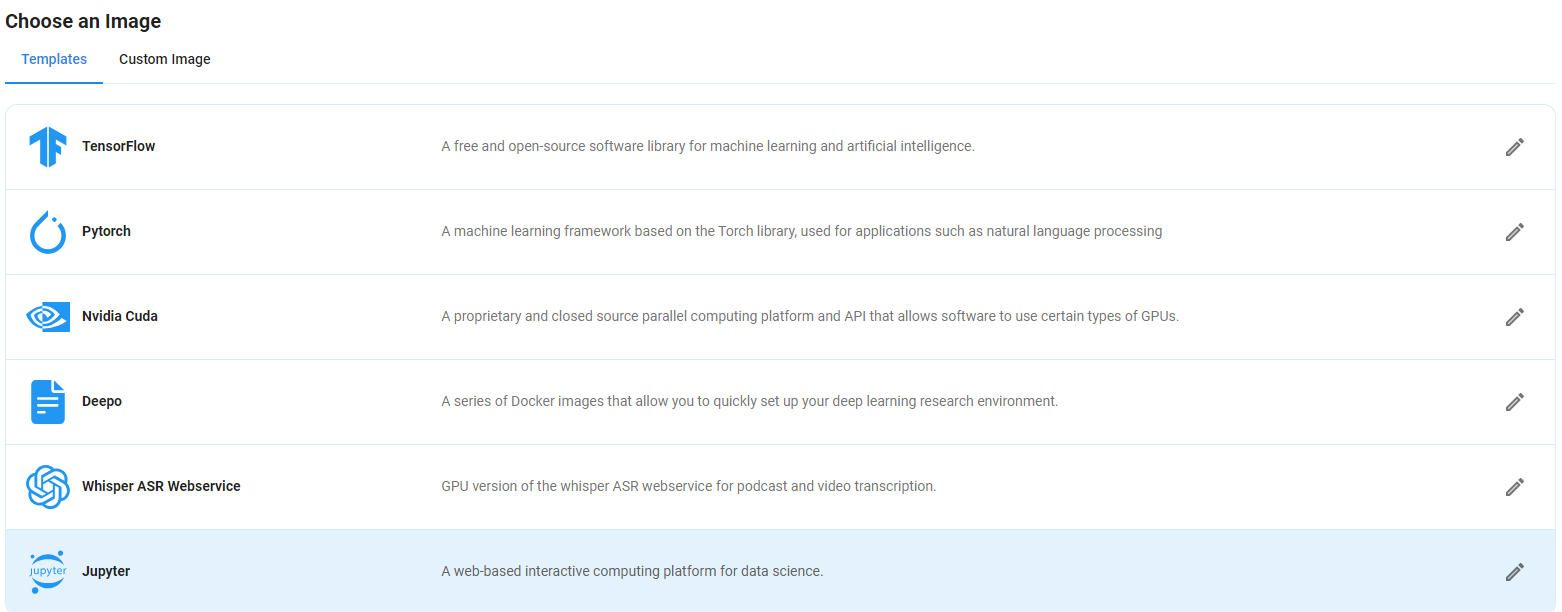

Step 5: Choose an Image

Next, you will need to choose an image for your Virtual Machine. We will deploy the Qwen2.5-Coder-7B-Instruct Model on a Jupyter Virtual Machine. This open-source platform will allow you to install and run the Qwen2.5-Coder-7B-Instruct Model on your GPU node. Running the model in a Jupyter Notebook avoids the terminal, which simplifies the process and lets you configure the model in a few steps.

Note: NodeShift provides multiple image template options, such as TensorFlow, PyTorch, NVIDIA CUDA, Deepo, Whisper ASR Webservice, and Jupyter Notebook. With these options, you don’t need to install additional libraries or packages to run Jupyter Notebook. You can start Jupyter Notebook in just a few simple clicks.

After choosing the image, click the 'Create' button, and your Virtual Machine will be deployed.

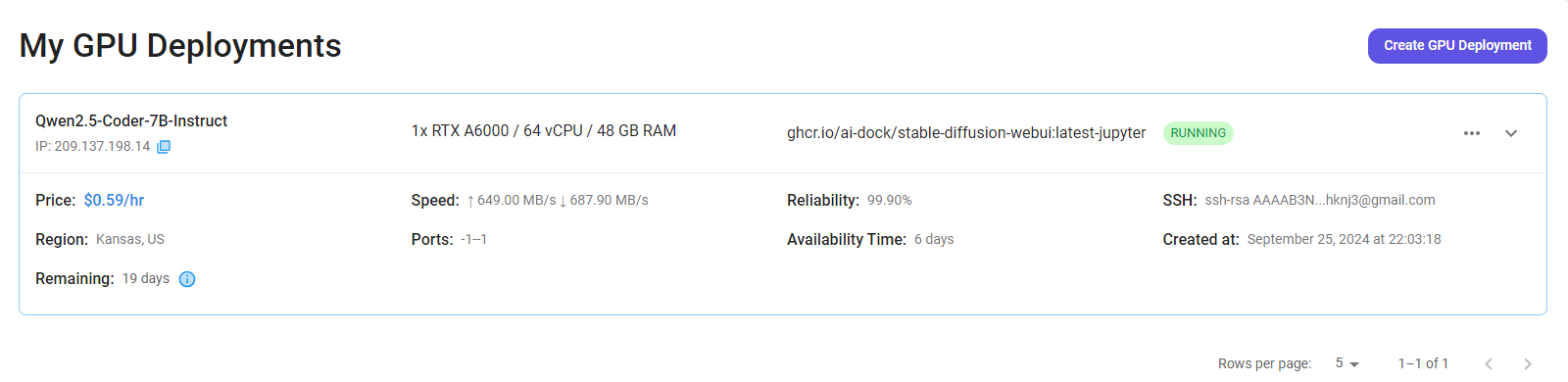

Step 6: Virtual Machine Successfully Deployed

You will get visual confirmation that your node is up and running.

Step 7: Connect to Jupyter Notebook

Once your GPU VM deployment has been created and has reached the 'RUNNING' status, navigate to the page of your GPU Deployment Instance. Then, click the 'Connect' button in the top right corner.

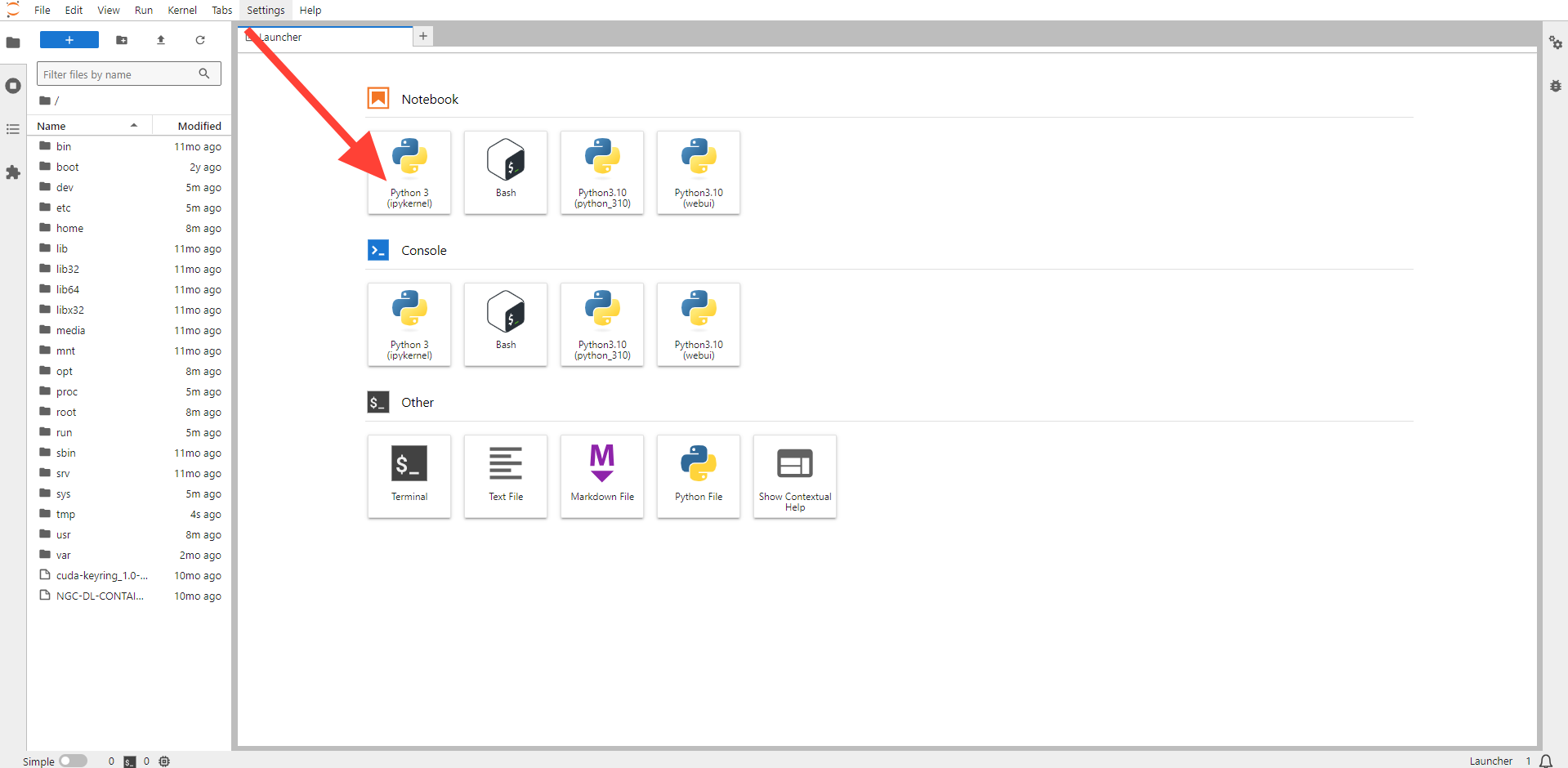

After clicking the 'Connect' button, you can view the Jupyter Notebook.

Now open Python 3(pykernel) Notebook.

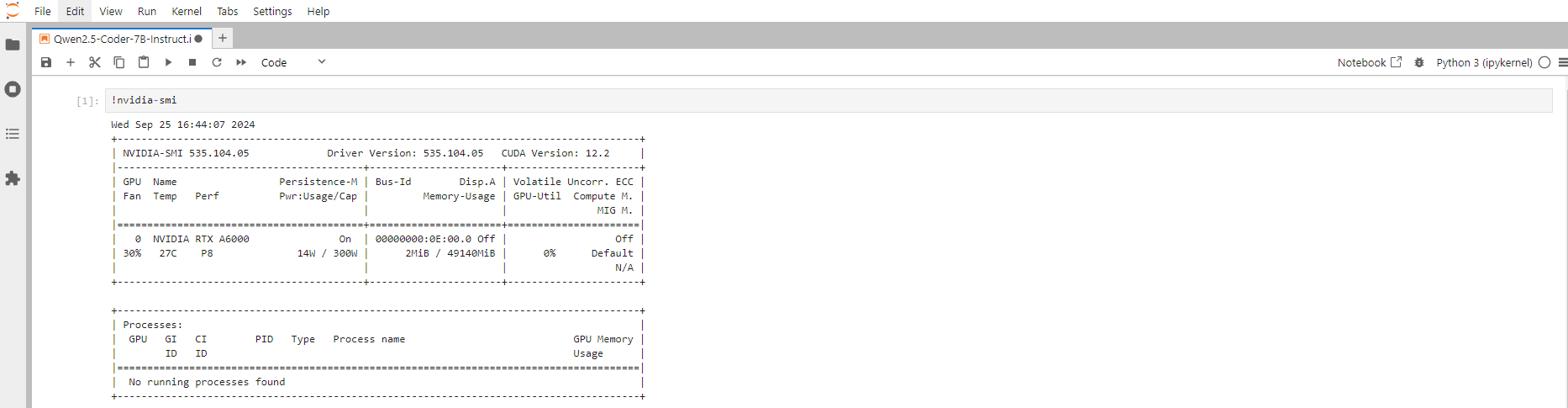

Next, If you want to check the GPU details, run the command in the Jupyter Notebook cell:

!nvidia-smi

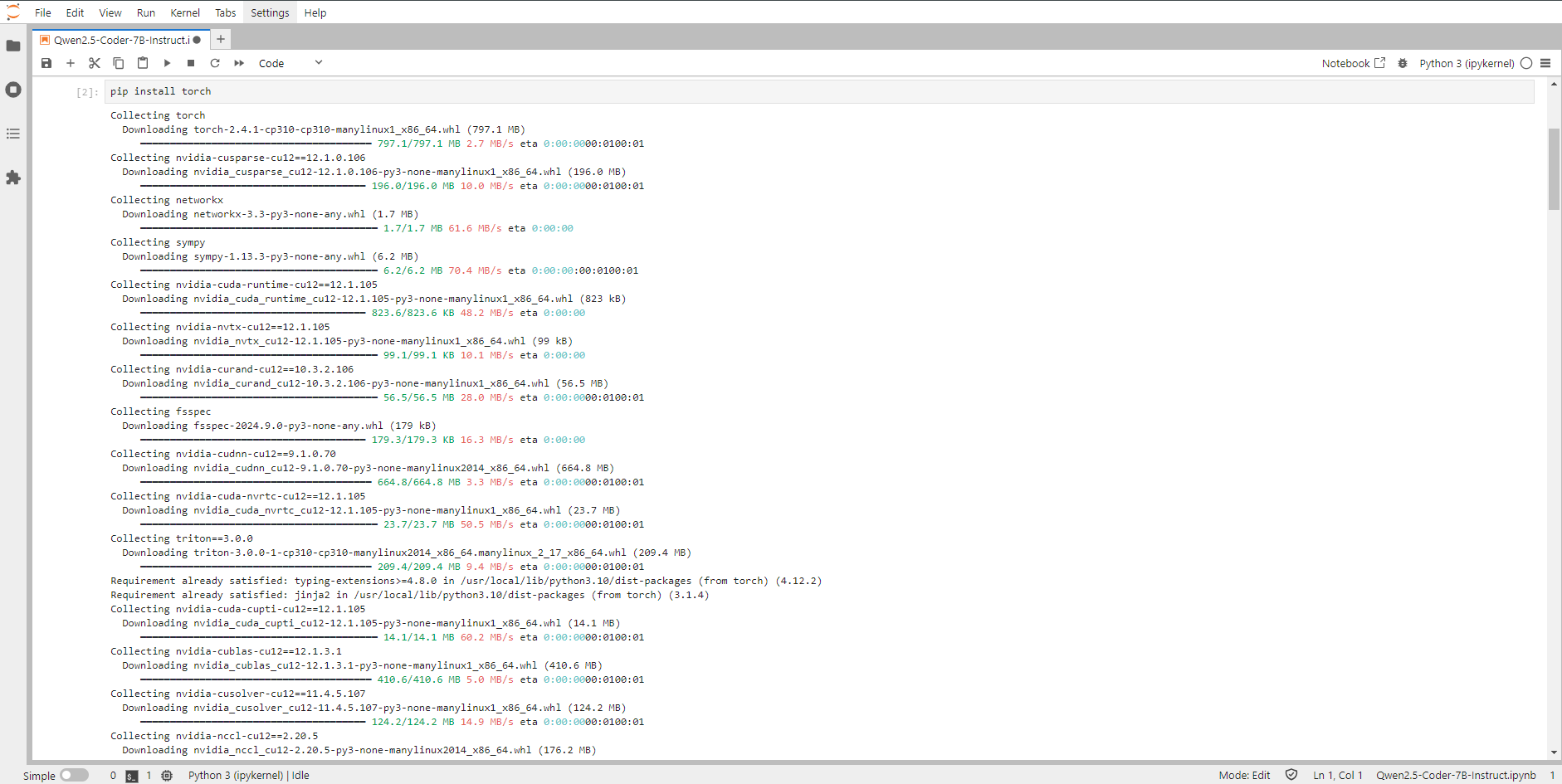
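
If you would rather read the GPU details from Python than from the text table, the sketch below calls `nvidia-smi` with its CSV query flags and parses the result. The helper names `parse_gpu_csv` and `list_gpus` are illustrative, and the function returns an empty list when `nvidia-smi` is not available or fails.

```python
import shutil
import subprocess

# nvidia-smi's CSV query mode: one line per GPU, no header, no units (values in MiB).
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,memory.used",
    "--format=csv,noheader,nounits",
]


def parse_gpu_csv(text):
    """Parse 'name, total, used' lines into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        # Split from the right so a comma inside the GPU name would not break parsing.
        name, total, used = [field.strip() for field in line.rsplit(",", 2)]
        gpus.append({"name": name, "total_mib": int(total), "used_mib": int(used)})
    return gpus


def list_gpus():
    """Return GPU info, or an empty list if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


print(list_gpus())
```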

Step 8: Install the Torch Library

The `torch` package installs PyTorch, an open-source machine learning library for Python. It provides tensor computation with GPU acceleration and automatic differentiation for building and running deep learning models.

PyTorch was designed with performance in mind and relies on highly optimized libraries such as CUDA, BLAS, and LAPACK for numerical computations.

Run the following command in the Jupyter Notebook cell to install PyTorch:

pip install torch

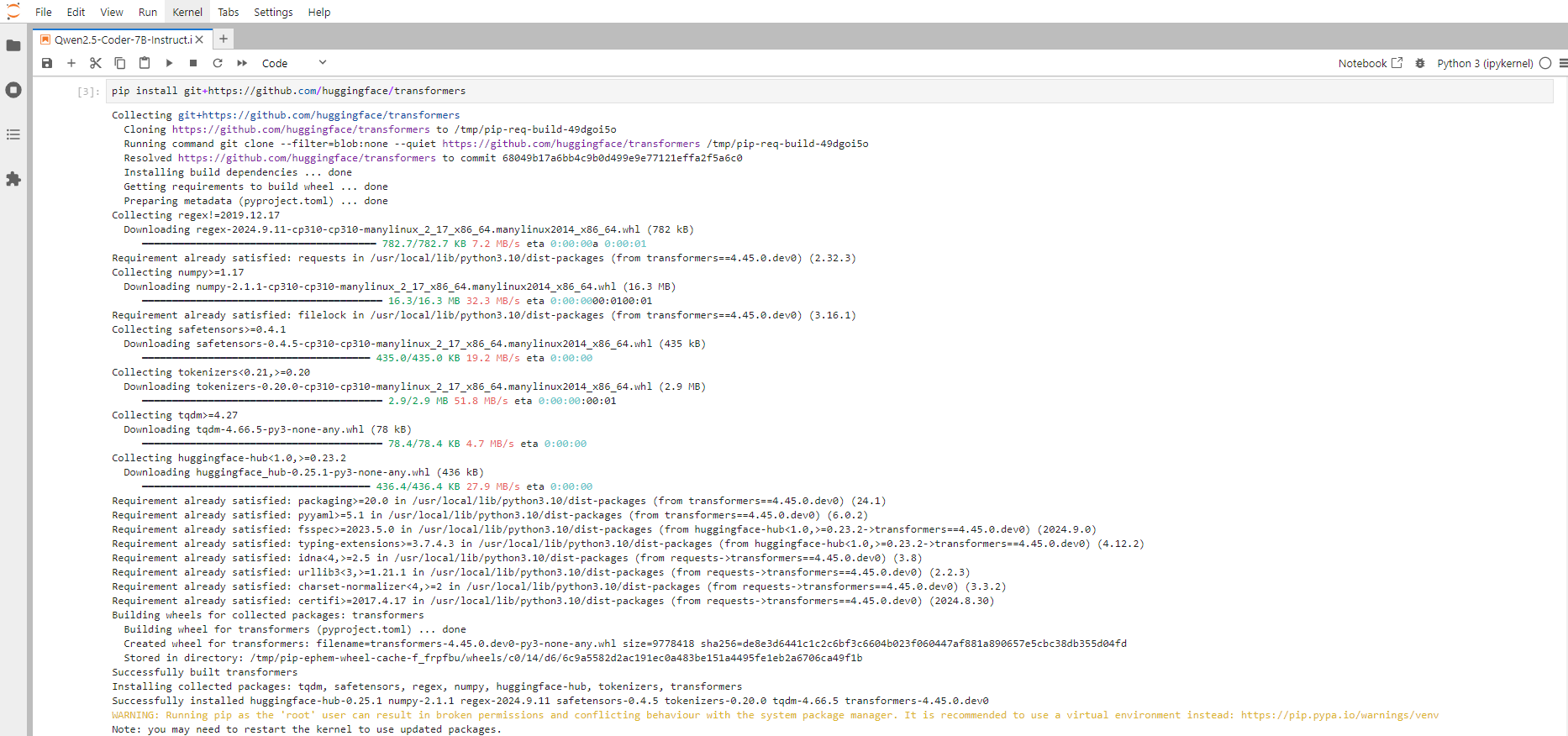

Step 9: Install Transformers from GitHub

To install Transformers, run the following command in the Jupyter Notebook cell:

pip install git+https://github.com/huggingface/transformers

Transformers provides APIs and tools to download and efficiently train pre-trained models.

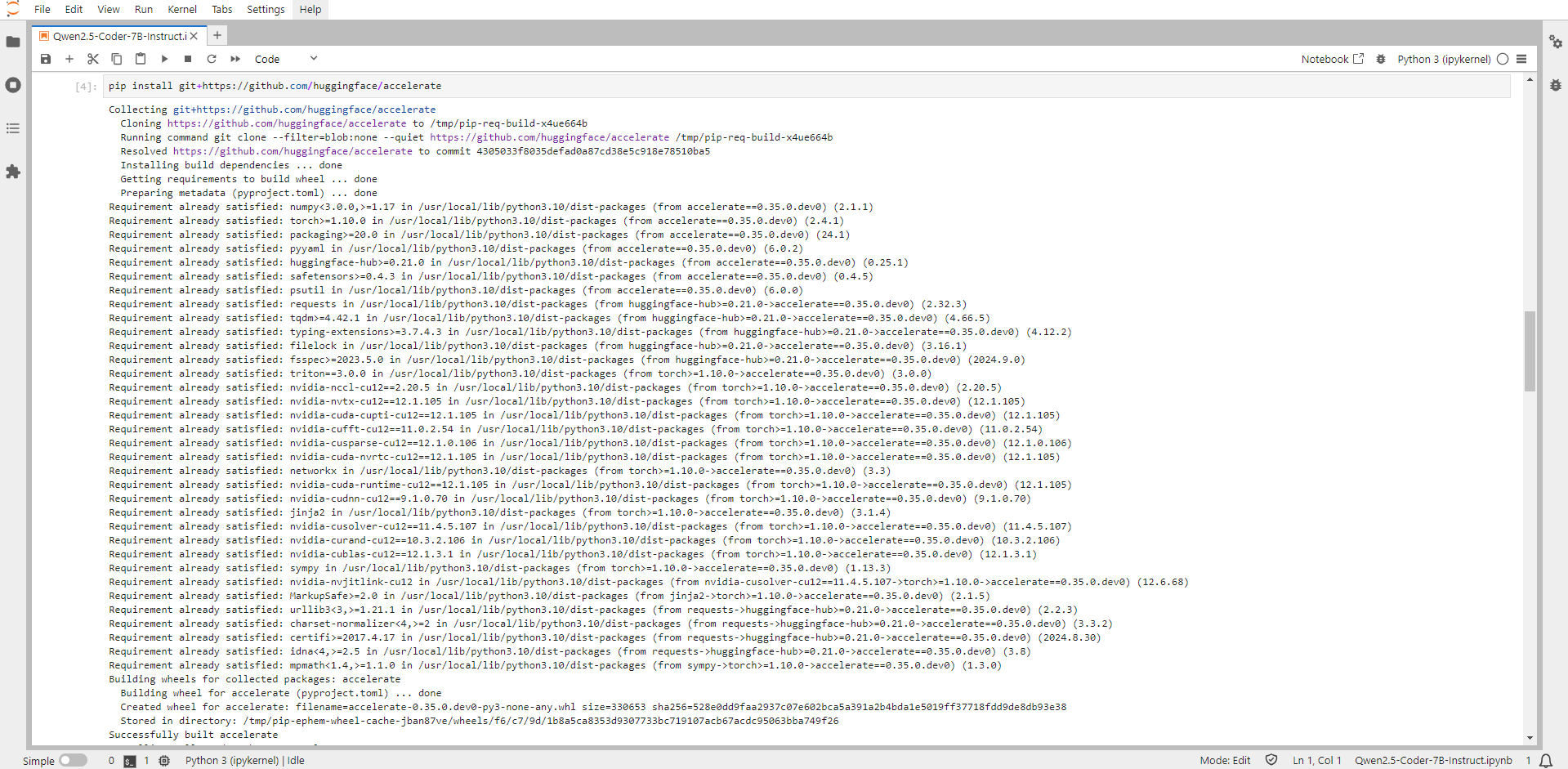

Step 10: Install Accelerate from GitHub

To install Accelerate, run the following command in the Jupyter Notebook cell:

pip install git+https://github.com/huggingface/accelerate

Accelerate is a library that enables the same PyTorch code to be run across any distributed configuration by adding just four lines of code.

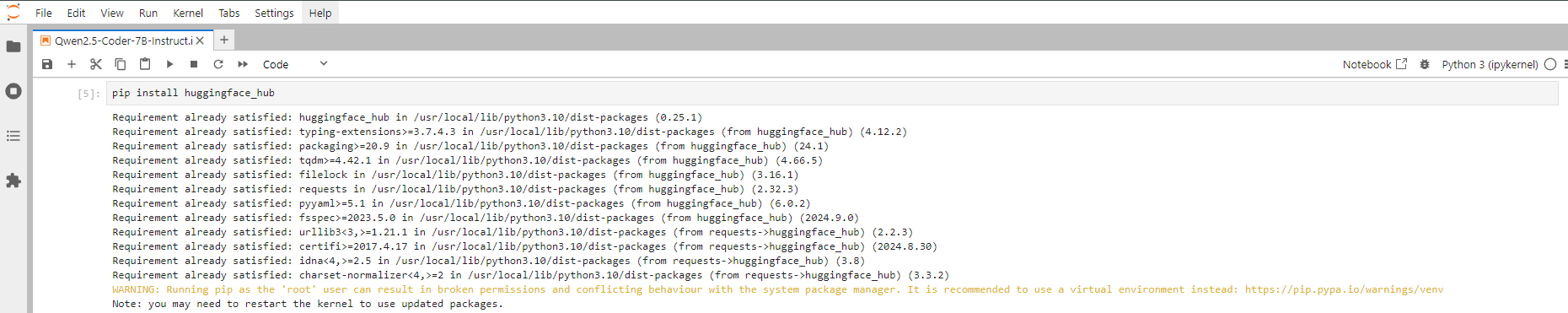

Step 11: Install Huggingface Hub

To install the Hugging Face Hub library, run the following command in the Jupyter Notebook cell:

pip install huggingface_hub

Hugging Face Hub is the go-to place for sharing machine learning models, demos, datasets, and metrics. The huggingface_hub library helps you interact with the Hub without leaving your development environment.

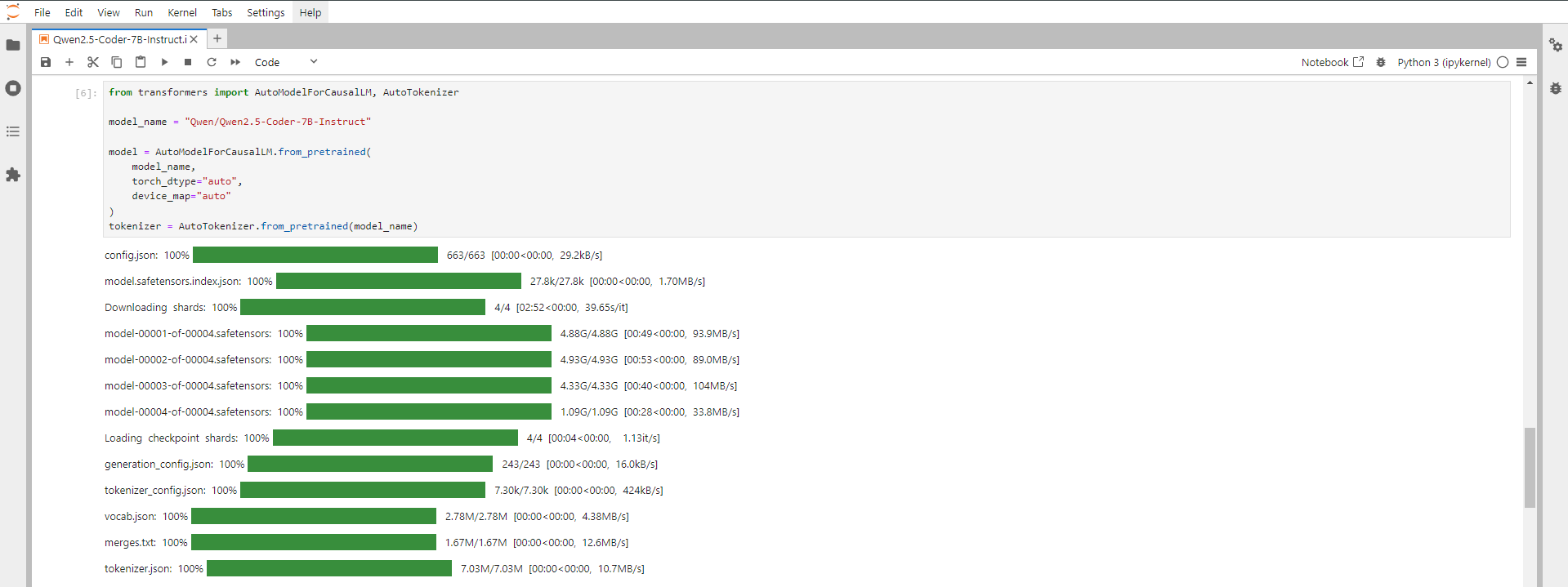
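
Before loading the model, it can be worth confirming that the four packages from Steps 8 to 11 are actually installed in the notebook's kernel. The sketch below uses only the standard library; the helper name `installed_versions` is illustrative.

```python
from importlib import metadata

# Packages installed in Steps 8 to 11.
REQUIRED = ["torch", "transformers", "accelerate", "huggingface_hub"]


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


for name, version in installed_versions(REQUIRED).items():
    print(f"{name:<16}{version or 'NOT INSTALLED'}")
```

If any package is reported as missing, rerun the corresponding install step and restart the kernel before continuing.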

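Step 12: Load the Qwen2.5-Coder-7B-Instruct Model and Tokenizer

Run the following code in a Jupyter Notebook cell to download and load the model and its tokenizer: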

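from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen2.5-Coder-7B-Instruct"

# Download the weights (first run only) and load them onto the available device(s).
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",  # use the dtype stored in the checkpoint
    device_map="auto",   # let Accelerate decide where to place the weights
)
tokenizer = AutoTokenizer.from_pretrained(model_name)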

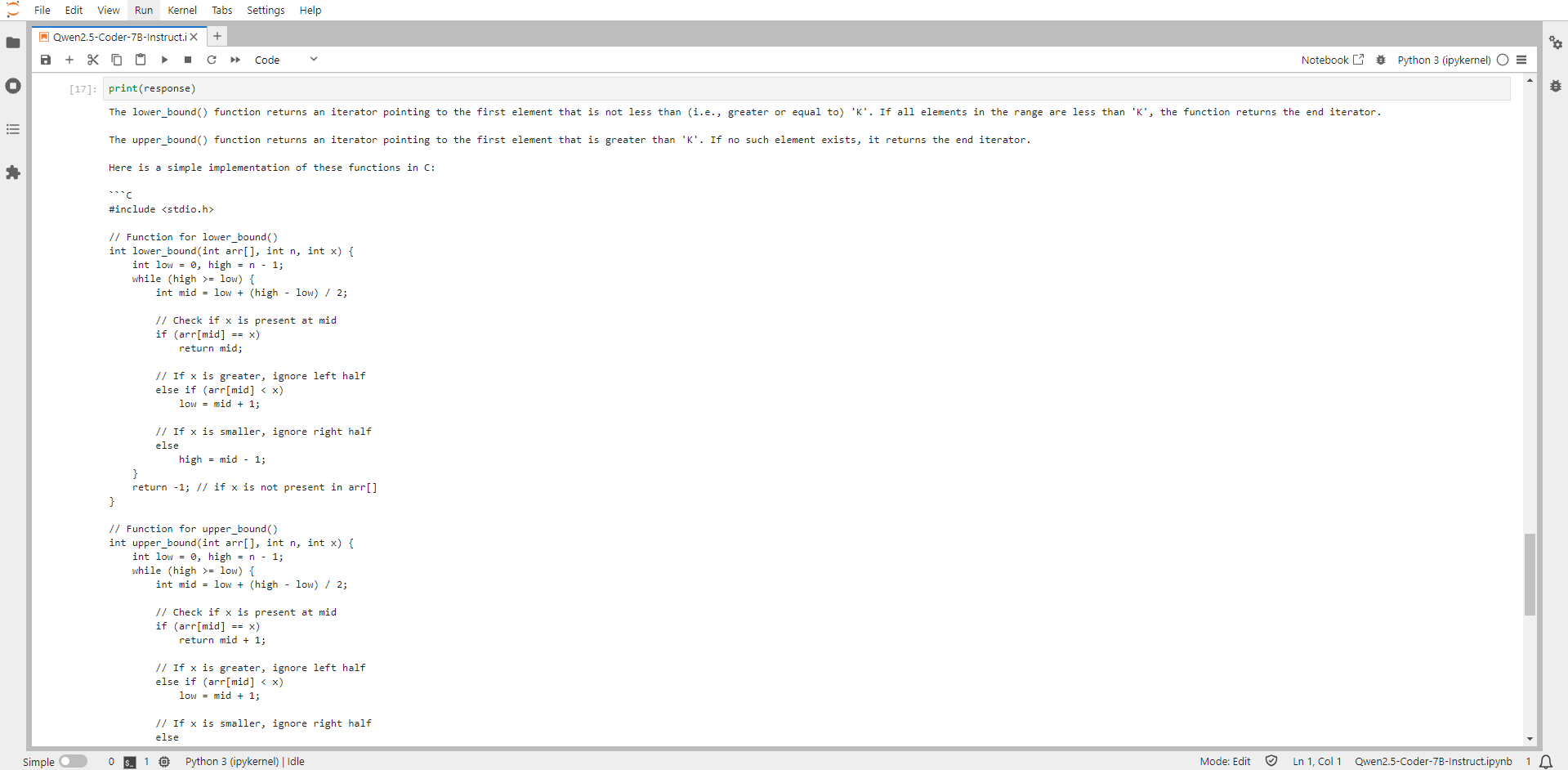

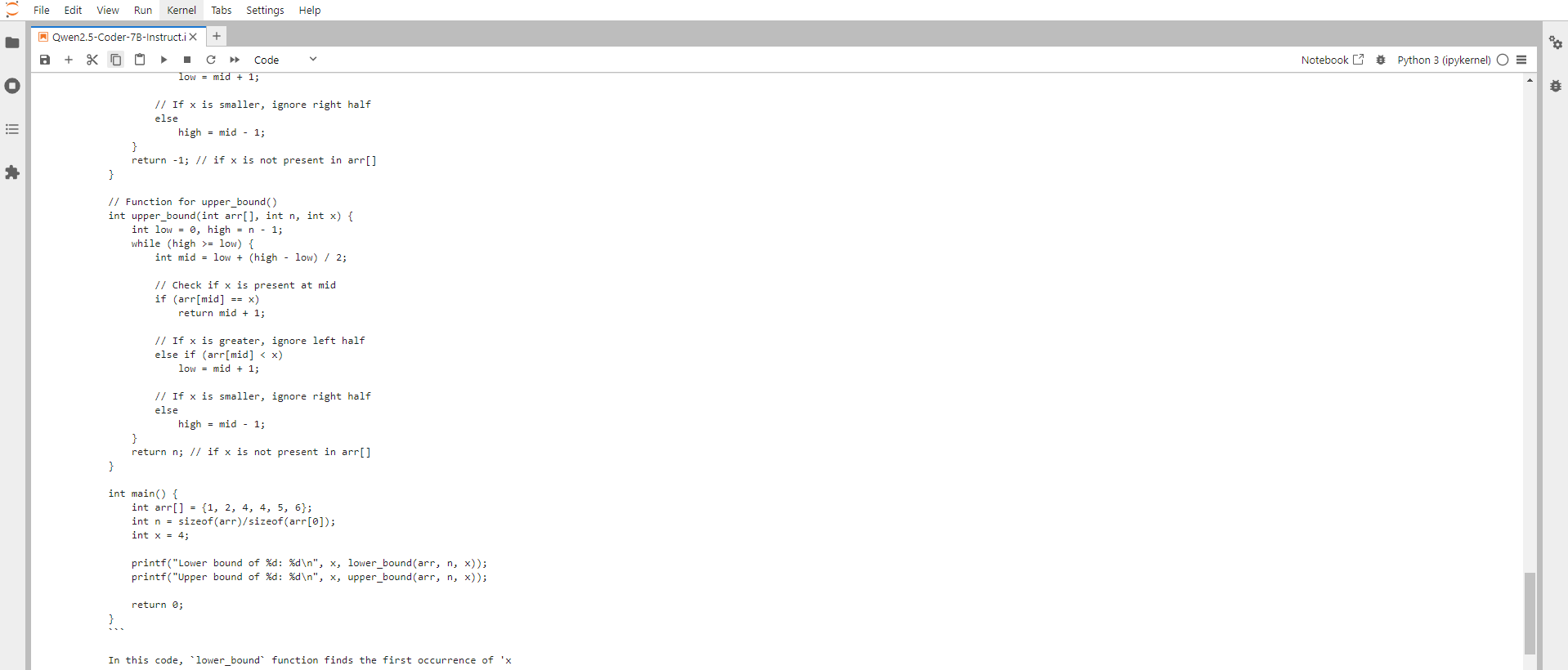
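
The tokenizer also carries a chat template, which Step 13 uses through `apply_chat_template` to turn a list of messages into one prompt string. As a rough illustration of what that string looks like, the sketch below hand-renders messages in the ChatML-style layout that Qwen models use. It is a simplified approximation written for this tutorial, not the tokenizer's actual template (which also handles details such as a default system message and tool calls); inspect `tokenizer.chat_template` for the authoritative version.

```python
def render_chatml(messages, add_generation_prompt=True):
    """Simplified, hand-written approximation of a ChatML-style chat template."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    ]
    if add_generation_prompt:
        # Open the assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


example = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Write a binary search in C."},
]
print(render_chatml(example))
```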

Step 13: Run the Prompt and Print the Output

prompt = "Given a sorted array arr[] of N integers and a number K, the task is to write the C program to find the upper_bound() and lower_bound() of K in the given array."

# Build the conversation: a system message plus the user's request.
messages = [
    {"role": "system", "content": "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."},
    {"role": "user", "content": prompt},
]

# Render the conversation as a single prompt string that ends with the assistant turn.
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)

# Tokenize the prompt and move the tensors to the model's device.
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# Generate up to 512 new tokens.
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=512,
)

# Drop the prompt tokens so that only the newly generated tokens remain.
generated_ids = [
    output_ids[len(input_ids):]
    for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
print(response)
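
One step in the cell above is easy to miss: `model.generate` returns the prompt tokens followed by the new tokens, so the list comprehension slices off the first `len(input_ids)` entries of each sequence. The sketch below shows the same slicing on plain Python lists with made-up token IDs; the function name `strip_prompt_tokens` is illustrative.

```python
def strip_prompt_tokens(input_ids_batch, output_ids_batch):
    """Remove each sequence's prompt prefix, keeping only newly generated tokens."""
    return [
        output_ids[len(input_ids):]
        for input_ids, output_ids in zip(input_ids_batch, output_ids_batch)
    ]


# Made-up token IDs: a 3-token prompt followed by 3 generated tokens.
prompt_ids = [[101, 102, 103]]
full_ids = [[101, 102, 103, 7, 8, 9]]

print(strip_prompt_tokens(prompt_ids, full_ids))  # [[7, 8, 9]]
```

Without this step, the decoded response would begin with a copy of the system and user messages.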

Conclusion

Qwen2.5-Coder-7B-Instruct is a groundbreaking open-source model from Alibaba Cloud that brings state-of-the-art AI capabilities to developers and researchers. Following this guide, you can quickly deploy Qwen2.5-Coder-7B-Instruct on a GPU-powered Virtual Machine with NodeShift, harnessing its full potential. NodeShift provides an accessible, secure, affordable platform to run your AI models efficiently. It is an excellent choice for those experimenting with Qwen2.5-Coder-7B-Instruct and other cutting-edge AI tools.